Explainable AI

Intro

One of the most important guiding design principles for intelligent systems is to empower end users to do their task efficiently. To help build trust between human and machine, we need to provide sufficient information about the underlying model and the reasoning behind the results of an algorithm. This aspect is even more critical in business applications, where users are held accountable for all decisions they make.

“Explainable AI” means explaining the reasoning behind intelligent system proposals in context and at the right time.

Usage

Consider using AI explanations if one or more of the following conditions apply:

- Criticality – There is a high risk associated with making the wrong decision. Once applied, actions are hard to reverse.

By contrast, if the risk is low and actions can easily be rolled back, users might not need an explanation for the system proposal.

- Complexity – It’s hard for users to assess the impact and quality of their decisions without additional information.

By contrast, if users can easily tell when a proposal fits (without training), they probably don’t need additional input.

- Transparency – Your business case is subject to extensive auditing requirements. Auditors must be able to trace transactions back and see the underlying reasoning for each execution step.

Note that auditors may require more information than end users.

By contrast, if there are no auditing requirements, explanations might not be necessary (assuming they are also not required by end users).

- Volatility – To adapt to changing conditions or requirements, your AI application relies on continuous feedback.

By contrast, if the feedback has little or no effect on the output of the algorithm or the user experience, it is more distracting than helpful to provide an additional explanation.
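The four conditions above can be read as a simple checklist. The following TypeScript sketch only illustrates that reading. The `UseCaseProfile` type, its field names, and both helper functions are hypothetical and not part of SAP Fiori or SAPUI5.

```typescript
// Hypothetical checklist type: one flag per condition from the list above.
interface UseCaseProfile {
  highRiskOrHardToReverse: boolean; // Criticality
  impactHardToAssess: boolean;      // Complexity
  auditingRequired: boolean;        // Transparency
  reliesOnUserFeedback: boolean;    // Volatility
}

// Returns the names of the conditions that apply. An empty list suggests
// that explanations may not be needed for this use case.
function applicableConditions(profile: UseCaseProfile): string[] {
  const checks: Array<[string, boolean]> = [
    ["Criticality", profile.highRiskOrHardToReverse],
    ["Complexity", profile.impactHardToAssess],
    ["Transparency", profile.auditingRequired],
    ["Volatility", profile.reliesOnUserFeedback],
  ];
  return checks.filter(([, applies]) => applies).map(([label]) => label);
}

// Explanations are worth considering if one or more conditions apply.
function needsExplanation(profile: UseCaseProfile): boolean {
  return applicableConditions(profile).length > 0;
}
```

A result of `false` does not rule out explanations. It only indicates that none of the listed conditions was identified for the use case.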

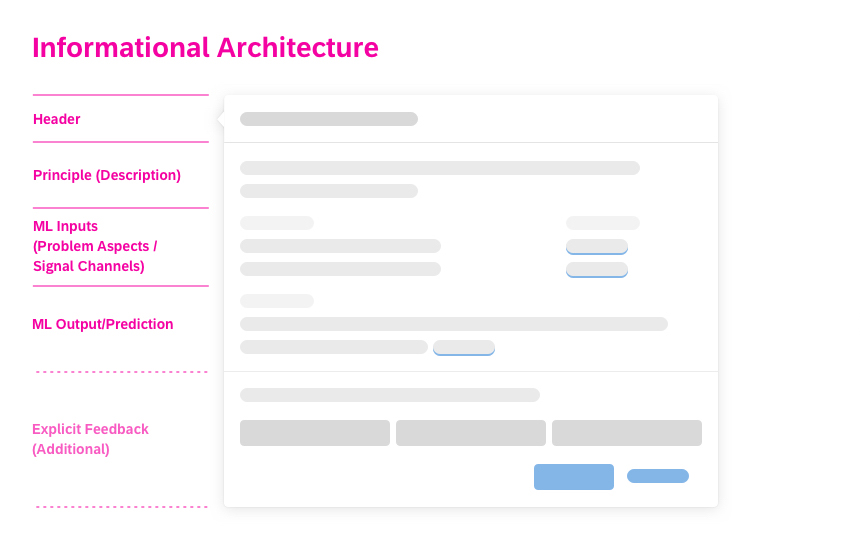

What Needs to be Explained?

When AI (machine learning) is introduced into applications, systems originally designed to “react” can become active players that propose, change or create elements in the interface. With this, fundamental UI concepts like objects, actions, or attributes are manipulated not only by humans, but also by the AI algorithms.

To avoid confusion and loss of control, and to ensure usability, users need to be aware of the AI working principles, actions, and consequences at any point in time. When designing AI services, we therefore recommend following our guiding principles for intelligent systems.

How Much Detail Do Users Need?

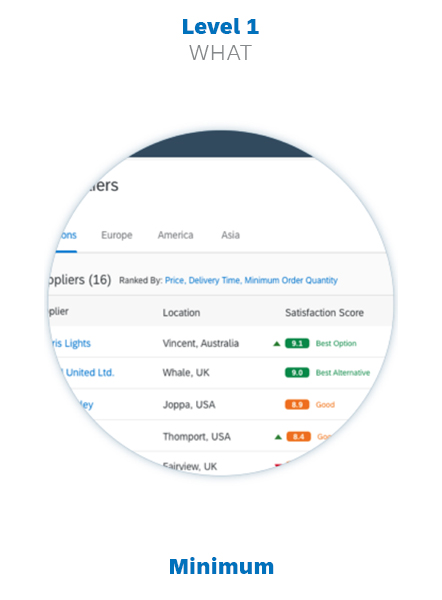

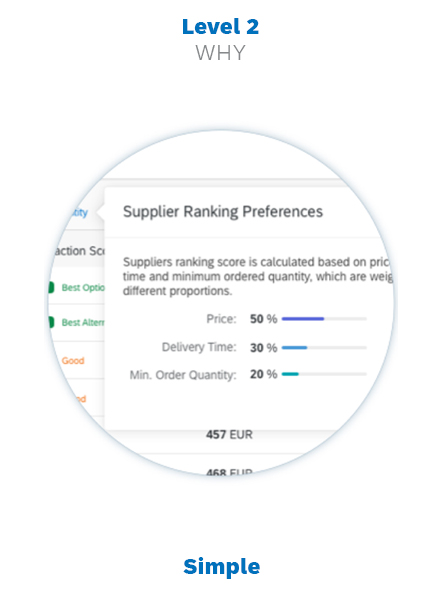

The amount of information users need to understand a system proposal can vary. This depends on the applied AI (machine learning), usage context, user task, and user role. We differentiate between three explanation levels: minimum, simple, and expert.

What does this mean in practice?

- Decide which explanation levels are applicable for your user profile. While some user roles might benefit from all levels (such as data scientists and software engineers), other users won’t need all levels.

- Use the explanation levels to apply progressive disclosure principles to your AI explanations.

- Think about how users will access each level. The indicators can look and behave differently, depending on the user’s permission to access the next explanation level.

Progressive Disclosure

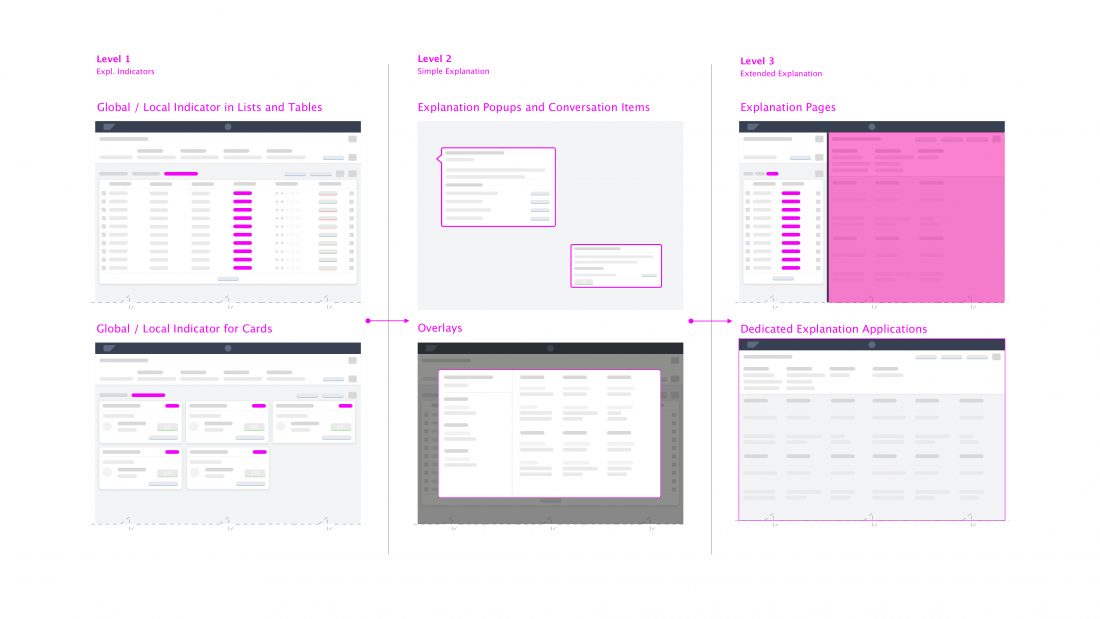

Use the progressive disclosure technique to avoid overwhelming users with too much information at once. Show the most frequently used information in a concise form on the main screen (Explanation Level 1 – Explanation Indicator), with an option to drill down to more detailed information on secondary screens (Explanation Level 2 – Explanation Popover/Overlay). The benefit of this approach is that the explanations do not clutter the existing user interface, and users only need to concern themselves with details if they actually need them.

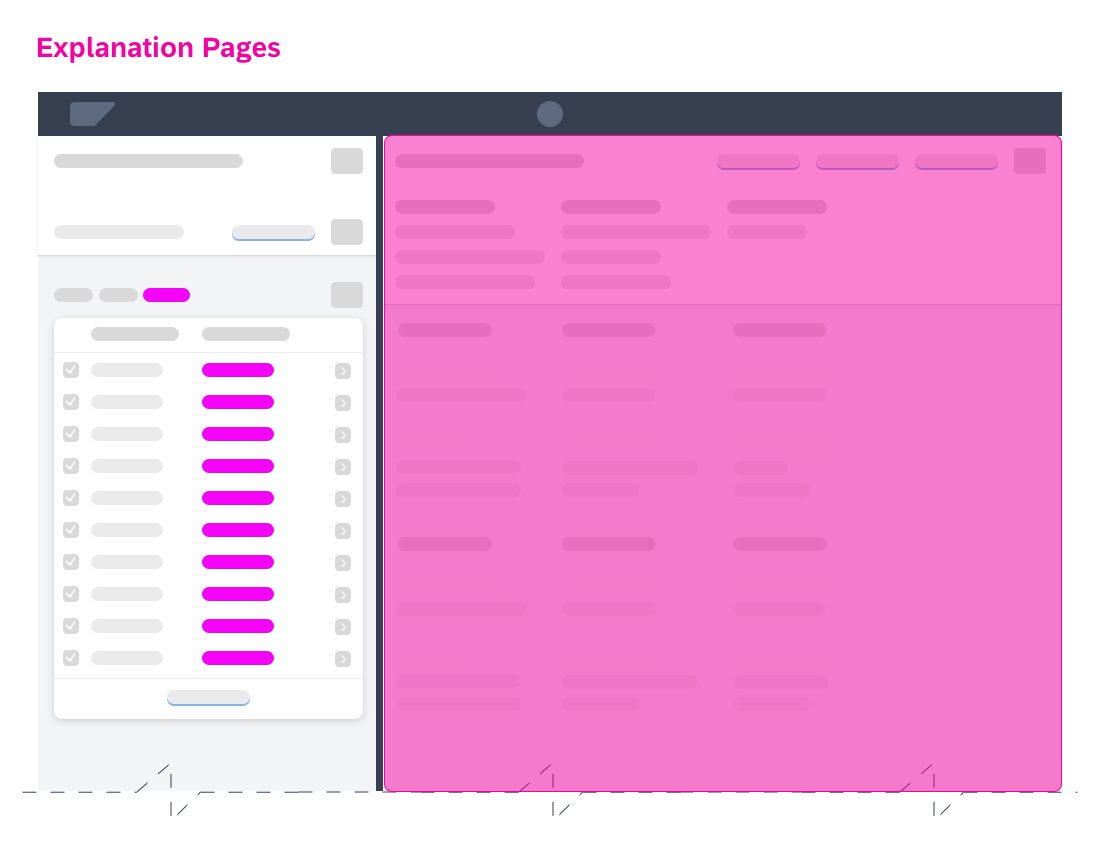

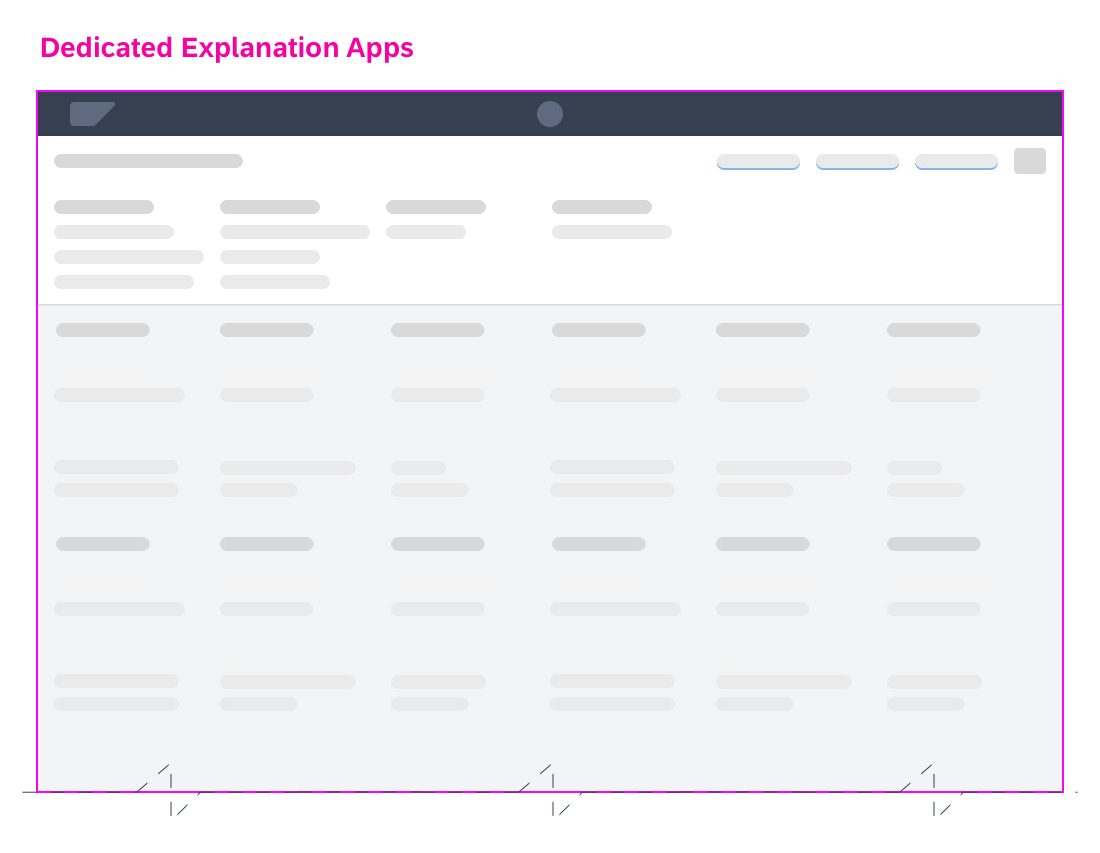

Use a separate page or app to offer a more demanding analysis of the AI, such as benchmarking or monitoring, or to let users make improvements (Explanation Level 3 – Extended Explanation Page/Monitor). Give access to this page or app via the explanation popover or by means of a dedicated tile on the SAP Fiori launchpad.

Overview of Progressive Disclosure Levels

| Level | Type of Information |
| --- | --- |
| Level 0 | No additional explanation elements. For cases where users do not expect or require explanation from the system. |
| Level 1 | Shows a short contextual explanation with or without the option to get more detailed information. This is used in simple cases where a short explanation clarifies the issue. |
| Level 2 | Presents condensed information, including tables, charts, or any other UI elements that offer more insight into the issue. Users access this level by clicking the explanation provided as a link in Level 1. |
| Level 3 | Presents detailed information including tables, charts, or any other UI elements that offer more insight into the issue. Users access this level by clicking the explanation provided as a link in Level 2. |
| Via Conversational UI | Not really part of the explanation concept, but gives users the option to engage SAP CoPilot (Ask CoPilot button or input field). |

Explanation Components

The UX pattern for explanations builds on the explanation levels above (What? / Why? / How?). There are dedicated UI components for each of these levels.

Level 1: Explanation Indicator

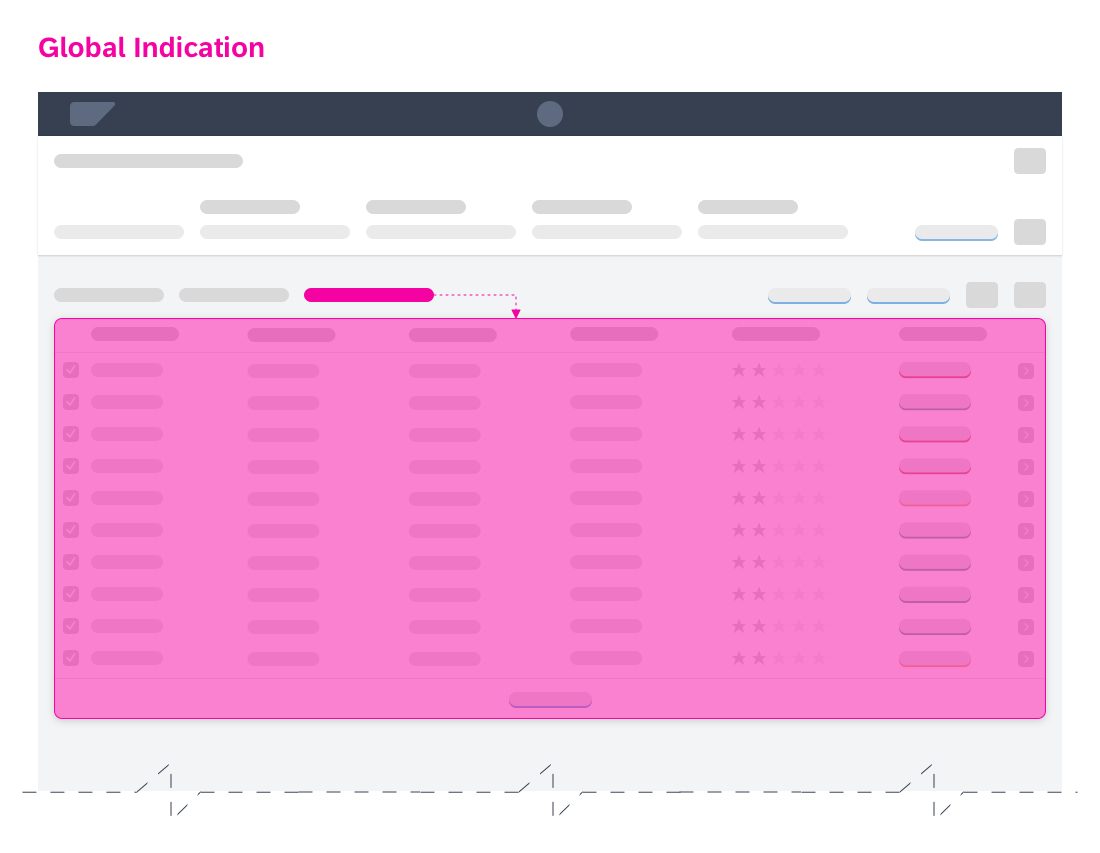

Indicator types mark elements or combinations of elements that are created or manipulated with the help of AI. They provide context about the “kind” and “quality” of a service provided. Explanation indicators can be global or local:

1. Global – At model level

Explains the AI output for a group of elements, such as lists, tables, or whole sections. The global explanation provides the overall context for more complex constellations affecting multiple objects or aspects.

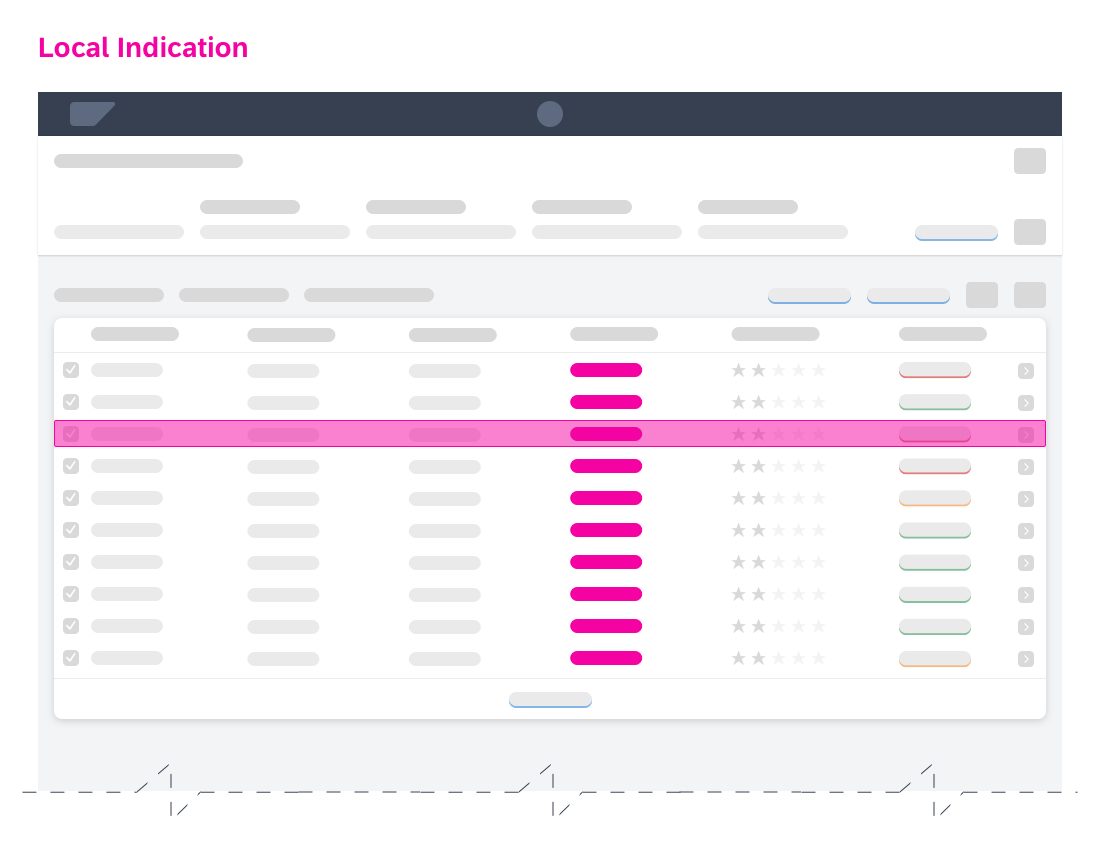

2. Local – At item level

Explains the AI output for single values or items.

It isn’t always necessary to provide explanations at both levels, but it can be necessary if AI affects both the individual object and its position in the group.

Usage of Icons

Do users always need to know when a result comes from an intelligent algorithm? And if so, how should we convey this information?

Currently, we do not have any proven research to indicate that highlighting “UI intelligence” improves the user experience. As a result, we don’t recommend using icons or other “smartness labels” whenever the results of a machine learning algorithm are surfaced.

Indicator Styles

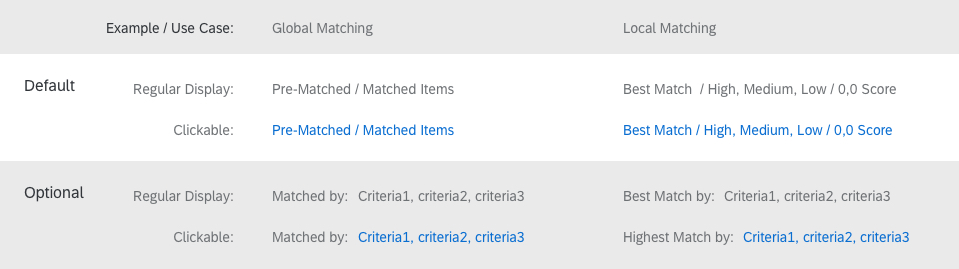

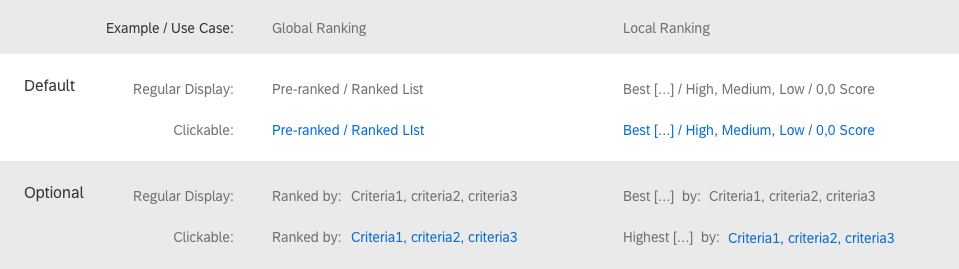

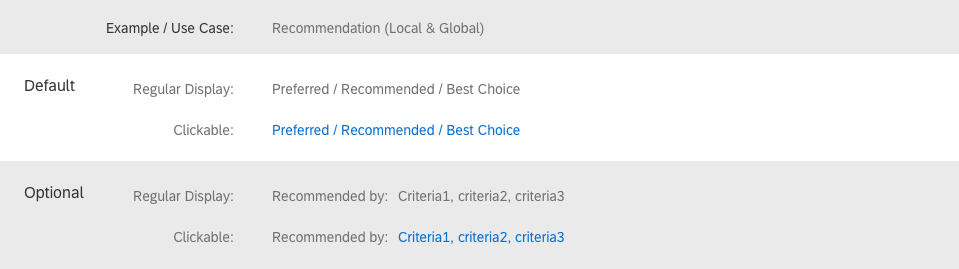

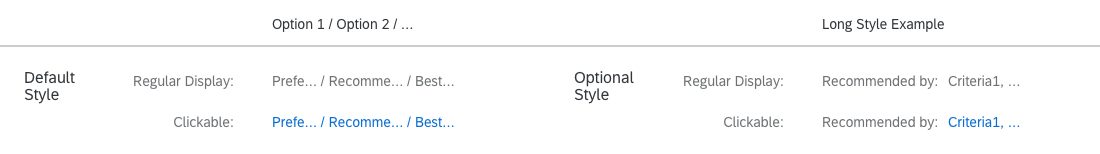

Explanation indicators can be displayed in two styles: a simple “default” style, and an optional “extended” alternative to offer some contextual insight at the first explanation level.

1. Default (for example, a label)

The default style provides a minimum indication of the rating or the quality of the AI output.

2. Extended (for example, label and parameters)

Extended indicators can show up to three key parameters (decision criteria) next to the default label.

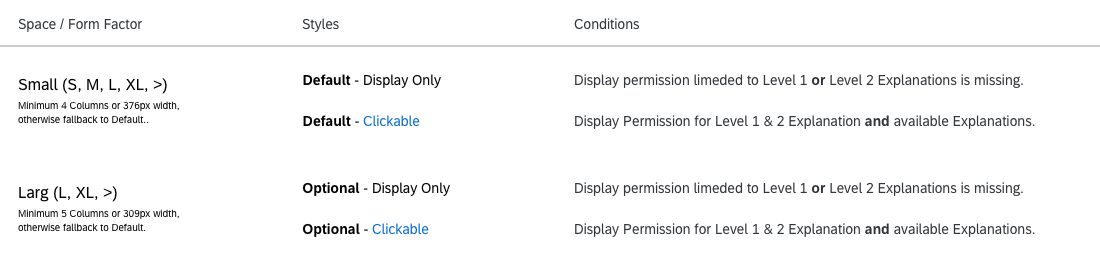

Explanation Indicator Variants

Style Conditions

Apply the following styles for the explanation indicators.

Display clickable explanation indicators if:

- The context is explained in more detail in level 2.

- The user needs access to the level 2 explanations and has the appropriate authorization.

To prevent truncated explanation indicators, apply the following rules:

- Only use the optional extended style if there is sufficient space and no collision with other UI elements.

- If you use the extended style, always offer a fallback to the default indicator for smaller screens or form factors.

- If you have no other options to free up space on the UI (for example, by moving other actions into the overflow), wrap the text.

For more information about responsive and adaptive design, see Multi-Device Support.
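The style conditions above can be sketched as a small decision function. This is a minimal TypeScript illustration under stated assumptions: `IndicatorContext`, `IndicatorPresentation`, and `resolveIndicator` are hypothetical names, and how an app determines "sufficient space" is left to the caller. The limit of three parameters comes from the description of the extended style.

```typescript
type IndicatorStyle = "default" | "extended";

// Hypothetical description of the context in which an indicator is rendered.
interface IndicatorContext {
  hasLevel2Explanation: boolean; // the context is explained in more detail in level 2
  userMayAccessLevel2: boolean;  // the user needs level 2 and is authorized for it
  keyParameters: string[];       // decision criteria available for the extended style
  hasSpaceForExtended: boolean;  // enough room, no collision with other UI elements
}

interface IndicatorPresentation {
  style: IndicatorStyle;
  clickable: boolean;
  shownParameters: string[];
}

// Extended indicators show up to three key parameters.
const MAX_EXTENDED_PARAMETERS = 3;

function resolveIndicator(ctx: IndicatorContext): IndicatorPresentation {
  // Clickable only if there is something to drill into and the user may see it.
  const clickable = ctx.hasLevel2Explanation && ctx.userMayAccessLevel2;
  // Fall back to the default style if space or parameters are missing.
  const canExtend = ctx.hasSpaceForExtended && ctx.keyParameters.length > 0;
  return {
    style: canExtend ? "extended" : "default",
    clickable,
    shownParameters: canExtend
      ? ctx.keyParameters.slice(0, MAX_EXTENDED_PARAMETERS)
      : [],
  };
}
```

Keeping the fallback inside one function means the default style is always available, so smaller screens never receive a truncated extended indicator.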

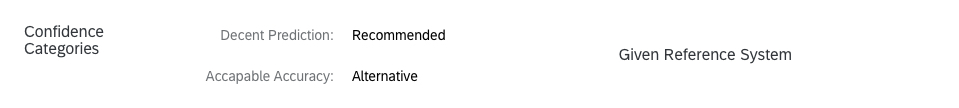

Confidence Indicator

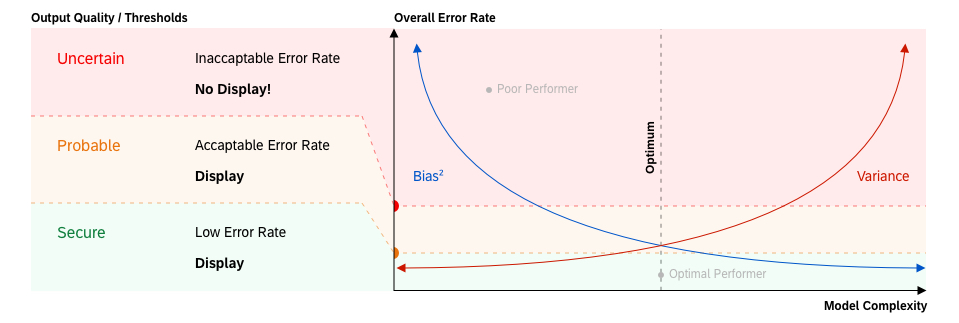

A confidence indicator is a variant of the explanation indicator, and is used to indicate the quality for an individual result.

A common approach in AI applications is to use percentage values to indicate the quality of a prediction. However, there is a usability issue with this method. People interpret confidence percentages differently, depending on their character, their mood, and the current situation. Some users might reject predictions with a relatively high confidence rating (like 84%). Others will go with a proposal despite a much lower confidence rating (like 64%). The percentage value for the confidence level has no bearing on the actual output. Poor user choices can also adversely affect learning algorithms: the machine then learns from inefficient or misleading feedback.

To avoid this, we recommend using simplified prediction ratings, such as “Recommended”, “Preferred”, or “Best…”. With this approach, you also supply a reference system for interpreting the rating.
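One way to derive such simplified ratings is to rank the proposals and label them relative to each other, so that the raw score never reaches the UI. The TypeScript sketch below is an illustration only. The assignment of “Preferred” to the top-ranked proposal and “Recommended” to the remaining qualifying ones is an assumption, as are the `RECOMMENDED_MIN_SCORE` cut-off and all type and function names.

```typescript
interface ScoredProposal {
  id: string;
  score: number; // model confidence between 0 and 1, not shown to the user
}

interface LabeledProposal {
  id: string;
  rating: "Preferred" | "Recommended" | null; // null: no rating label is shown
}

// Assumed cut-off; a real threshold has to be defined per use case.
const RECOMMENDED_MIN_SCORE = 0.7;

function labelProposals(proposals: ScoredProposal[]): LabeledProposal[] {
  // Sort a copy so that the caller's array is left untouched.
  const ranked = proposals.slice().sort((a, b) => b.score - a.score);
  return ranked.map((proposal, index) => {
    let rating: LabeledProposal["rating"] = null;
    if (proposal.score >= RECOMMENDED_MIN_SCORE) {
      // The best qualifying proposal gets the stronger label.
      rating = index === 0 ? "Preferred" : "Recommended";
    }
    return { id: proposal.id, rating };
  });
}
```

Because the labels are relative to the other proposals, they give users the reference system that a bare percentage lacks.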

Nevertheless, in some scenarios you may want to emphasize the rating or ranking of items. In such cases, you can also use scoring indicators for local explanations (see the examples for indicator styles above). This is because the AI output (which is a score or rating) and the explanation indicator are the same element in this scenario. For more information, see Ranking.

Level 2: Simple Explanation

Explanation Popover/Overlay

Users access the explanation popover by clicking the explanation indicator. The simple explanation provides contextual information beyond quality ratings, and lists or visualizes key factors, inputs, or criteria that led to the AI result. We recommend limiting this breakdown to 3-5 factors if there is no critical reason to have more. Users may not benefit from greater detail, since more conditional facts also trigger more questions and cross checks. To ensure a consistent experience, structure the explanation popover as described below.

Explanation popovers, screen overlays, or conversational items can include the following elements:

- Self-explanatory header

- Short explanation describing what the AI has performed in natural/human language

- Optional: A list of the most important parameters that influenced the result being explained. This can include a comparison to averages or reference values, if required.

- Optional: A short explanation in natural/human language of how the AI has performed in comparison to a given reference (historical cases, given data, average prediction accuracy, or similar). This can be combined with performance indicators or a trend.

- Optional: Feedback controls
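The popover structure listed above, together with the recommendation to limit the breakdown to 3-5 factors, can be modeled roughly as follows. This TypeScript sketch is not an SAPUI5 API. `ExplanationPopoverContent`, `ExplanationFactor`, the numeric `influence` weight, and `buildPopoverContent` are hypothetical names chosen for illustration.

```typescript
interface ExplanationFactor {
  label: string;
  influence: number; // assumed relative weight; the sign indicates direction
}

interface ExplanationPopoverContent {
  header: string;                 // self-explanatory header
  summary: string;                // short natural-language explanation
  factors?: ExplanationFactor[];  // optional: most important parameters
  performanceNote?: string;       // optional: comparison to a reference
  showFeedbackControls?: boolean; // optional: feedback controls
}

// Upper bound taken from the recommended range of 3-5 factors.
const MAX_FACTORS = 5;

function buildPopoverContent(
  header: string,
  summary: string,
  allFactors: ExplanationFactor[]
): ExplanationPopoverContent {
  // Keep only the strongest factors, regardless of direction.
  const factors = allFactors
    .slice()
    .sort((a, b) => Math.abs(b.influence) - Math.abs(a.influence))
    .slice(0, MAX_FACTORS);
  return { header, summary, factors };
}
```

Sorting by absolute influence is one possible choice. An app could equally let the AI service decide which factors count as most important.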

Natural Language Explanations

Natural language explanations are a variation of AI explanations that apply conversational interaction principles as they are used in conversational interfaces. Reasoning and relations are represented in the form of sentences and non-technical speech.

Use natural language to summarize the overall situation and to explain dependencies, origins or principles. For more information about the conversational user interaction, see the guideline for SAP CoPilot.

To display the simple description, we recommend using plain text.

Content Guidelines

Make it easy to identify the most relevant information

- Use the most suitable format for conveying information. This can be language, visual elements, or a combination of both.

- In longer texts, emphasize important information. This makes it easier for users to scan the content quickly.

- Be careful with text formatting and visuals; do not use them for decoration only.

- Try to restrict text explanations to no more than 3 short sentences.

Reveal information progressively

- Add value with each disclosure level. Do not just repeat information from the previous level.

- Make sure that the information on each level is self-contained and that all elements form a natural flow.

- If your use case offers the Ask CoPilot entry field, think of a smooth transition to the CoPilot panel. Guide the user and offer a useful hint. Use the phrase “Try asking”, followed by the hint.

Best Practices for Explanation Texts

| Scenario | Examples |
| --- | --- |
| Adding value at each disclosure level | Level 1: Ranked By: Price, Delivery Time, Minimum Order Quantity<br>Level 2: |
| Specific information | “Polaris Lights” offers the best price (470 USD) for the product “Taillight”, with the lowest minimum order quantity (300 PC). Delivery (14 days) takes longer than average. Best price with lowest minimum order quantity. Delivery takes longer than average. |
| Just enough formatting | This is high priority because the net payment is due soon (in 3 days) and the amount is small (1.200,00 USD). Customer Electronics usually pays on time. |

Level 3: Extended Explanation

Explanation Page/Monitor

Use the extended explanation page to offer a more in-depth analysis. You can either direct users to a separate, dedicated application, or provide an additional page within your own app. This will depend on how much control and insight you are willing or able to provide for the technology being used. In most cases, extended explanations have a distinct use case that requires a separate implementation. Since AI analysis is relevant for only very few roles, links to the explanation page (from explanation level 2) must only be offered to users with appropriate authorization. Do not offer the link to regular business users.

Explanation via Conversational UI (SAP CoPilot)

An additional option for providing contextual information is to offer a conversational UI (digital assistant) that can answer a user’s questions about items on the current screen. Ultimately, it should also be possible to ask a digital assistant about the origins of AI results. However, further investigation is required to define how and when to expose AI explanations using a digital assistant.

Behavior and Interaction

When to Display Explanations

Display explanation components only when the AI service is able to provide predictions with reasonable confidence/accuracy. The threshold for acceptable prediction quality must be defined on a case-by-case basis, and depends on the given use case and AI capabilities. Nevertheless, we do not recommend exposing any predictions that have only poor reliability. Unreliable results lead to damaged user trust and hinder adoption of the AI functionality.
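As a rough illustration of this rule, the check can sit in front of both the proposal and its explanation components. The TypeScript sketch below assumes a hypothetical `PredictionResult` shape with a confidence value between 0 and 1. The threshold is passed in by the caller because it has to be defined per use case.

```typescript
// Hypothetical result shape returned by an AI service.
interface PredictionResult {
  value: string;
  confidence: number; // assumed range: 0 (no confidence) to 1 (full confidence)
}

// Returns the prediction if it is reliable enough to expose. Otherwise it
// returns undefined, meaning that neither the proposal nor its explanation
// components should be rendered.
function displayablePrediction(
  prediction: PredictionResult,
  minConfidence: number
): PredictionResult | undefined {
  if (minConfidence < 0 || minConfidence > 1) {
    throw new RangeError("minConfidence must be between 0 and 1");
  }
  return prediction.confidence >= minConfidence ? prediction : undefined;
}
```

The confidence value is used only for this internal decision. It does not need to be shown to the user.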

How Long is an Explanation Valid?

The validity of an explanation depends on the machine learning cycle. We differentiate two cases based on the explanation lifespan.

Static Explanations

Static explanations are suitable for “offline learning” or instance-based AI services. We assume that the need for a repeated explanation of the model can decrease or even disappear over time as the user gains more experience with the system.

- Once the user understands the main principle of the underlying service (algorithm), consider leaving out explanations at the model level.

- Always display the explanation again if the AI model is changed or updated. This applies to explanations that are hard coded or maintained manually.

Dynamic Explanations

Dynamic explanations are required for “online learning” AI services. This type of explanation is generated (refined) every time the AI has learned and adapted its model. In most cases, it’s not necessary to change the appearance and content of the explanations completely. However, if an AI service discovers new rules to process items in the system, this newly-acquired knowledge must be reflected in its explanations.

- The main AI description adapts to reflect the newly-applied processing principle.

- The list of decision criteria changes whenever objectives/directions change.
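The difference between the two lifespans can be sketched as a single decision about whether to show the model-level explanation. This TypeScript example is a simplification under stated assumptions. The `ModelExplanationState` fields, the use of a model version string to detect changes, and the rule that online-learning services always show their current explanation are all illustrative choices.

```typescript
type LearningMode = "offline" | "online";

// Hypothetical state tracked per user and AI service.
interface ModelExplanationState {
  learningMode: LearningMode;
  currentModelVersion: string;
  lastExplainedVersion: string | null; // model version the user last saw explained
  userKnowsPrinciple: boolean;         // user has understood the main principle
}

function shouldShowModelExplanation(state: ModelExplanationState): boolean {
  if (state.learningMode === "online") {
    // Dynamic explanations: the model adapts continuously, so the
    // regenerated explanation stays visible.
    return true;
  }
  if (state.lastExplainedVersion !== state.currentModelVersion) {
    // Static explanations: always show again after the model changed.
    return true;
  }
  // Static and unchanged: the explanation can be left out once the user
  // understands the underlying principle.
  return !state.userKnowsPrinciple;
}
```

How an app decides that a user "knows the principle" is outside this sketch. It could be an explicit user setting or a usage-based heuristic.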

Responsiveness

Fully Responsive

The explanation indicator and explanation popover components must be fully responsive and accessible on all devices.

Partially Responsive

The extended explanation is limited to L and XL form factors (desktop, large tablets) due to its complexity and type of use.

Partially Adaptive

Explanation indicators are partially adaptive due to the two possible display styles (default and extended). The default style is the mandatory format. The extended style is optional for cases where decision criteria are available or beneficial for the user. If the information for the extended display is missing, or there is insufficient screen real estate, revert to the default style.

For more information about responsiveness, see Multi-Device Support.
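The availability rules in this section can be summarized in a small lookup. The TypeScript sketch below is illustrative only. The `FormFactor` values S and M are assumed here as the smaller counterparts of the L and XL form factors named above, and `availableComponents` is a hypothetical helper.

```typescript
// L and XL are named in the text above; S and M are assumed smaller sizes.
type FormFactor = "S" | "M" | "L" | "XL";
type ExplanationComponent = "indicator" | "popover" | "extendedPage";

function availableComponents(formFactor: FormFactor): ExplanationComponent[] {
  // Indicator and popover are fully responsive: available everywhere.
  const components: ExplanationComponent[] = ["indicator", "popover"];
  // The extended explanation is limited to large form factors.
  if (formFactor === "L" || formFactor === "XL") {
    components.push("extendedPage");
  }
  return components;
}
```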

Top Tips

Before you start with your design, conduct research to figure out the answers to the questions below.

If extensive research is not an option, use a heuristic evaluation method to test your design. This should be the minimum investment.

- Does the user expect an explanation?

Background: If the risks associated with an action are quite low and the results can easily be rolled back, users are not normally interested in an explanation of the system proposal.

- What level of automation are you aiming for?

Background: Depending on the automation level, the use case, target user roles, and app functionality can change dramatically.

- Which other roles are involved in the experience besides your target business user?

Background: The interaction with AI systems also involves technical, non-business roles. Also consider roles involved in development, support, or maintenance.

- How transparent or traceable does your machine learning service have to be?

Background: Some use cases are more critical than others. Due to legal and regulatory provisions, enterprise applications are often subject to stricter auditing requirements than consumer software.

- Are your users generally able to relate to the displayed information and data, and can they infer the follow-on actions and impact (detail and explicitness)?

Background: Although AI can help optimize even simpler tasks, providing AI explanations might be overengineering when the user fully understands the results and implications.

- How harmful can wrongly processed data be for the business of your client?

Background: Some actions in processes are critical by design and can have serious side effects. AI explanations must safeguard such scenarios and help prevent any damage or disruption.

- How easy or difficult is it to revert changes applied to the system or process?

Background: In scenarios where the user faces deadlines, period-end closing, or other actions that must succeed the first time, providing information to support the user is crucial. However, users may not need explanations if it is possible to revert everything instantly in case of failure.

- Does your business case require continuous adaptation?

Background: We assume that the need for a repeated (static) explanation of the model can decrease or even disappear over time as the user gains more experience. However, if the AI learns dynamically, users must always be informed of changing conditions (dynamic explanations).
