Design for Multi-modality

There are a few things to keep in mind when you’re designing multi-modal interactions:

- Know your users

- Understand the characteristics of each modality

- Know how to get started designing multi-modal interactions

Know Your Users

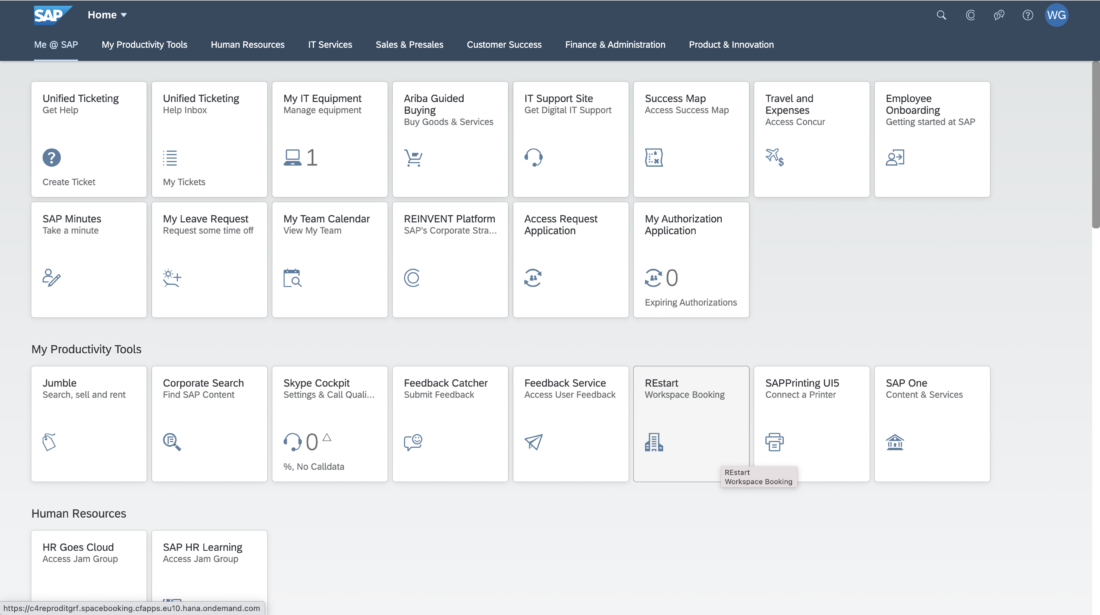

Get to know your users before you create a digital assistant and select an interaction method. As the designer, you should understand user goals, preferences, and behaviors. In a multi-modal design, a problem can have various solutions and interaction methods. Showing users their options lets them choose their preferred path in the current context.

Who and Where Are Your Users?

When you define a user persona, you create a user profile that includes age, job title, job responsibilities, goals, pain points, needs, and location. It’s important to know how users interact with the digital assistant on their devices. Where are they? Are they in the car, in the office, at the airport, cliff diving? Can they type or use voice commands? User locations determine what type of interaction they have with your digital assistant.

In the Car

In the car, the user’s hands are busy driving and their eyes are watching the road, but their voice and ears are available to interact. A voice interface is best here (since the user is a bit busy driving!).

In the Office

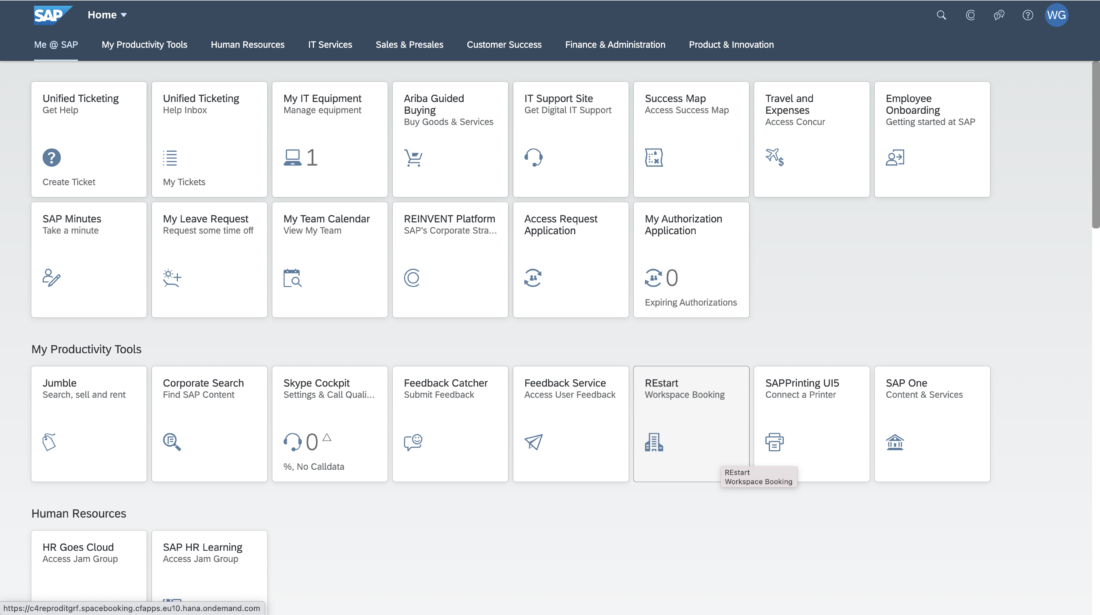

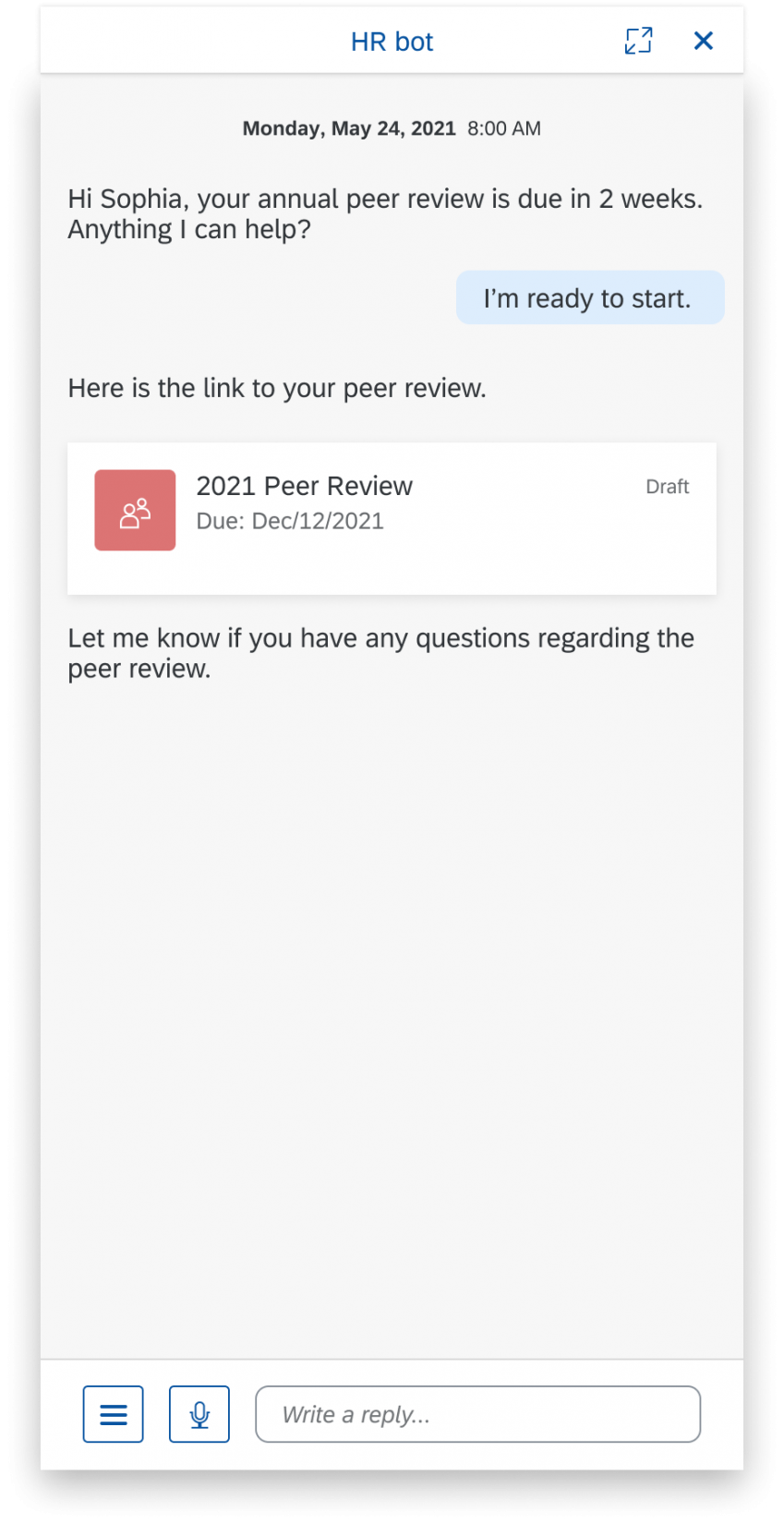

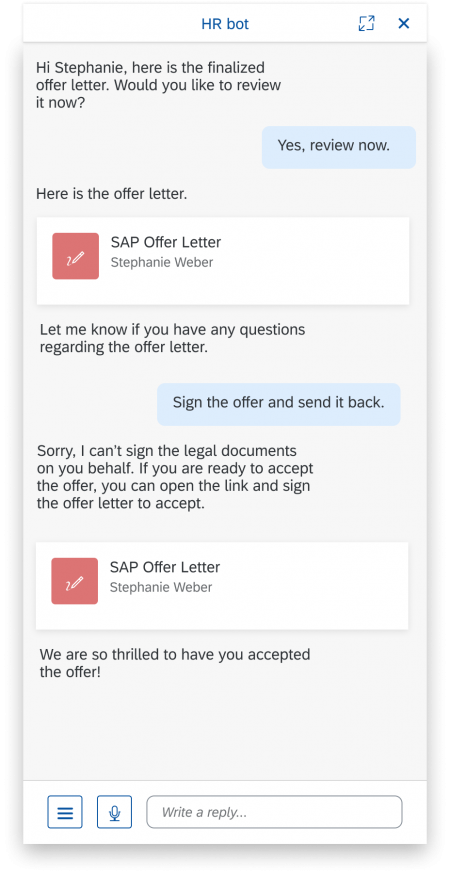

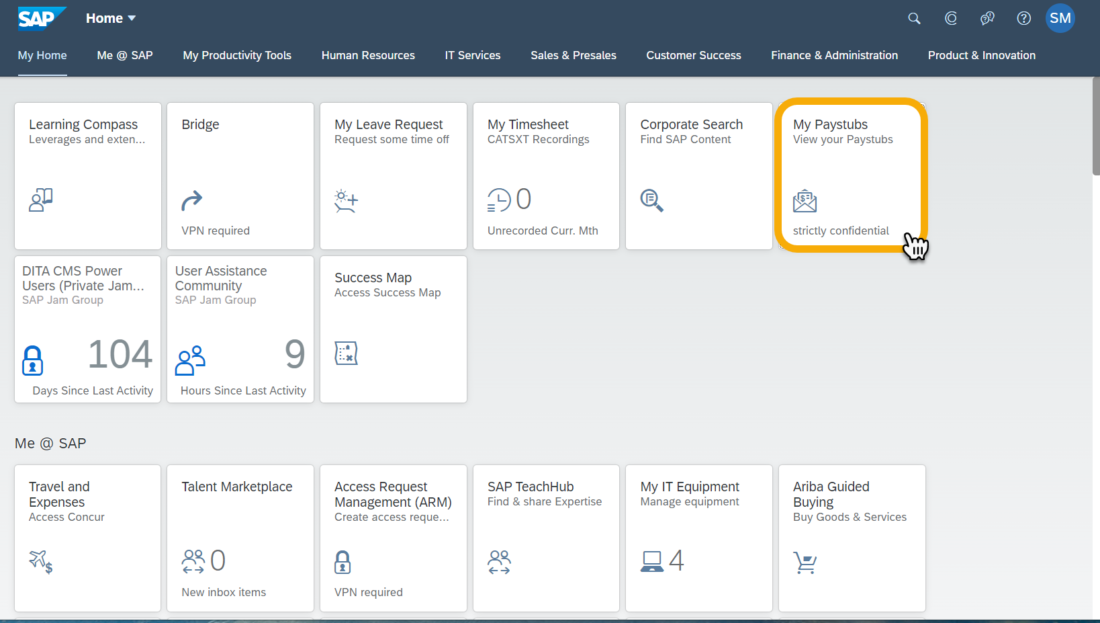

In the office, the user’s hands, eyes, and ears are usually free, but their voice might be restricted, depending on whether coworkers are sitting nearby. In the office, a conversational interface is the user’s best bet, depending on the context and type of information.
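The car and office scenarios above can be sketched as a simple lookup from the user's available channels to candidate modalities. This is only an illustrative sketch: the `UserContext` class, the `suggest_modalities` function, and the modality names are hypothetical and not part of any digital assistant API. A real design would also weigh the context and type of information.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    """Hypothetical model of which of the user's channels are free."""
    hands_free: bool  # can the user type or tap?
    eyes_free: bool   # can the user look at a screen?
    voice_free: bool  # can the user speak aloud?
    ears_free: bool   # can the user listen?


def suggest_modalities(ctx: UserContext) -> list[str]:
    """Return the modalities the user can realistically use right now."""
    options = []
    # Voice needs both speaking and listening to be possible.
    if ctx.voice_free and ctx.ears_free:
        options.append("voice")
    # Text and graphical interfaces need hands and eyes.
    if ctx.hands_free and ctx.eyes_free:
        options.append("text")
        options.append("graphical")
    return options


# Assumed encodings of the two scenarios described above.
in_car = UserContext(hands_free=False, eyes_free=False,
                     voice_free=True, ears_free=True)
office_with_coworkers = UserContext(hands_free=True, eyes_free=True,
                                    voice_free=False, ears_free=True)

print(suggest_modalities(in_car))                 # ['voice']
print(suggest_modalities(office_with_coworkers))  # ['text', 'graphical']
```

Returning a list rather than a single value keeps the choice open, so the assistant can still offer users their options.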

Interaction Modalities

Depending on the situation, one interaction modality might be better suited than another. The digital assistant can respond by using various interaction modalities, such as voice, text, and graphics. Let’s see how various scenarios call for different interaction modalities.

Voice Interface

The user verbally asks the digital assistant a question. The digital assistant responds verbally to the user. It’s a simple question and response.

User speaks: “When is the next sales kick-off?”

Digital assistant speaks: “The next sales kick-off is from March 10 to 18 in Las Vegas, Nevada.”

Voice Interface

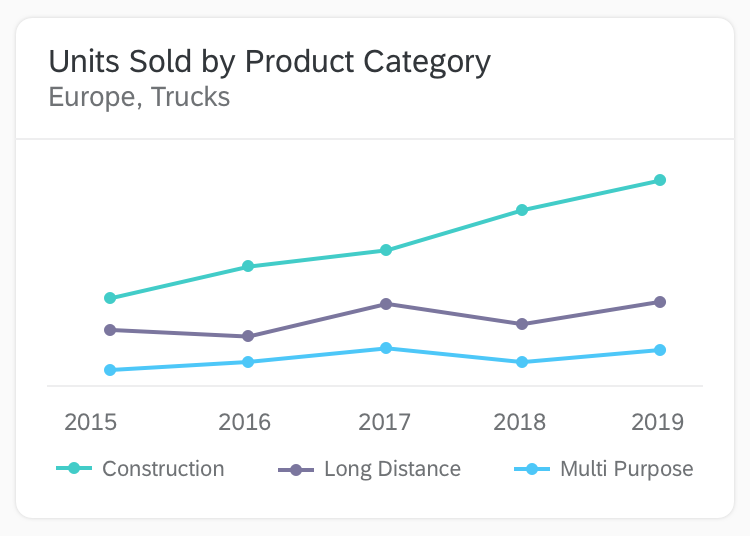

User speaks: “What are the total team sales for last quarter?”

Digital assistant speaks: “The total team sales went up by 15% compared to the first quarter, but were down by 10% compared to the same quarter last year. Here’s the breakdown…”

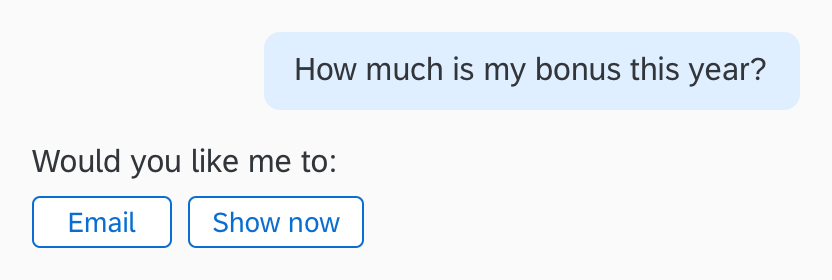

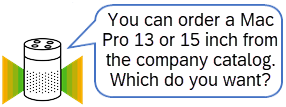

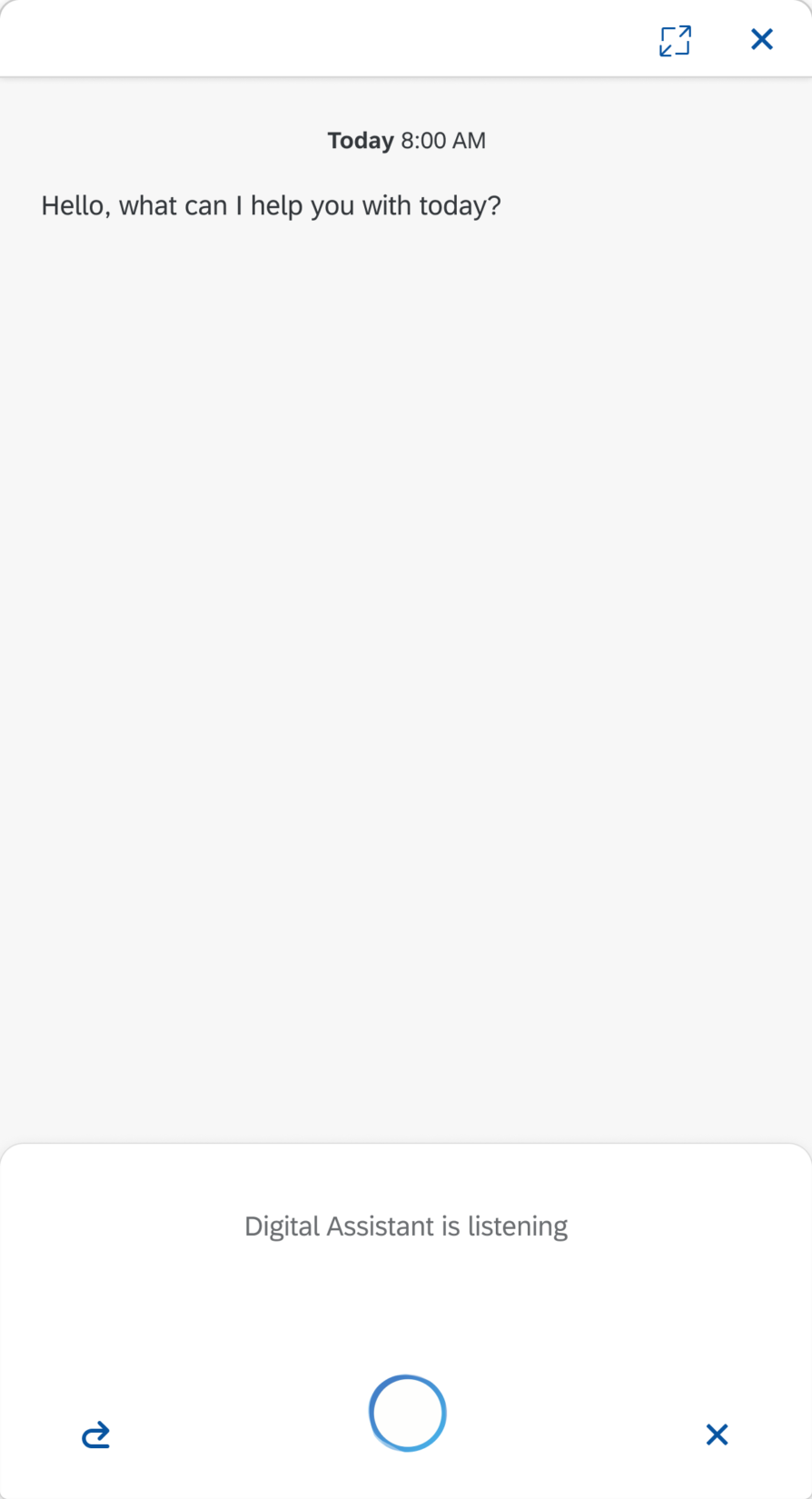

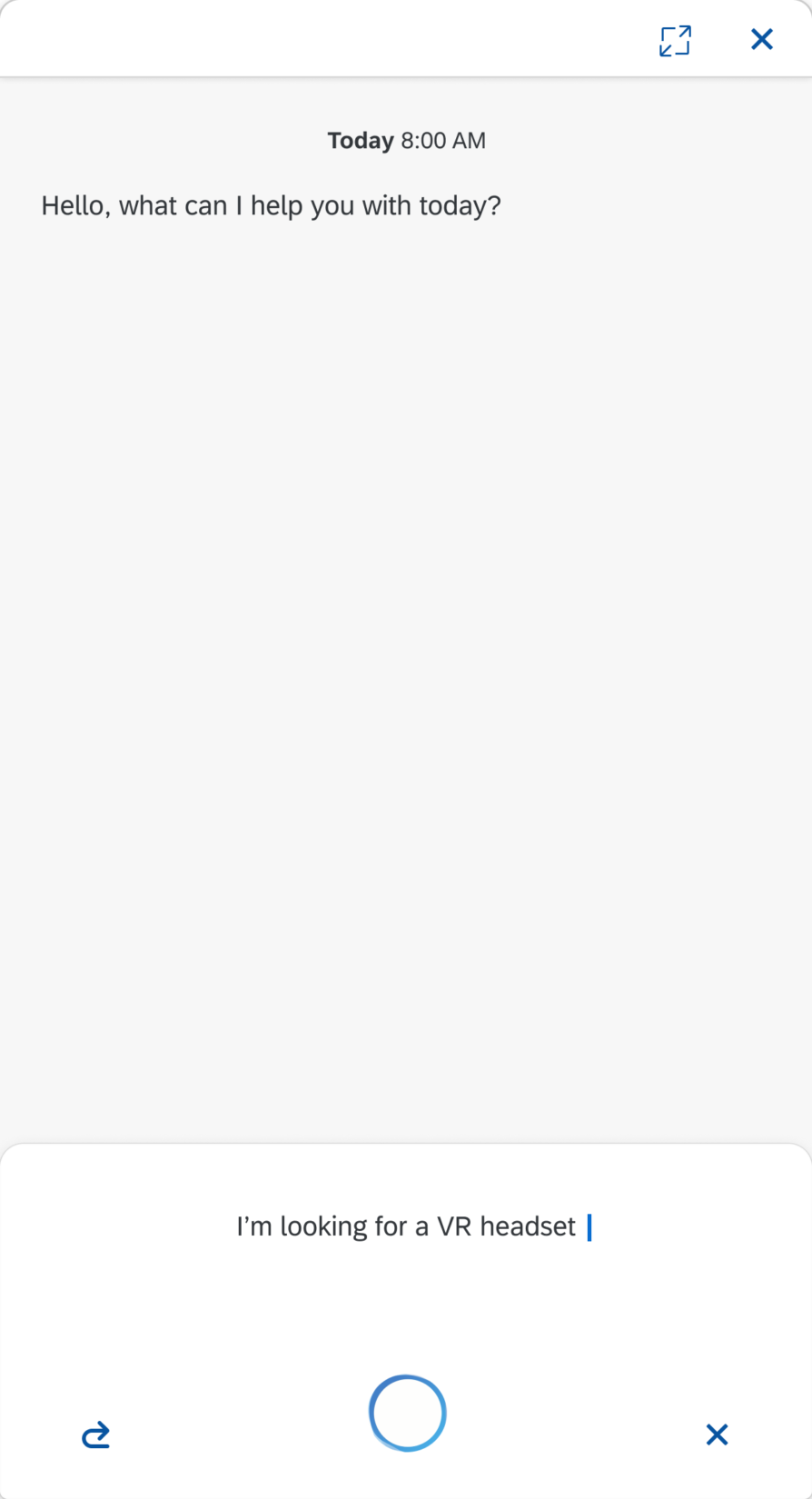

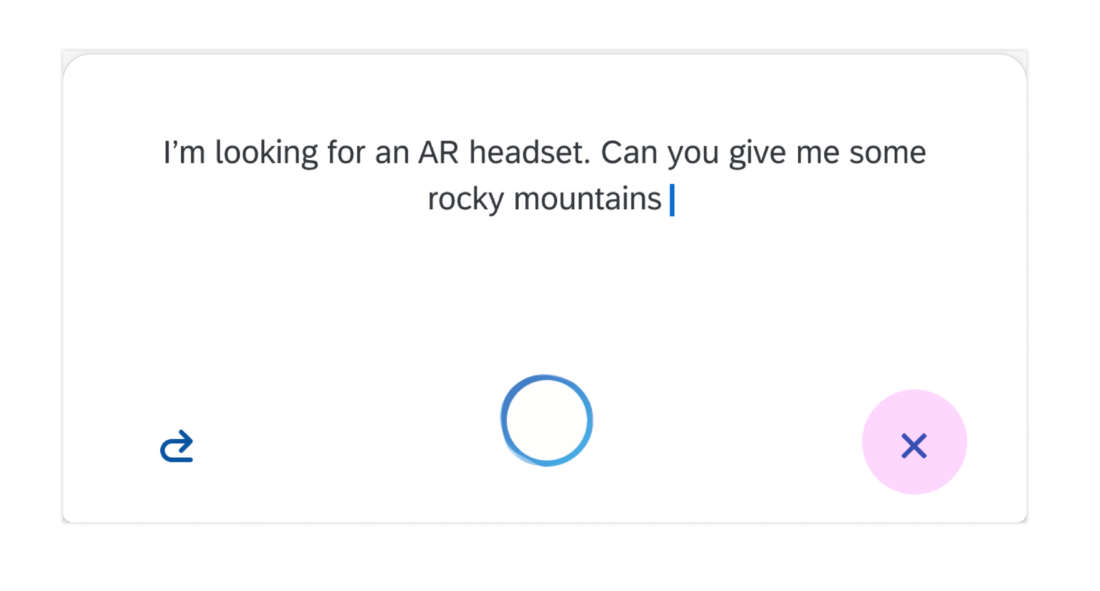

User Input

Users can ask a digital assistant for information in a variety of ways, including voice commands and conversational text. The digital assistant uses this input to match the correct intent and give the user the best answer in the most suitable way.

Voice Interface

Users simply tell the digital assistant what they need.

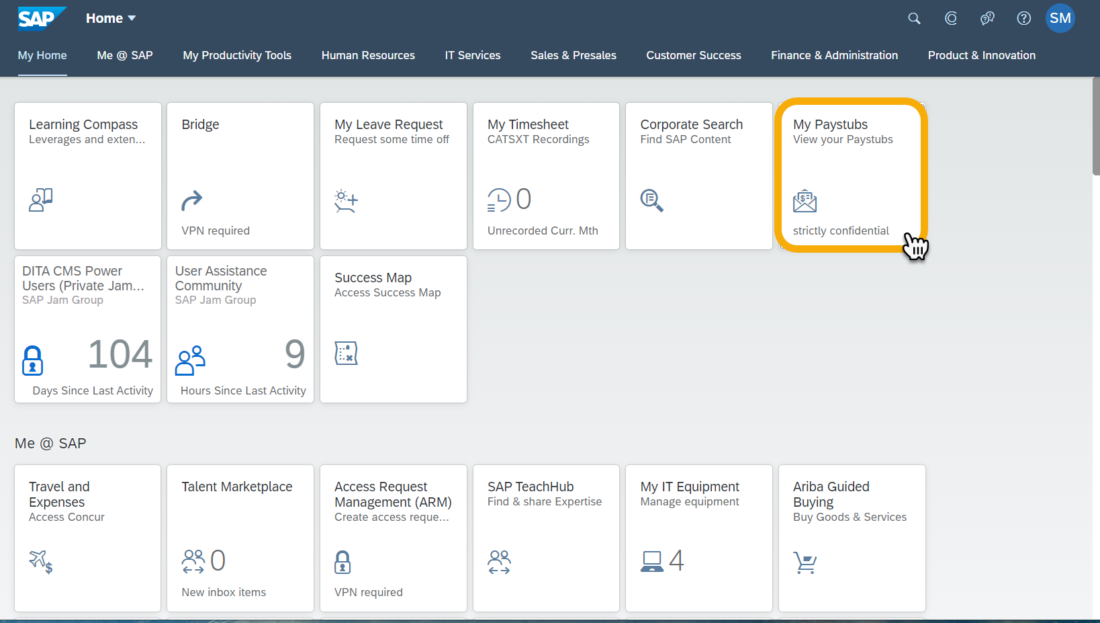

User speaks: “Create a leave request for September 26.”

Digital assistant speaks: “I created a leave request for you for September 26.”

Digital Assistant Output

The digital assistant can respond to a user request (its output) using voice, text, or graphics. The context and type of information determine the digital assistant’s output type.

Voice Interface

Use voice interface for:

- Small bites of information: meeting time, weather, vacation days

- Listing up to 3 items

User speaks: “Hey, what time is my sales meeting today?”

Digital assistant speaks: “Your sales meeting is at 3:00pm today.”

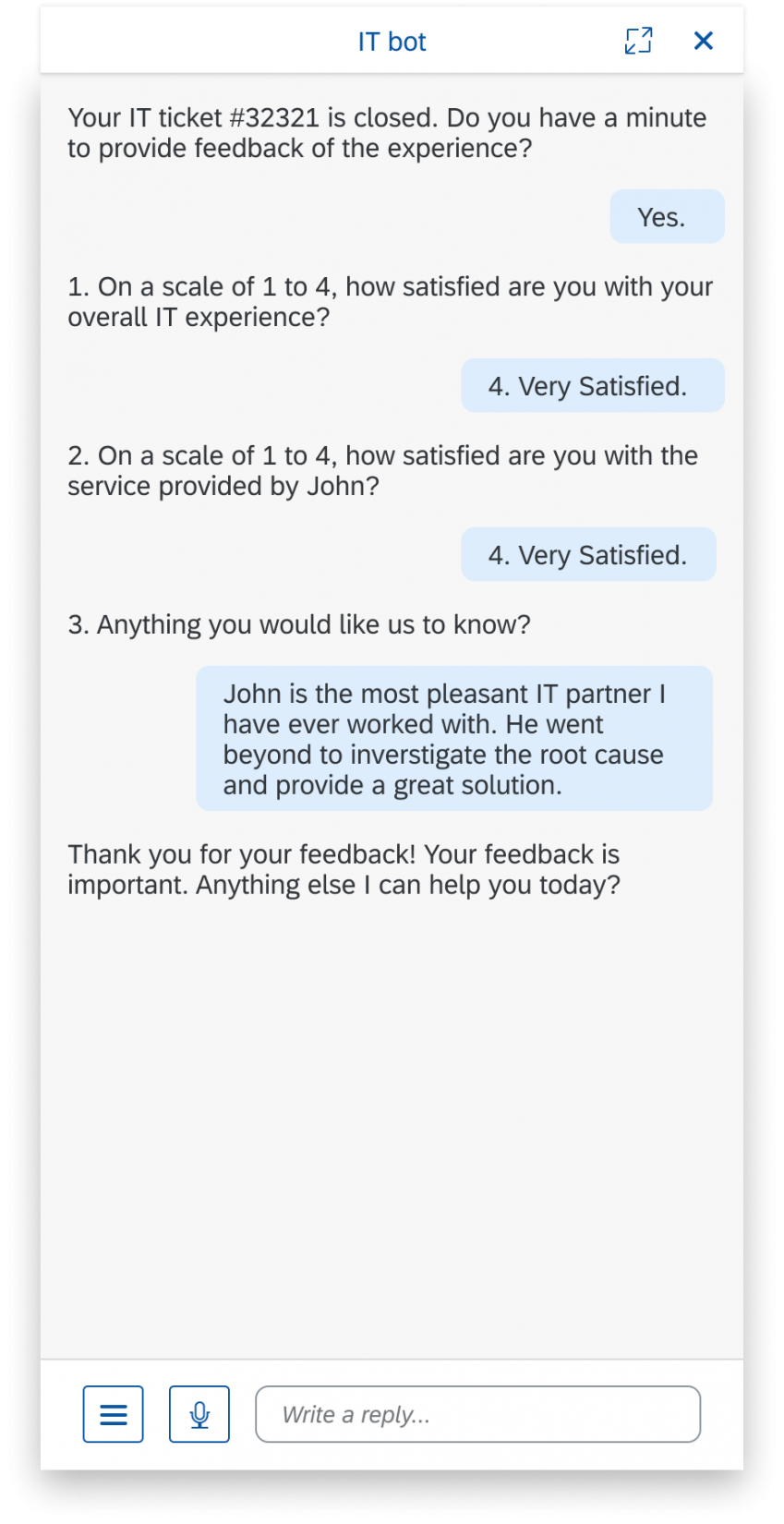
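The voice guideline above (small bites of information, lists of up to 3 items) can be expressed as a small rule. This is a minimal sketch under stated assumptions: `choose_output`, `MAX_SPOKEN_ITEMS`, and the fallback to voice when no screen is available are all hypothetical choices made for illustration, not behavior of a real product.

```python
MAX_SPOKEN_ITEMS = 3  # guideline above: list up to 3 items by voice


def choose_output(items: list[str], screen_available: bool) -> str:
    """Pick an output type for a list of answer items (illustrative rule)."""
    # Short answers are comfortable to listen to.
    if len(items) <= MAX_SPOKEN_ITEMS:
        return "voice"
    # Longer lists are easier to scan visually; fall back to voice
    # only when there is no screen (an assumption of this sketch).
    return "graphical" if screen_available else "voice"


meeting = ["Your sales meeting is at 3:00pm today."]
long_list = ["item 1", "item 2", "item 3", "item 4", "item 5"]

print(choose_output(meeting, screen_available=True))     # voice
print(choose_output(long_list, screen_available=True))   # graphical
print(choose_output(long_list, screen_available=False))  # voice
```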

Discoverability

A discoverable response helps users explore and learn new things. The digital assistant can tell users how to do something or show them graphically how to do it. Some contexts require a more visual approach.

User Focus

User focus indicates whether or not the user interface requires the user’s full attention.

Voice Interface

Doesn’t require the user’s full attention (maybe because they are baking), so the user can multitask.

User speaks: “How many ounces in a cup?”

Digital assistant speaks: “There are 8 ounces in one cup.”

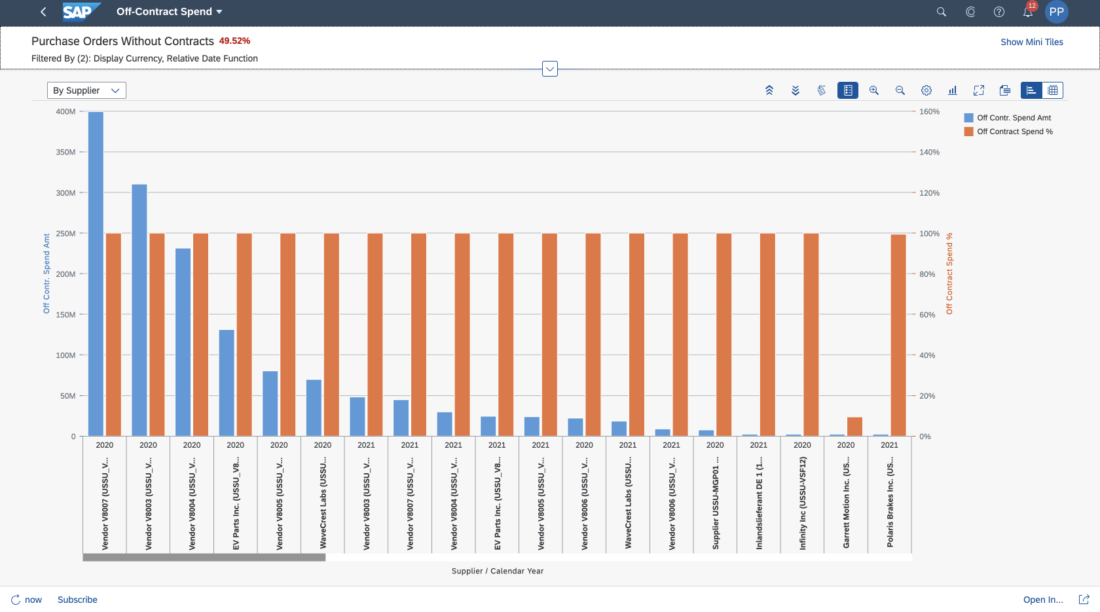

Graphical Interface

Requires most of the user’s attention (like a serious task).

User speaks: “How do I remove an appendix?” (don’t worry, this is a med student!)

Digital assistant speaks: “Let me show you a video of an appendectomy.”

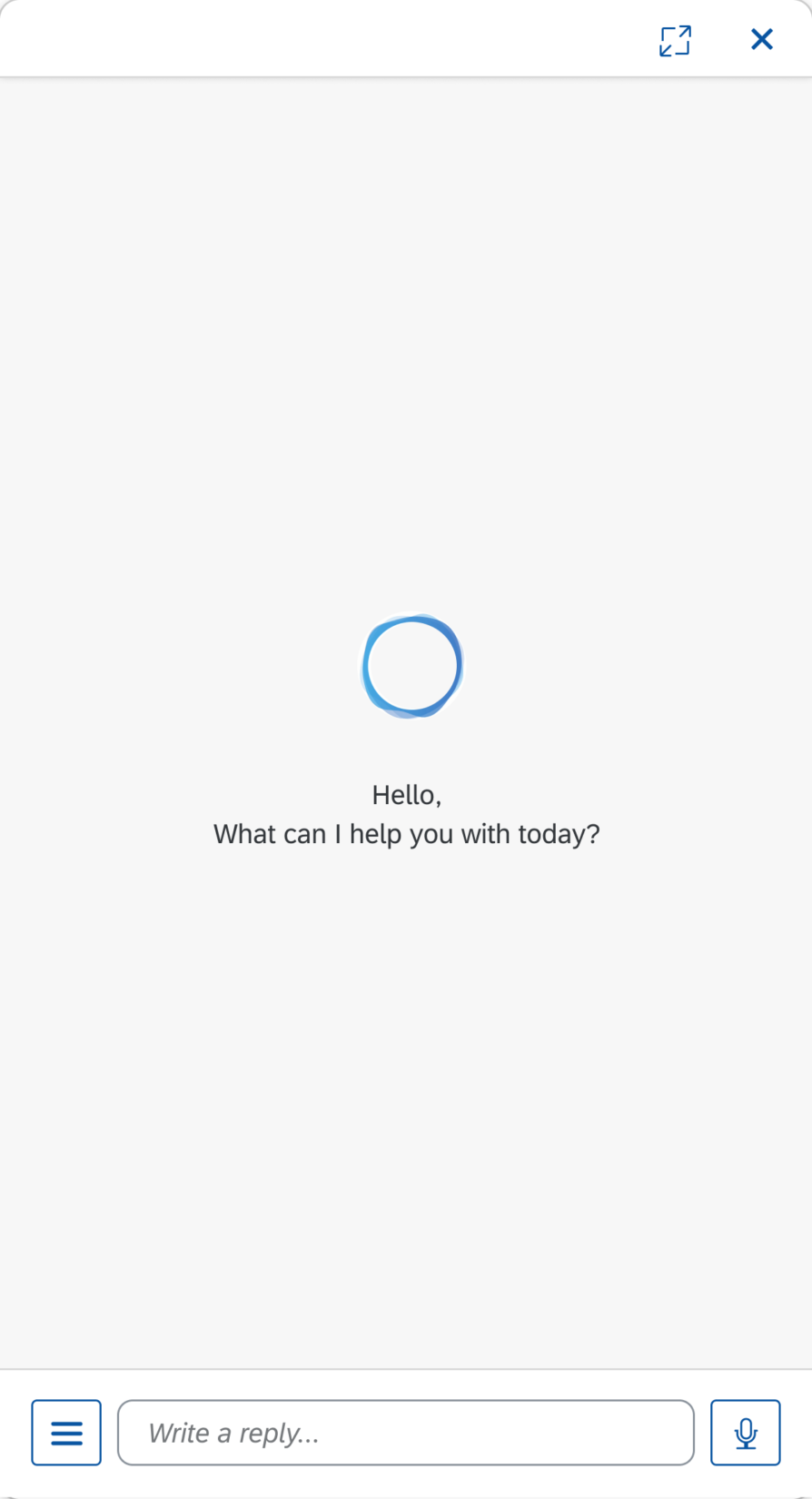

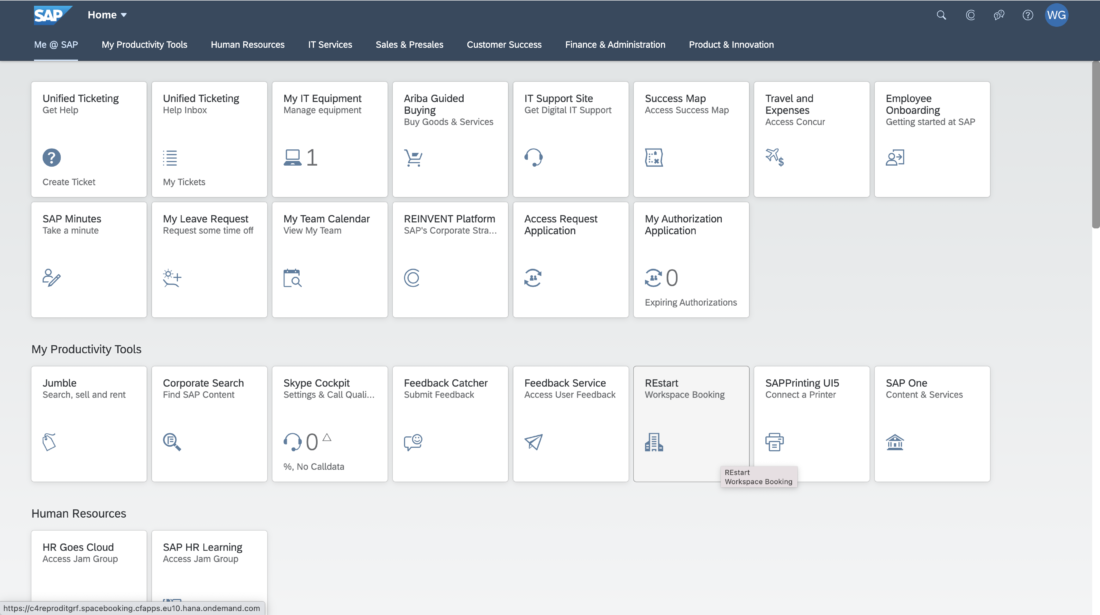

Get Started

When you are ready to get started creating your cool new digital assistant, keep a few things in mind.

- Start with one digital assistant interaction type. Then introduce other interaction methods to supplement it or to offset the digital assistant’s limitations. This ensures that you are actually designing a conversational product and not just a graphical interface with voice added.

- Conversational products can provide a multi-sensory experience, but one is not always necessary. Review the principles of calm technology to design effective yet subtle interactions.

- Explore new use cases and opportunities to interact with users. User research is key to successful conversational interactions.

Get moving on your new digital assistant. Ready, set, go!