User Feedback

In the context of intelligent systems, “user feedback” refers to the process of collecting and interpreting users’ responses to AI output, and integrating them into the system to improve its intelligence and results.

Feedback is always triggered by the user. The feedback is based on the user’s judgement and experiences while reviewing and responding to the AI output.

Collecting data for further manual analysis for other purposes (such as marketing or usability research) is out of scope.

When to Use

Collect user feedback on AI output if you want to enable dynamic learning behavior to enhance the intelligence of your product.

Prerequisites

- You have implemented a retraining infrastructure.

- There is sufficient data to run instant or periodic model training.

User Consent

Some user feedback types can be legally sensitive and require user consent. When applying user feedback elements to your product, validate your use case with users, customers, and your legal team. Local data protection regulations may also put constraints on how user feedback is processed.

Feedback Collection

There are four main aspects to consider when deciding how to collect feedback:

- Feedback collection method

- Feedback collection context

- Type of user input

- Feedback interaction method

The sections below look at each aspect in more detail.

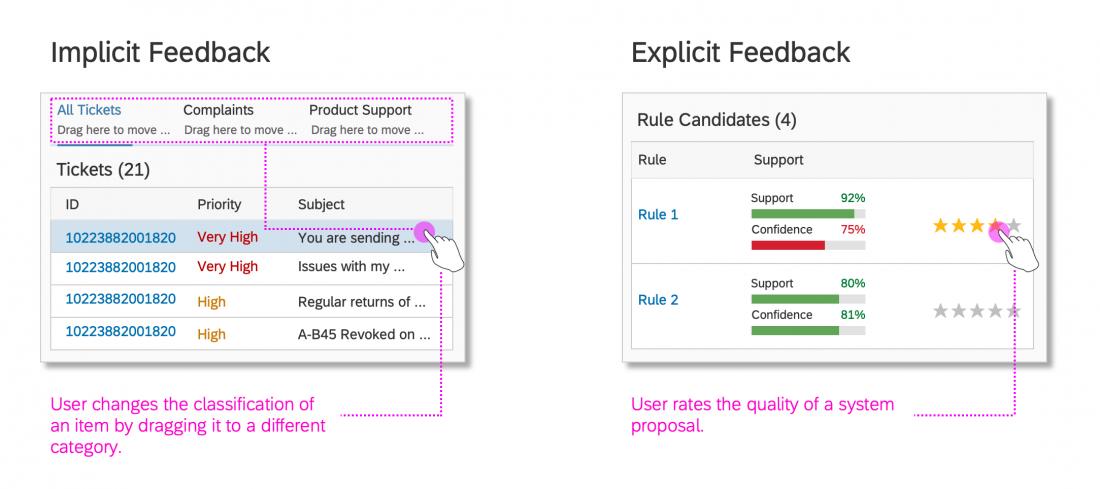

Implicit Feedback

Implicit feedback is collected by tracking the user’s activities, including navigation paths, search queries, inputs, and other interactions. This methodology gathers feedback while the user is interacting with the system, and is non-disruptive.

Implicit methods serve most collection needs.

Explicit Feedback

Explicit feedback is given when the users interrupt their regular course of action to give feedback, or when the system interrupts the user to ask for feedback. Use explicit feedback methods judiciously, and only if implicit methods aren’t suitable.

Explicit methods serve special collection needs.

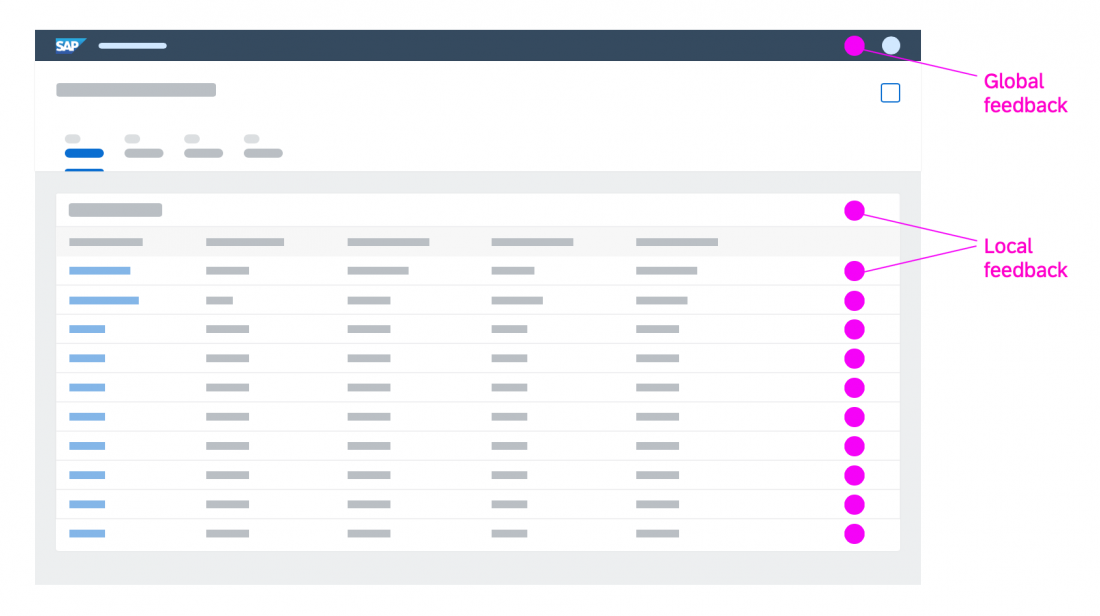

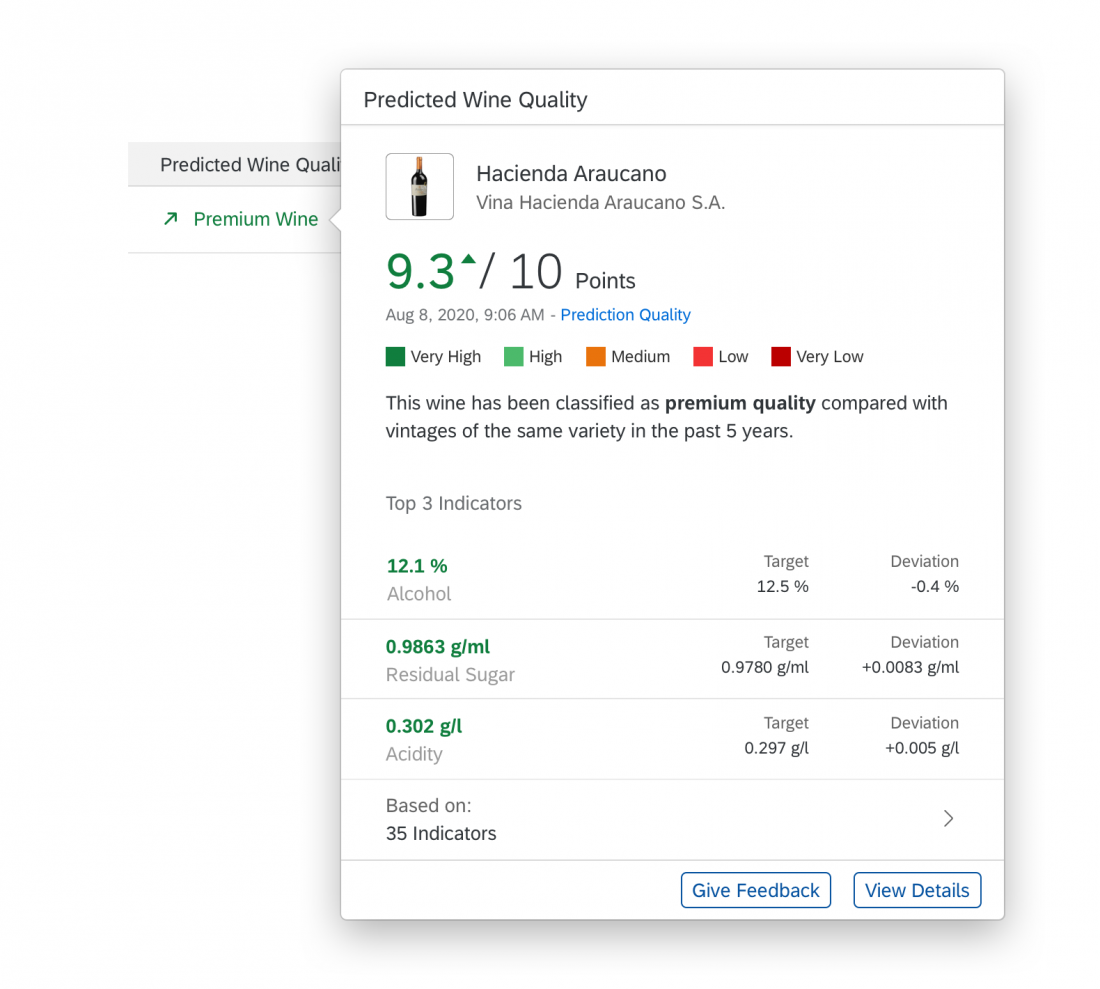

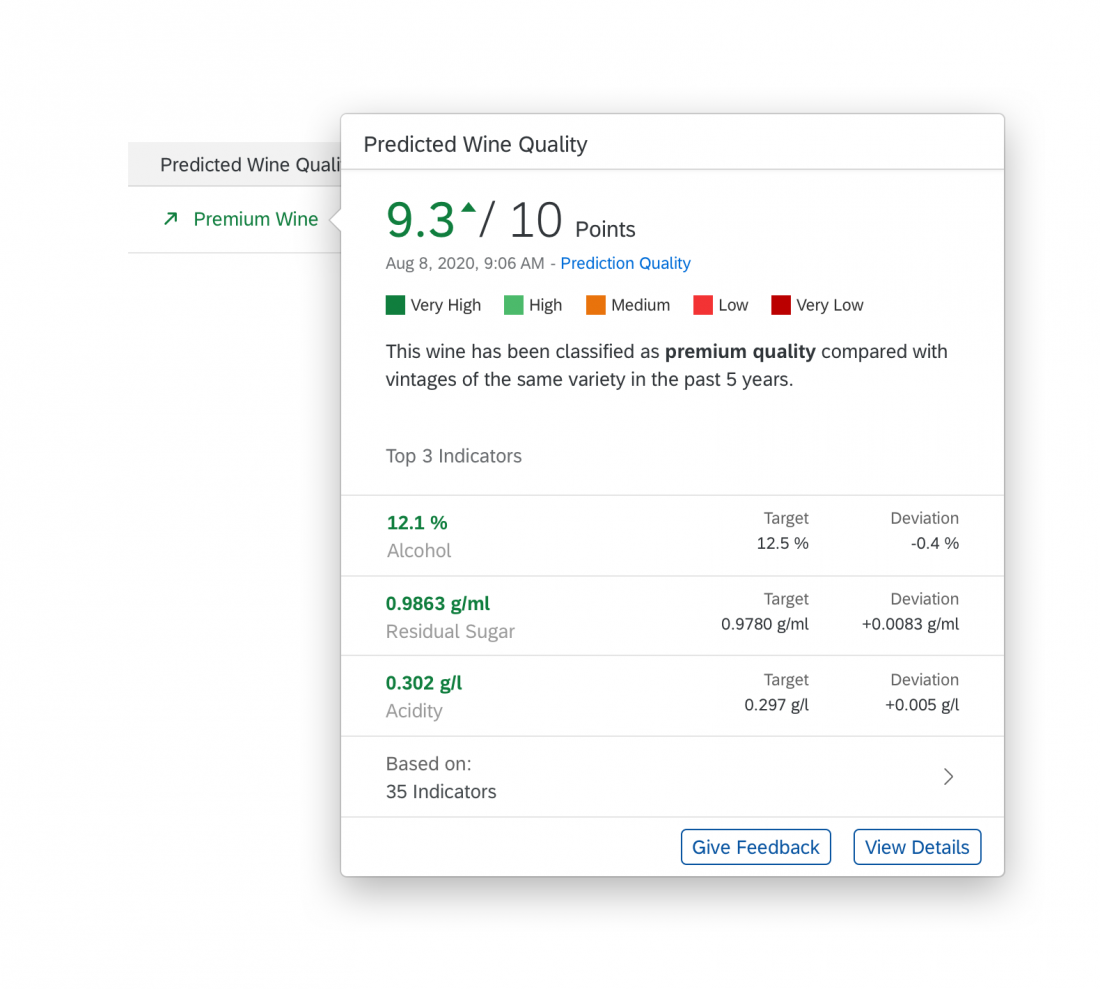

Characteristics of Local Feedback

- The feedback trigger is close to the UI element and contextual information.

- Users can give direct feedback on a specific component or value.

- Local feedback is clearly focused, which makes it easier to interpret the feedback afterwards.

Characteristics of Global Feedback

- The feedback applies to an entire page, or even an entire app.

- The focus is not limited to a specific component or value.

- The feedback is collected with the intent to improve the overall ML model, not a single inference.
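The collection method (implicit or explicit) and the collection context (local or global) can be carried as fields on each feedback record. The following is a minimal sketch under that assumption; the names `FeedbackEvent`, `Method`, `Context`, `target_id`, and `payload` are hypothetical and not part of any SAP API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Method(Enum):
    IMPLICIT = "implicit"   # tracked while the user works, non-disruptive
    EXPLICIT = "explicit"   # the user or the system interrupts the workflow


class Context(Enum):
    LOCAL = "local"    # tied to a specific component or value
    GLOBAL = "global"  # applies to an entire page or app


@dataclass(frozen=True)
class FeedbackEvent:
    method: Method
    context: Context
    target_id: Optional[str]  # hypothetical ID of the UI element or value
    payload: str              # the feedback itself, in whatever form it takes

    def __post_init__(self):
        # Local feedback is only easy to interpret if we know what it refers to.
        if self.context is Context.LOCAL and not self.target_id:
            raise ValueError("local feedback needs a target_id")


local = FeedbackEvent(Method.EXPLICIT, Context.LOCAL, "price_field", "corrected")
page = FeedbackEvent(Method.EXPLICIT, Context.GLOBAL, None, "results feel outdated")
```

Requiring a target for local feedback reflects the point above that local feedback is clearly focused, while global feedback may leave the target empty.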

Types of User Input

To choose the appropriate input control, think about different ways of asking for the feedback and how well you will be able to utilize the insights. The method and format determine the quality of the feedback.

We differentiate between structured user input and unstructured user input.

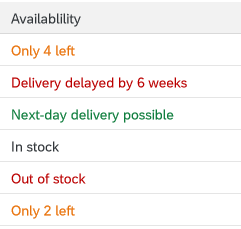

Structured Input

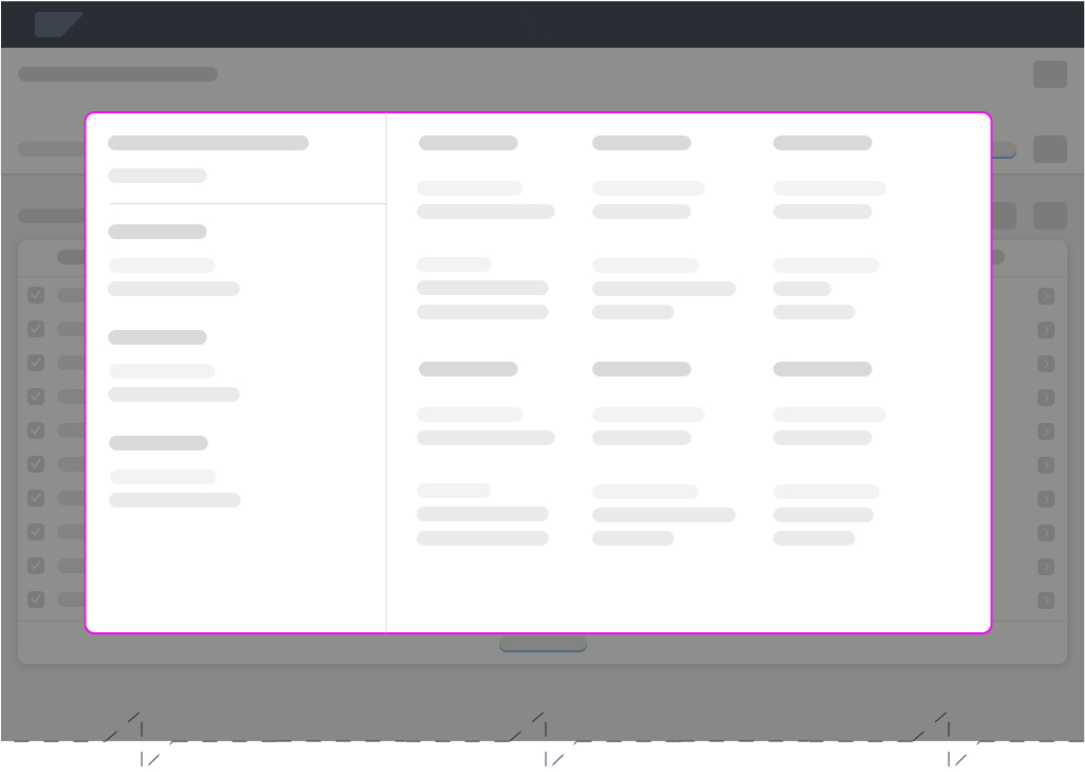

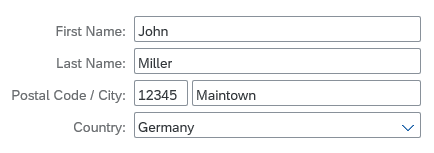

You can collect very specific feedback by asking a closed question with a given set of options. The user can answer only in the predefined format and can’t give feedback freely.

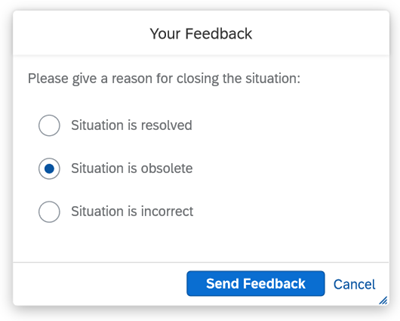

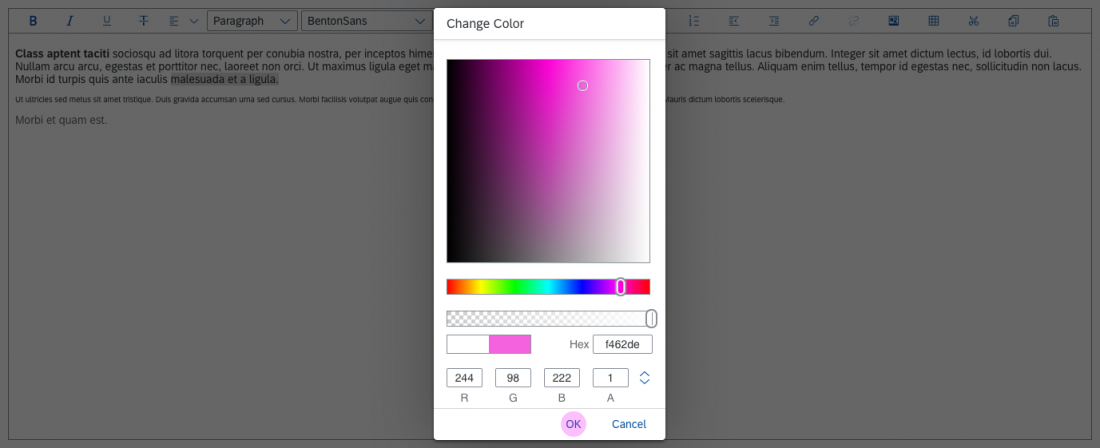

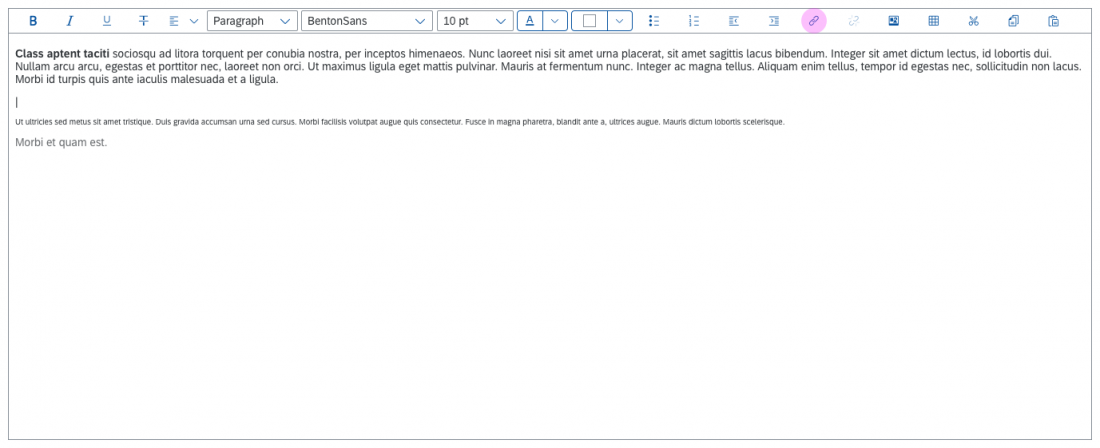

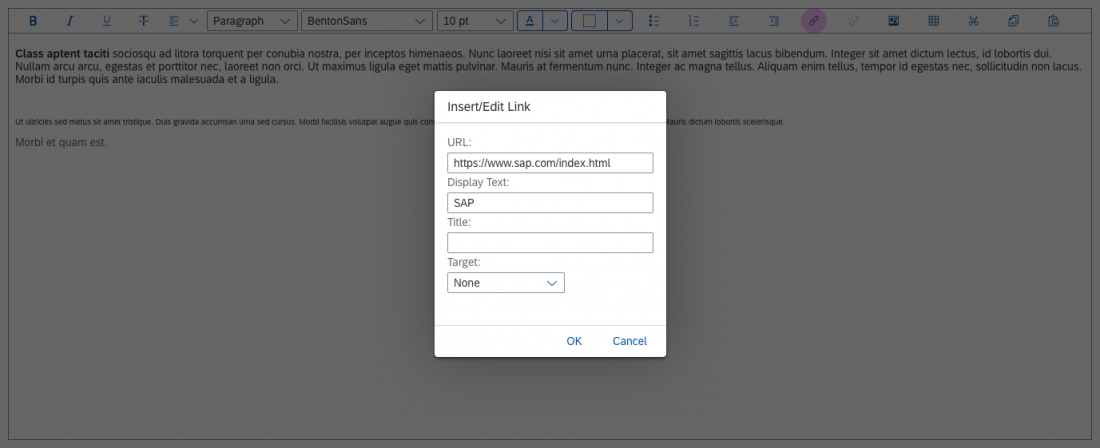

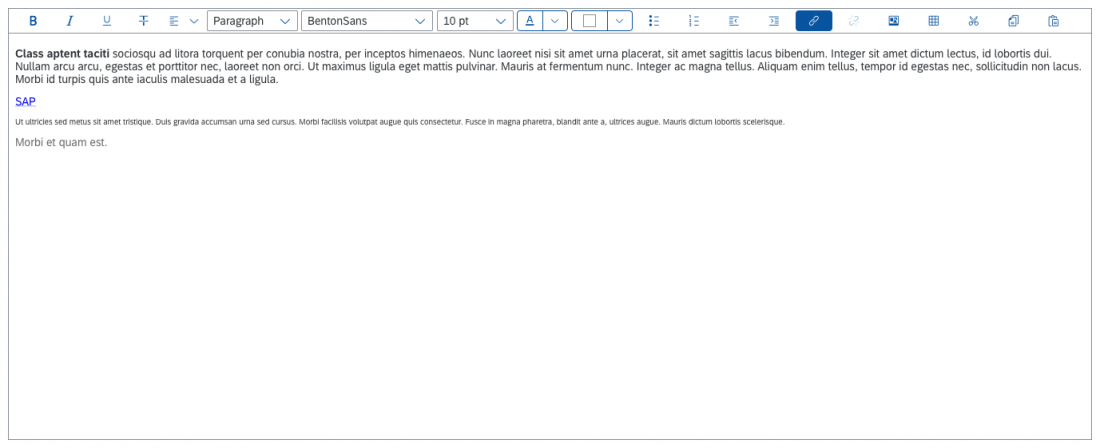

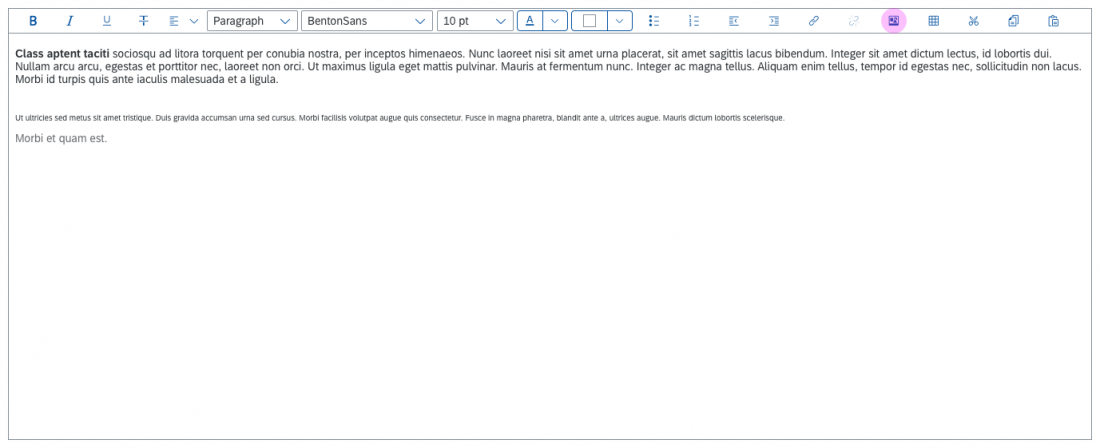

Example of structured input

Characteristics of structured feedback:

- Questions have a clear focus.

- Questions can be answered with “yes” or “no”.

- The feedback collected usually relates to the quality of recommendations.

Unstructured Input

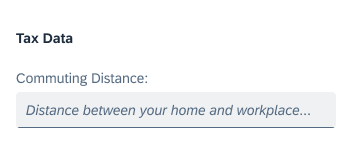

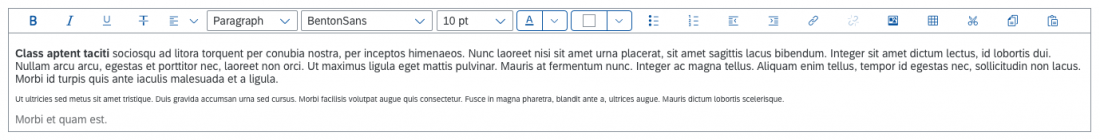

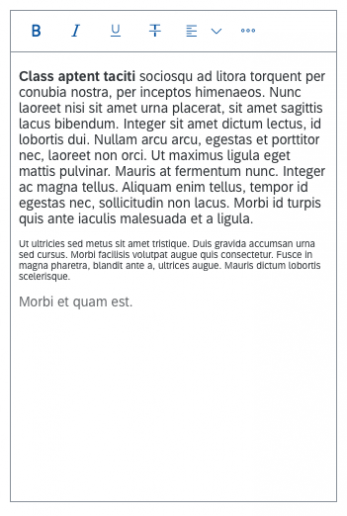

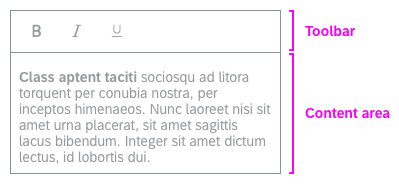

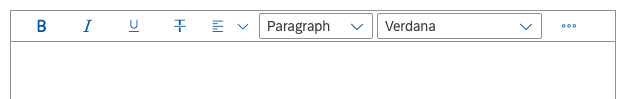

You can also collect unrestricted feedback by asking open questions. Here, the user can provide complex answers and include feedback on aspects you hadn’t thought of. This allows you to collect insights on external real-world factors that aren’t part of your system.

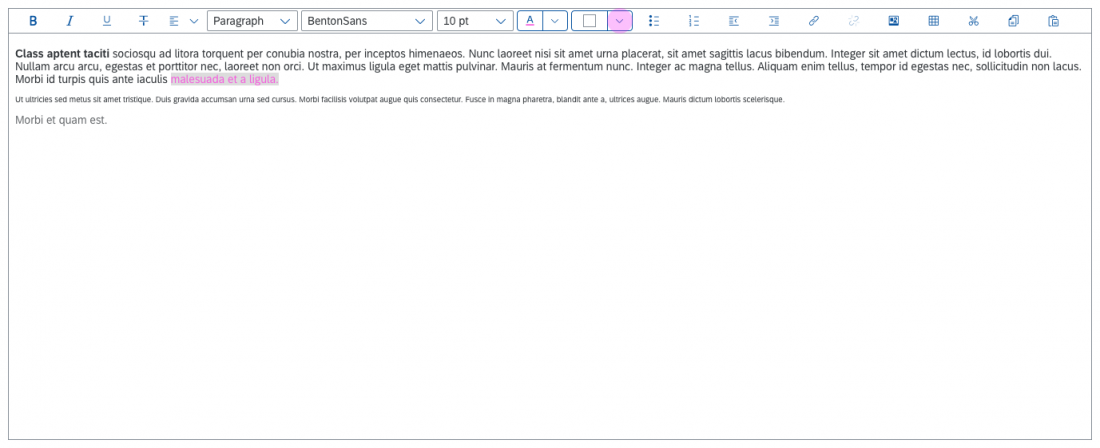

Example of unstructured input

Characteristics of unstructured feedback:

- Questions are formulated openly, inviting unlimited answers.

- Feedback is collected to gain information on the quality and performance of intelligence services.

- Feedback is collected to understand user behavior on the UI while performing a task.

- Feedback processing takes time and needs detailed investigation to be interpreted accurately.
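The difference between the two input types can be illustrated in code. This is a rough sketch rather than a prescribed implementation: structured answers are validated against a predefined option set, while unstructured answers are stored as free text and flagged for later investigation. `StructuredQuestion`, `record_unstructured`, and the `needs_review` flag are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class StructuredQuestion:
    text: str
    options: Tuple[str, ...]  # the only answers the user may give

    def record(self, answer: str) -> dict:
        # A closed question accepts only the predefined format.
        if answer not in self.options:
            raise ValueError(f"answer must be one of {self.options}")
        return {"question": self.text, "answer": answer, "needs_review": False}


def record_unstructured(question: str, answer: str) -> dict:
    # Open answers are kept as-is and marked for manual interpretation.
    return {"question": question, "answer": answer.strip(), "needs_review": True}


closed = StructuredQuestion("Was this recommendation helpful?", ("yes", "no"))
structured = closed.record("yes")
free_text = record_unstructured("What was missing?", "  Supplier lead times.  ")
```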

Feedback Interaction Methods

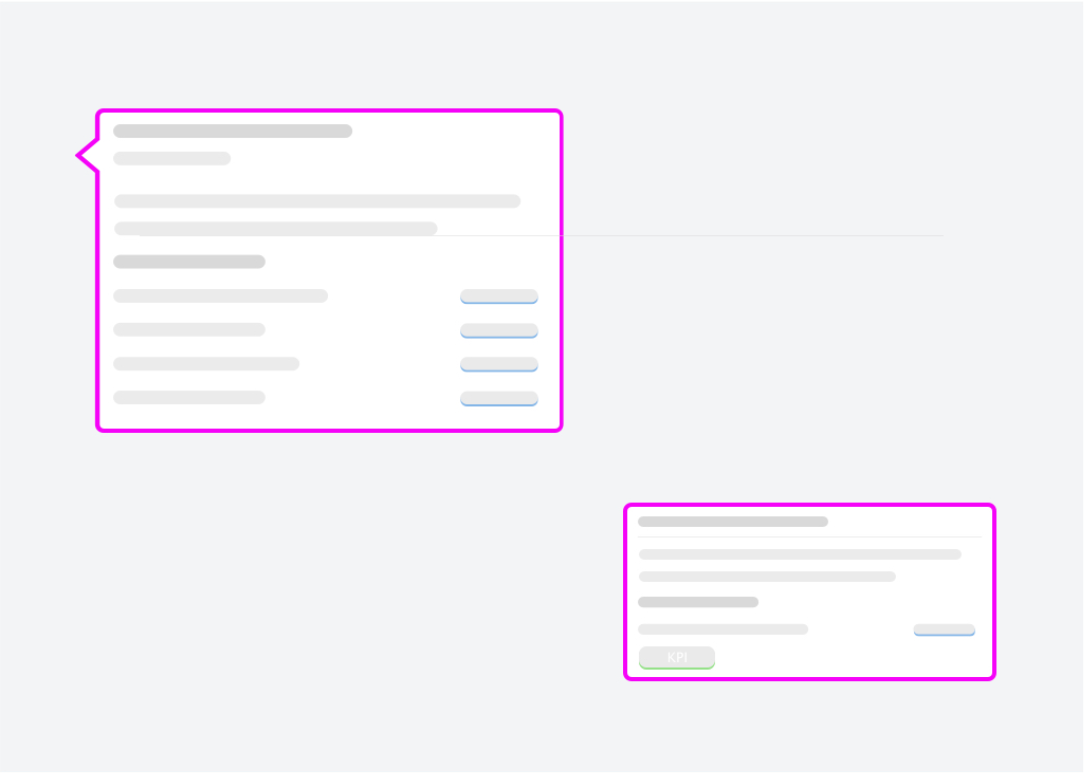

The interaction method for collecting explicit feedback depends on the use case and the user’s motivation. We differentiate between active users and passive users:

Interaction Methods for Active Users

For active users, offer an optional feedback trigger on the UI so that users can give feedback whenever they want.

Interaction Methods for Passive Users

For passive users, prompt the user to provide feedback using a feedback form or notification. With this approach, the system interrupts the user’s workflow to ask for feedback.

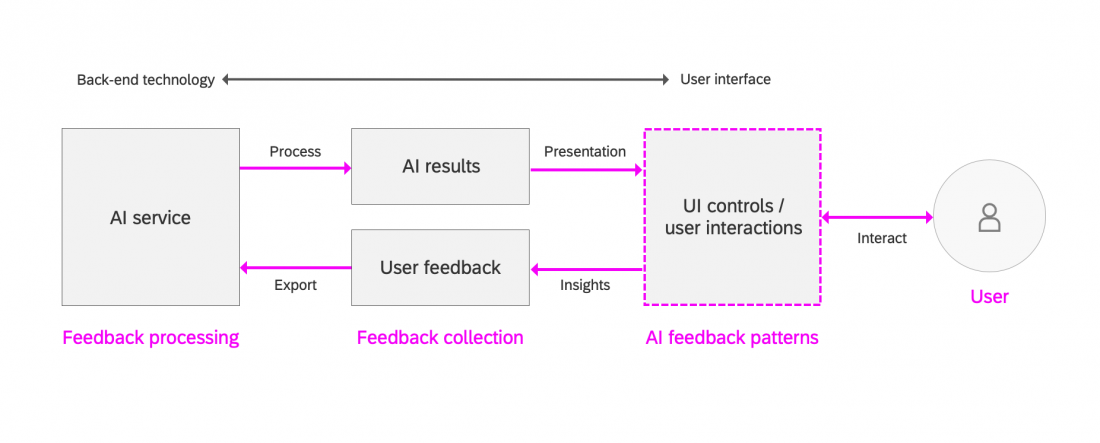

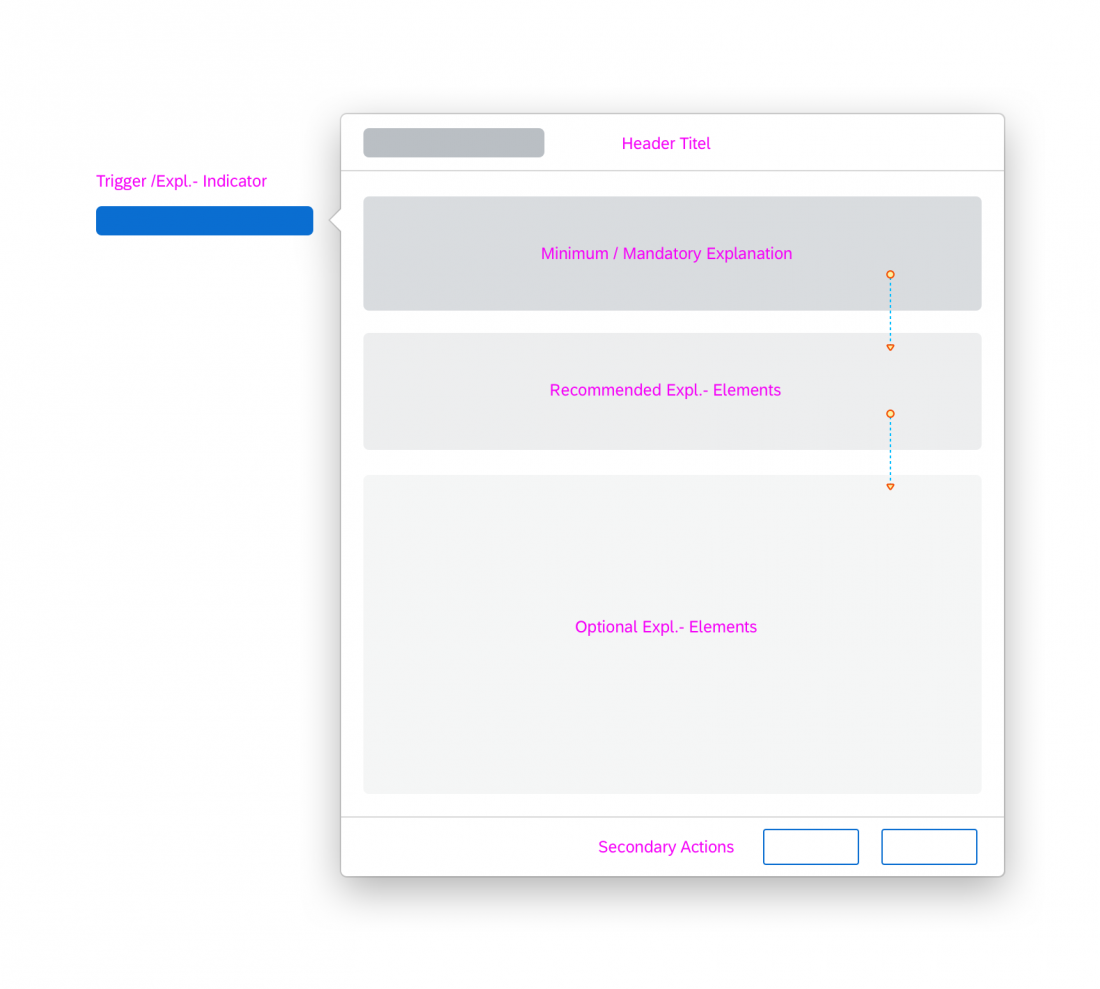

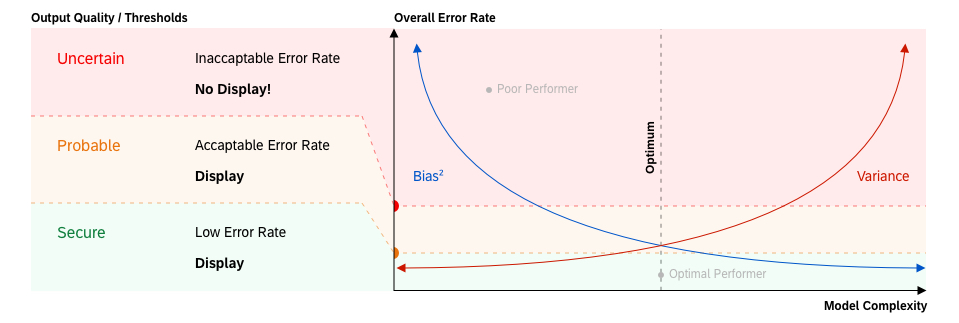

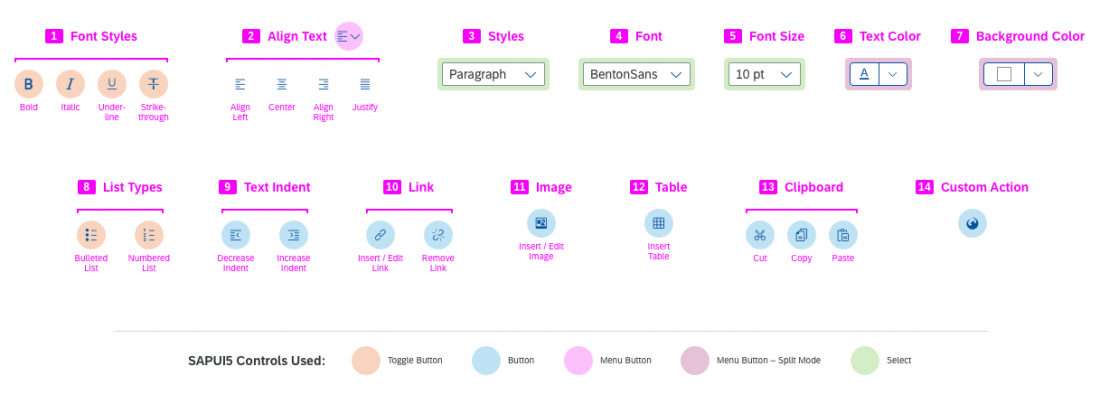

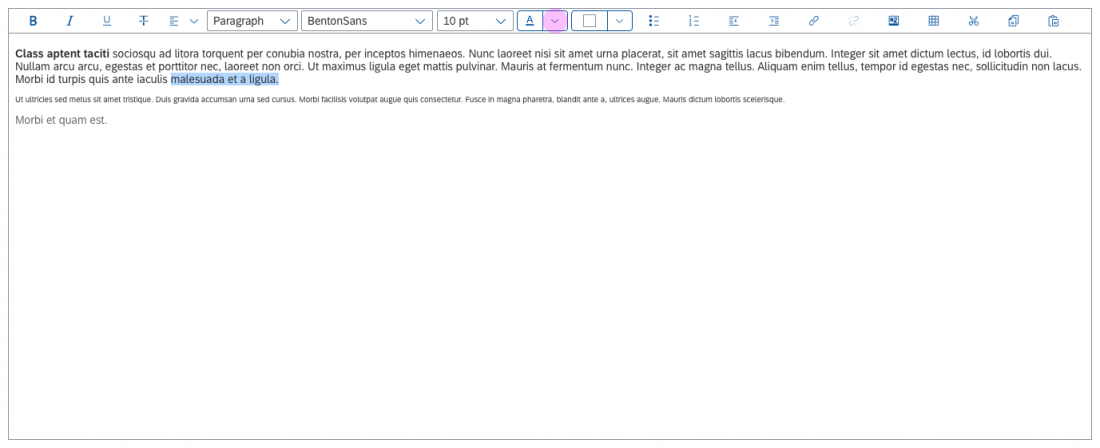

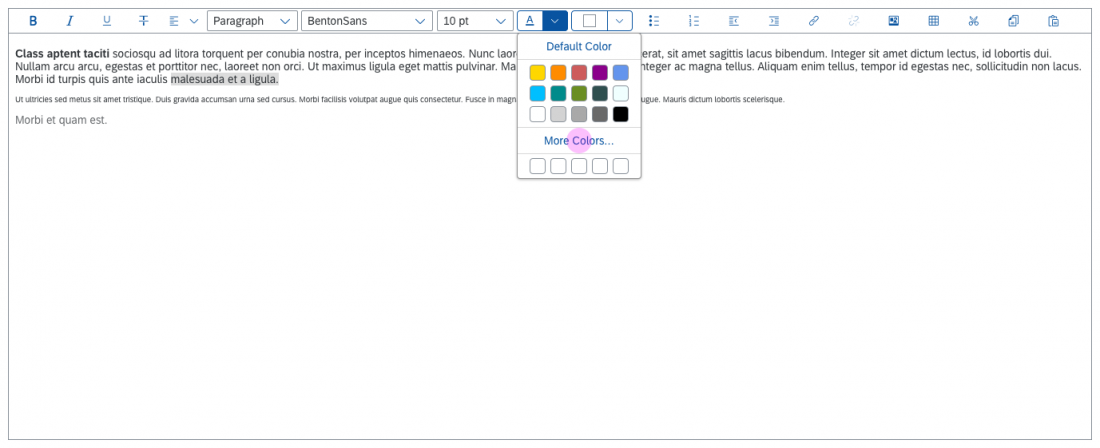

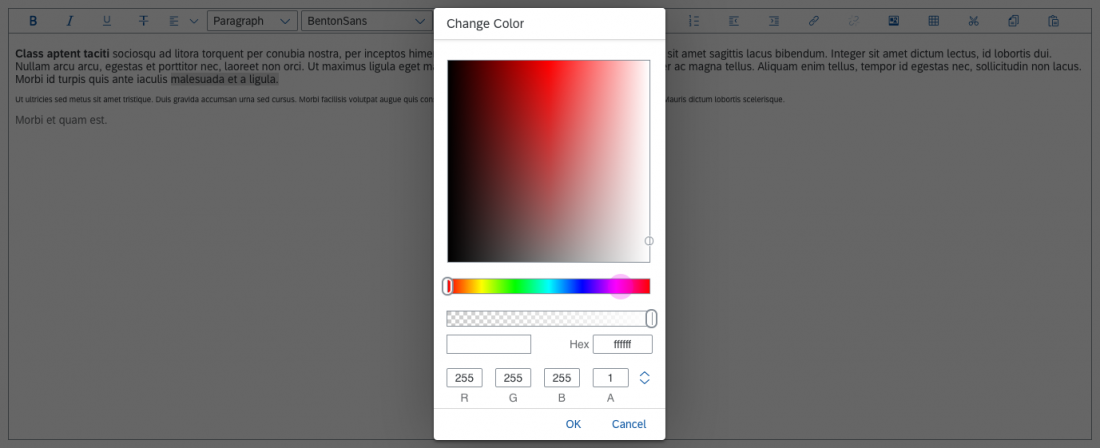

AI Feedback Patterns

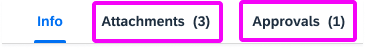

An AI feedback pattern is a set of recommended UI controls that can be used to collect user feedback implicitly or explicitly to improve the AI output. The purpose of an AI feedback pattern is to help the designer and the product team understand the use case requirements and choose suitable UI interactions for the task.

The feedback patterns have been carefully designed to support different use cases. The recommended UI controls need to be implemented at app level to collect the user feedback and deliver it back to the AI service.

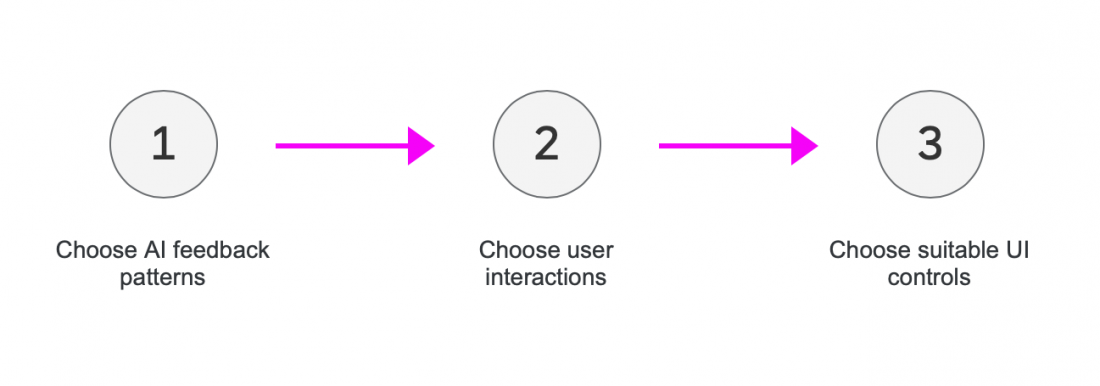

Steps for Implementing AI Feedback Patterns

- Choose the AI feedback pattern for your AI implementation.

- Narrow down the required user interactions.

- Incorporate the feedback pattern using the appropriate UI controls.

Pattern Types

So far, we have identified three AI feedback pattern types:

- Confirm or correct: The user confirms or corrects the solution provided by the AI.

- Track user actions: The system tracks user actions performed on the AI results.

- Measure observable behavior: The system measures the observable behavior of a user experiencing AI results.

Confirm or Correct

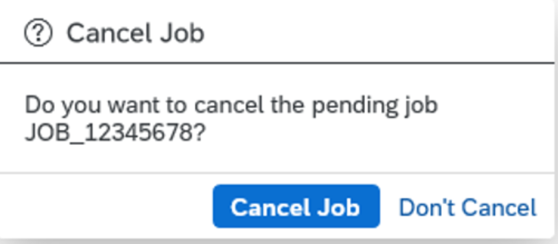

Users can actively give structured feedback on the AI output to help the system learn and improve its intelligence over time. Examples of this type of feedback include confirming and correcting the AI results. We distinguish between binary and non-binary feedback collection methods.

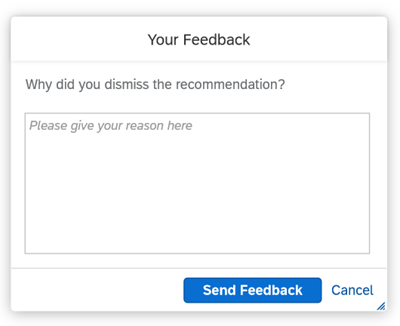

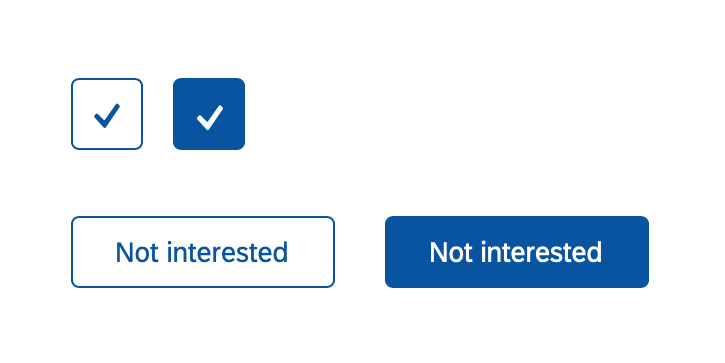

Binary feedback collection

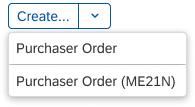

Examples of simple binary feedback collection methods are action buttons, toggle buttons, or toggle controls like checkboxes (selected, deselected).

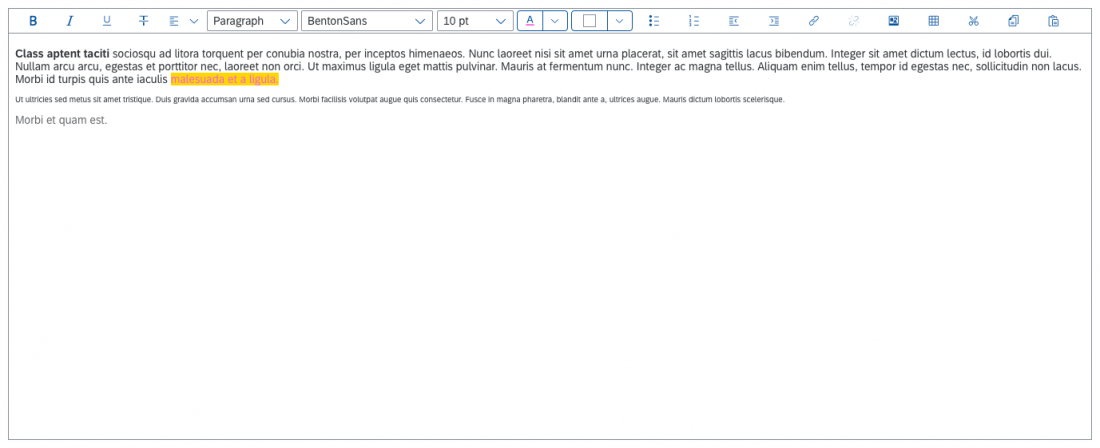

Toggle buttons for binary feedback collection

Primary action buttons for binary feedback collection

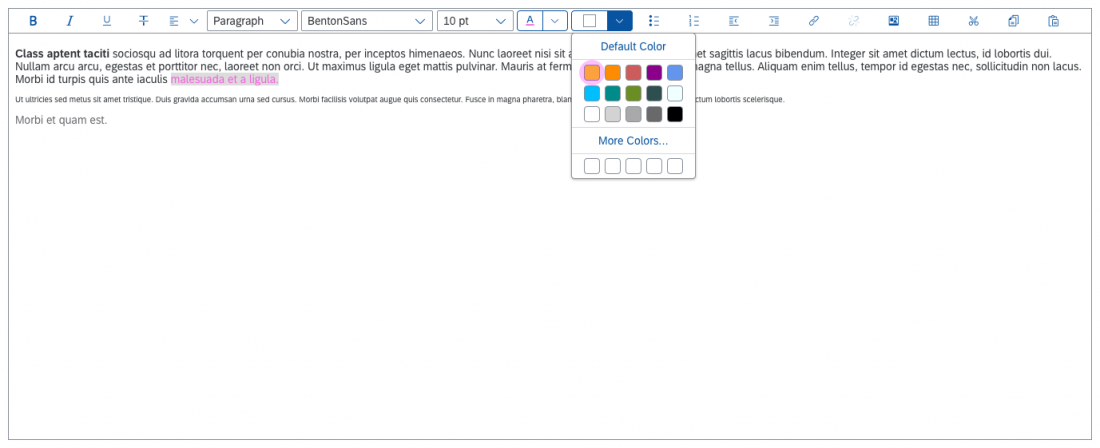

Non-binary feedback collection

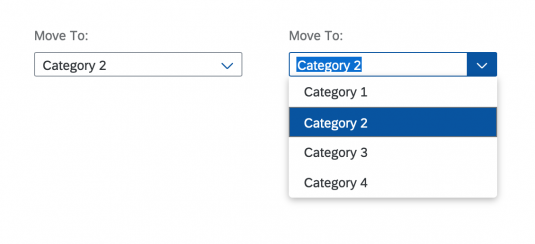

In non-binary feedback collection methods, multiple selection categories are offered. Possible controls include combo boxes or a combination of list selection and radio buttons.
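One possible way to turn confirm-or-correct feedback into retraining data is sketched below. It assumes a simple classification setting; the function name `to_training_label` and the `(label, is_positive)` return shape are illustrative assumptions, not something this guideline defines.

```python
from typing import Optional, Tuple


def to_training_label(
    predicted: str, confirmed: bool, correction: Optional[str] = None
) -> Tuple[str, bool]:
    """Return (label, is_positive) for one piece of confirm-or-correct feedback."""
    if confirmed:
        # Binary confirmation: the AI output is a known-good label.
        return predicted, True
    if correction is not None:
        # Non-binary correction: the user picked the right category.
        return correction, True
    # Binary rejection without a correction: we only know the output was wrong.
    return predicted, False


confirmed_example = to_training_label("invoice", confirmed=True)
corrected_example = to_training_label("invoice", confirmed=False, correction="credit_memo")
rejected_example = to_training_label("invoice", confirmed=False)
```

A binary control yields only the first or third case; a non-binary control such as a combo box is what makes the second case available.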

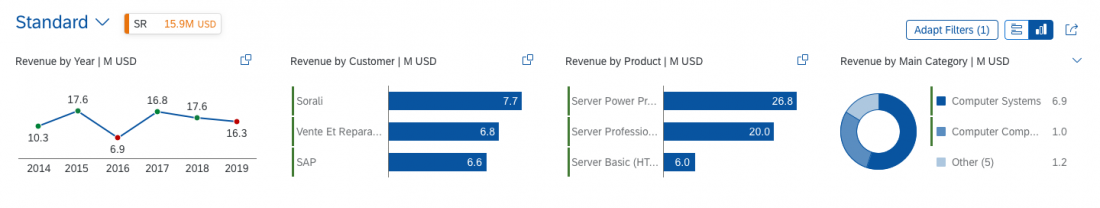

Track User Actions

Intelligent systems can also learn from the UI actions users perform on the AI results. These actions include:

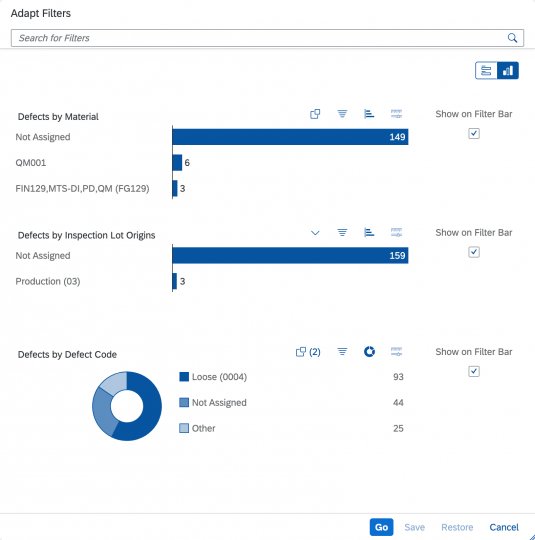

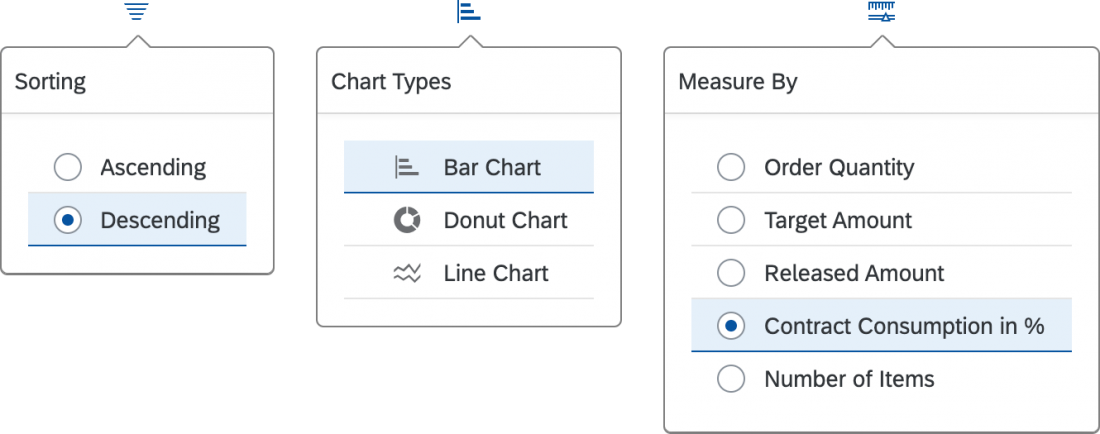

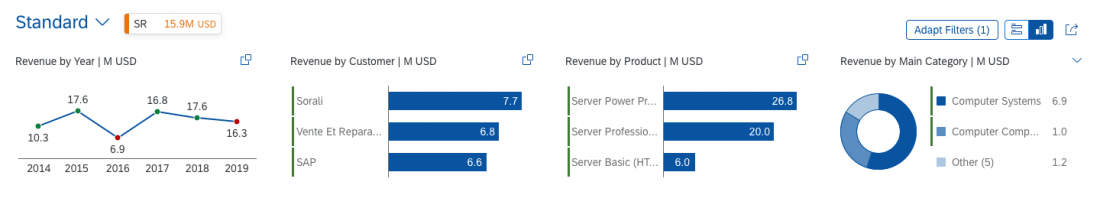

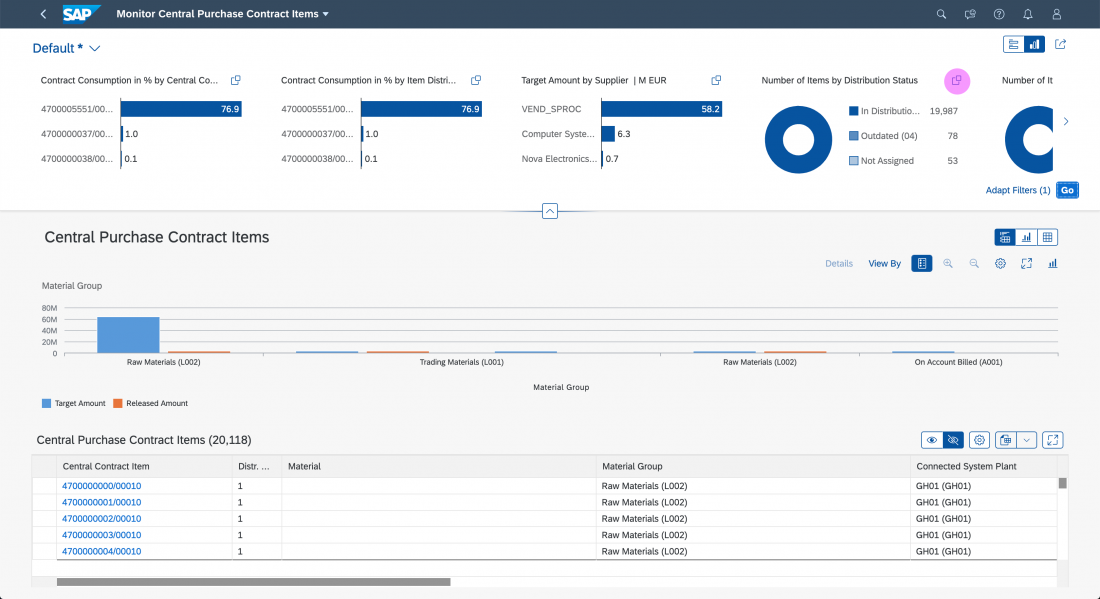

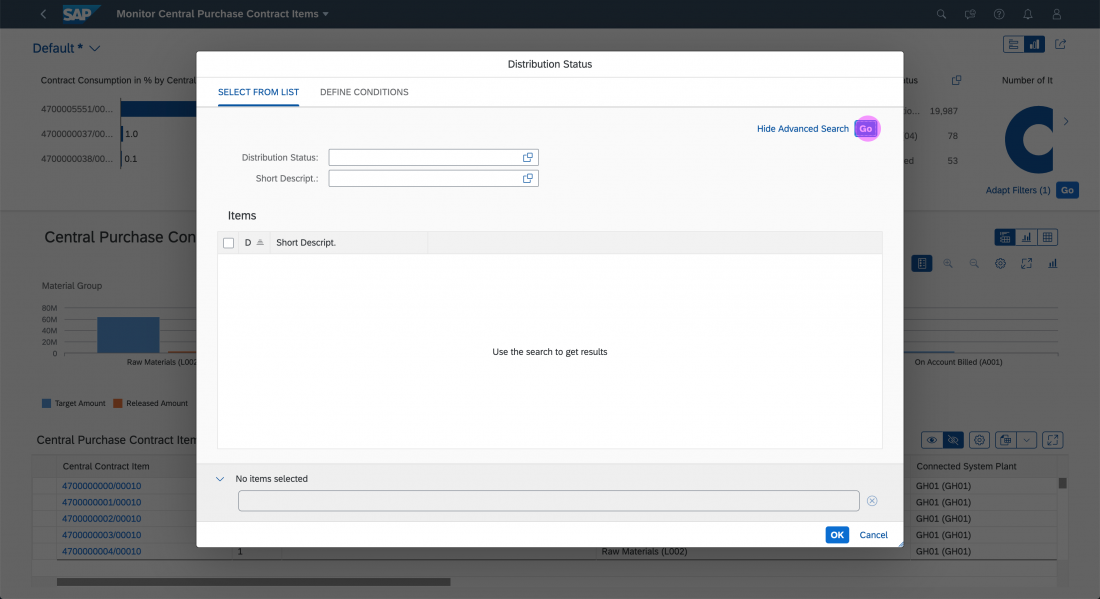

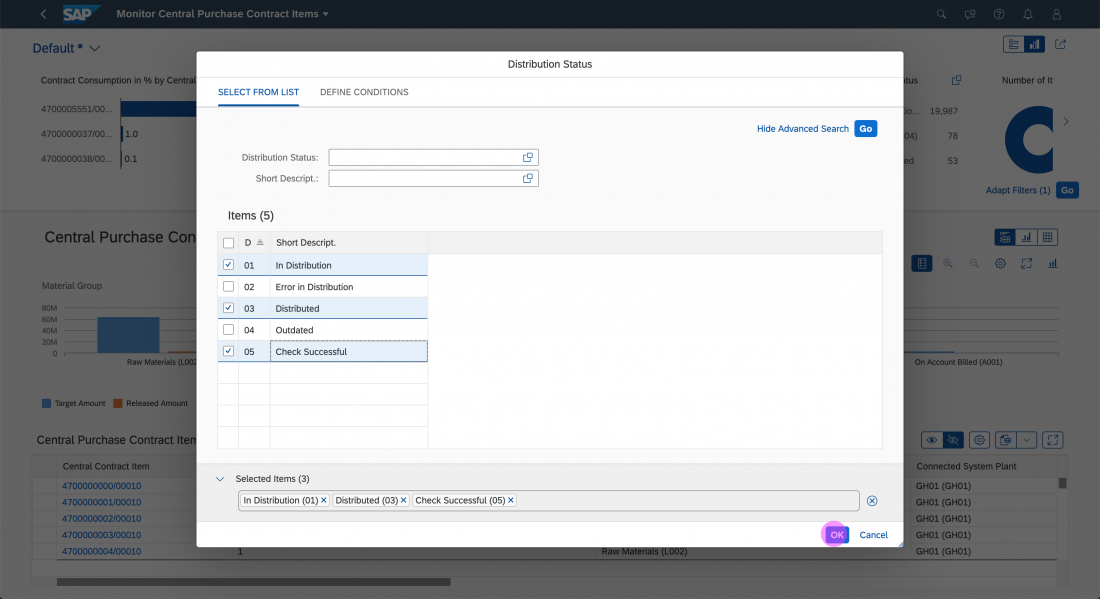

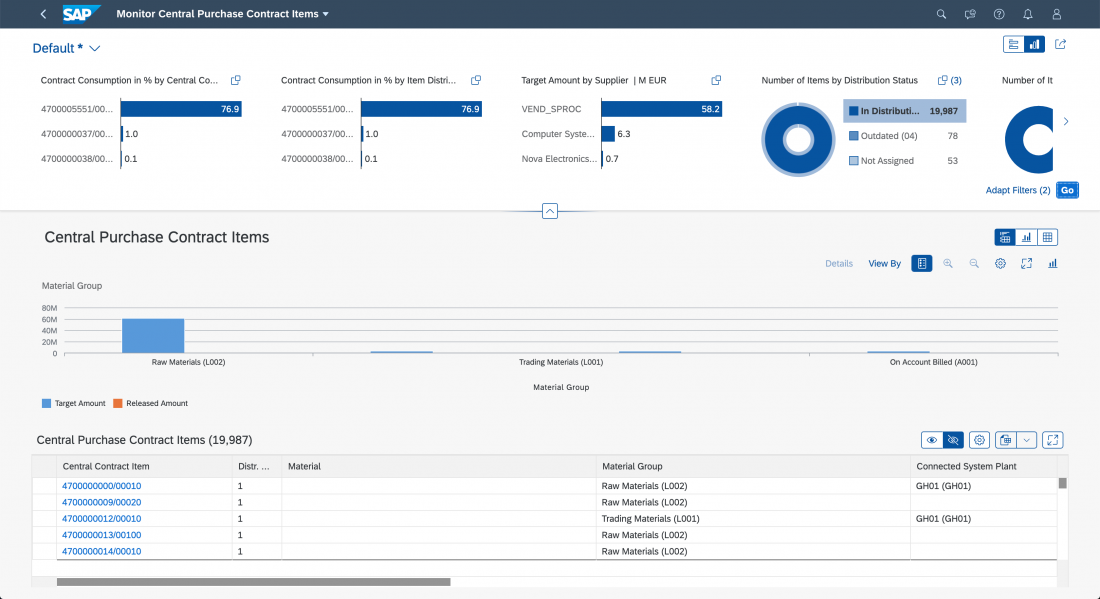

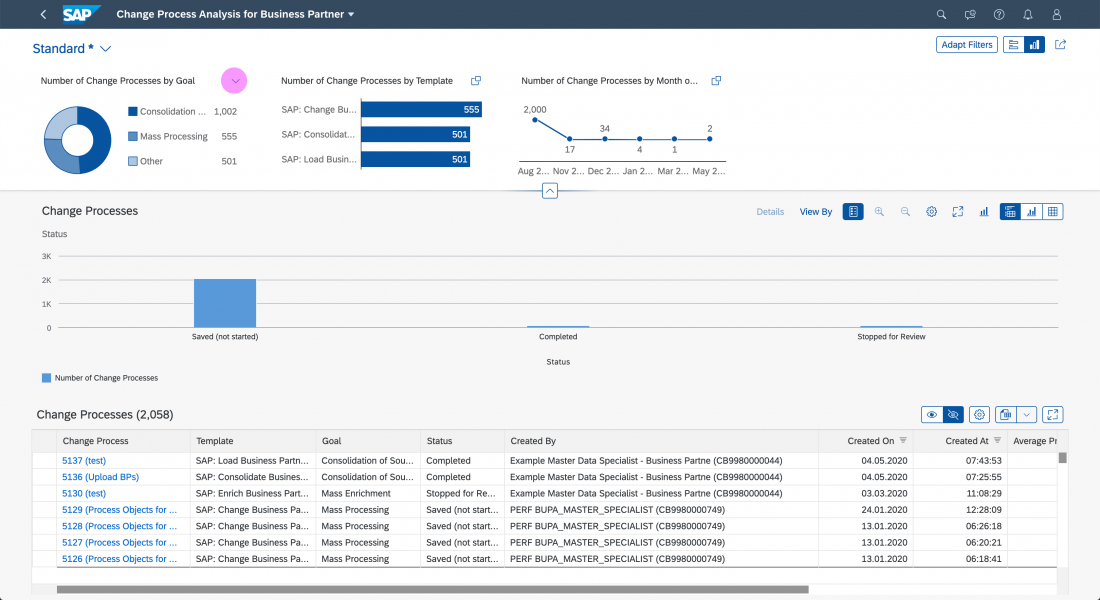

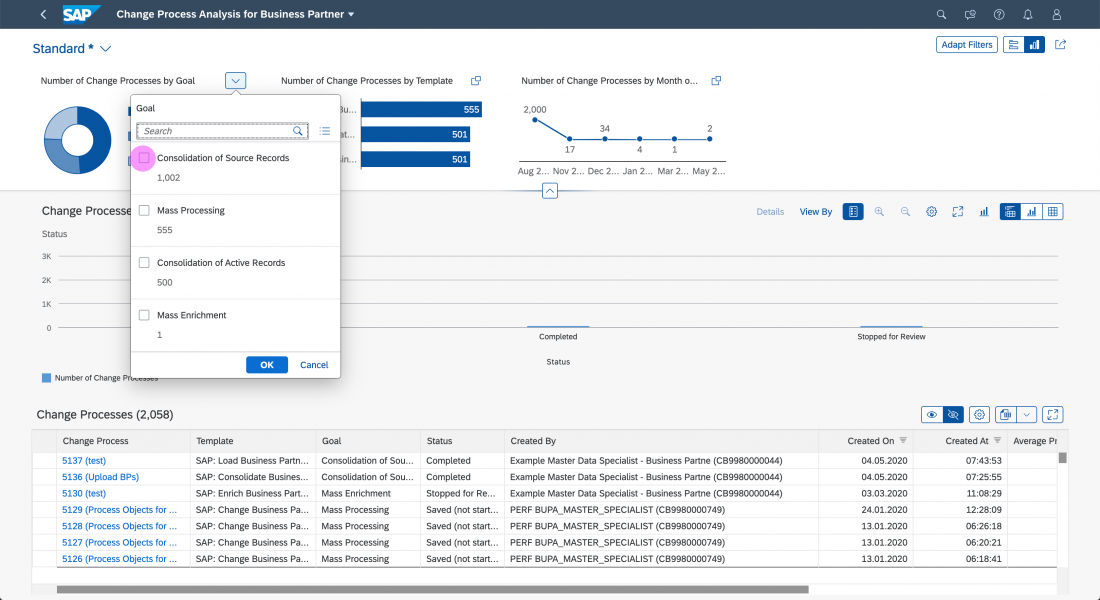

- Selecting, sorting, filtering, and grouping the AI results

- Rating the AI results

- Searching the AI results

All of these interactions can be tracked implicitly for retraining to display refined and optimized AI results.

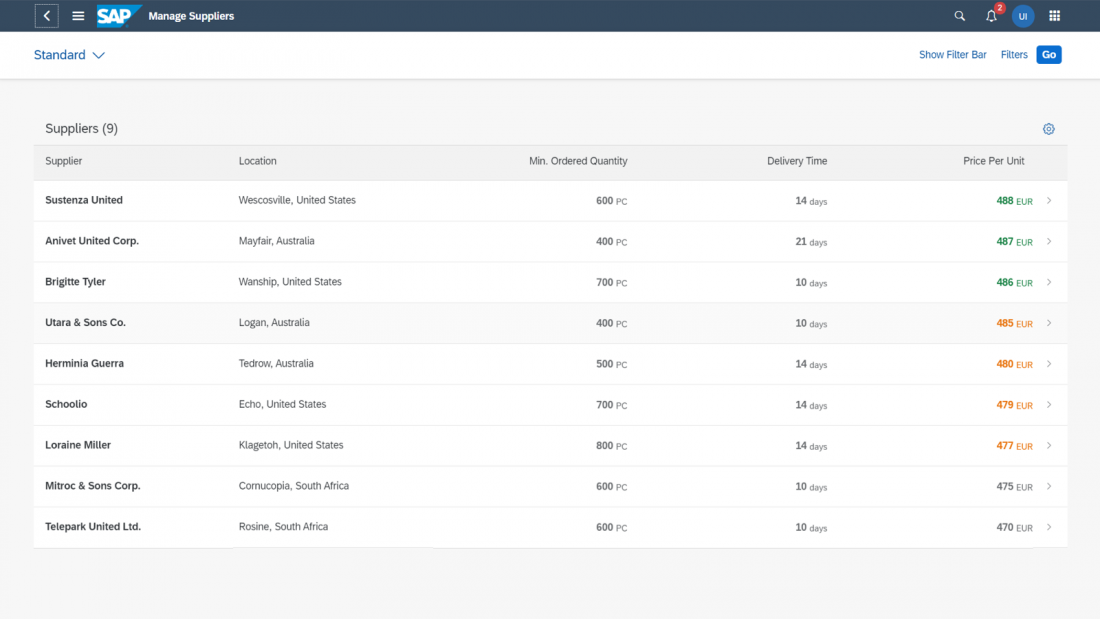

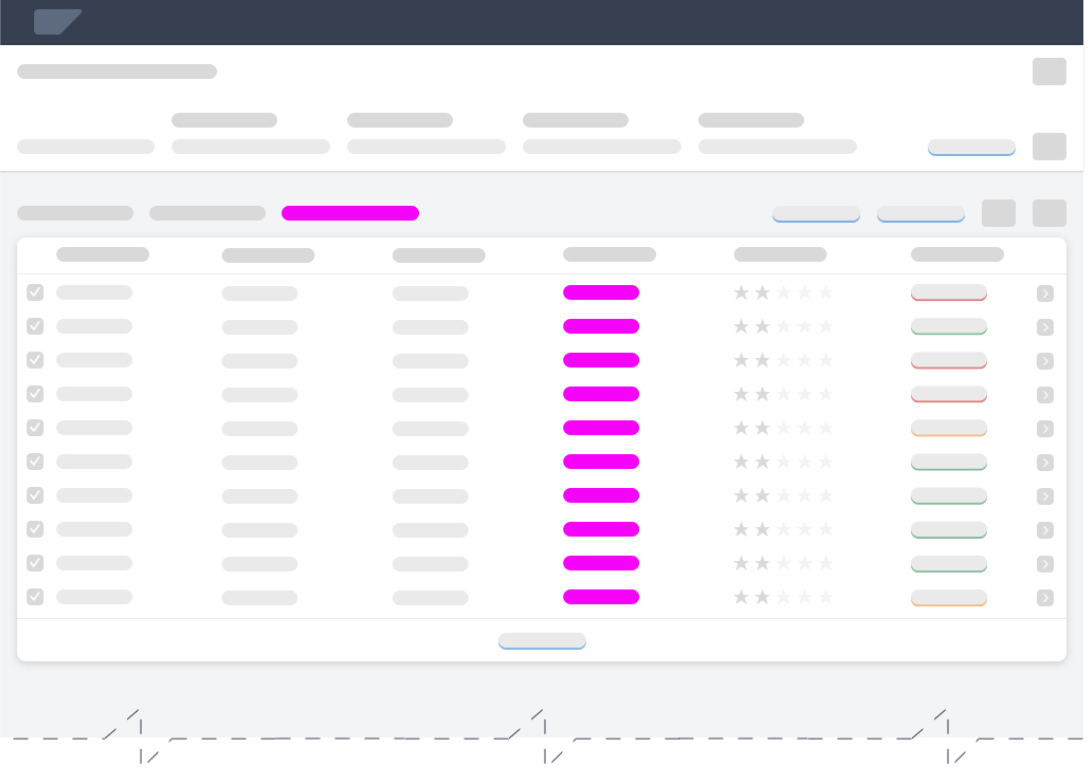

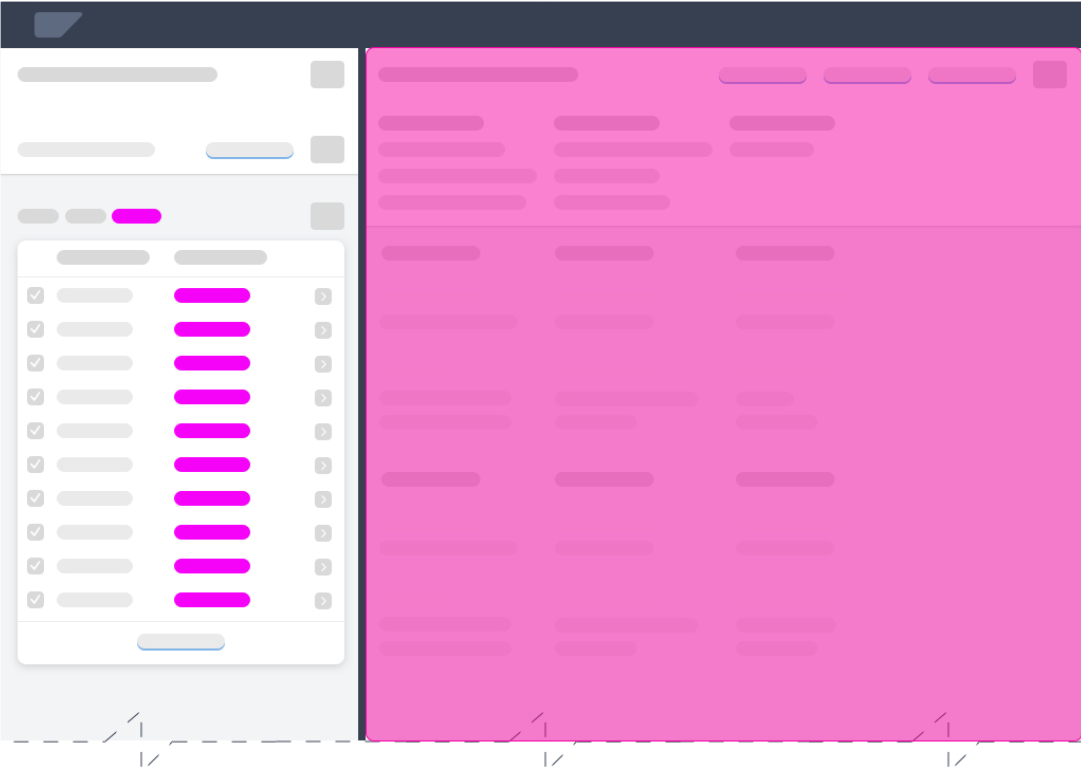

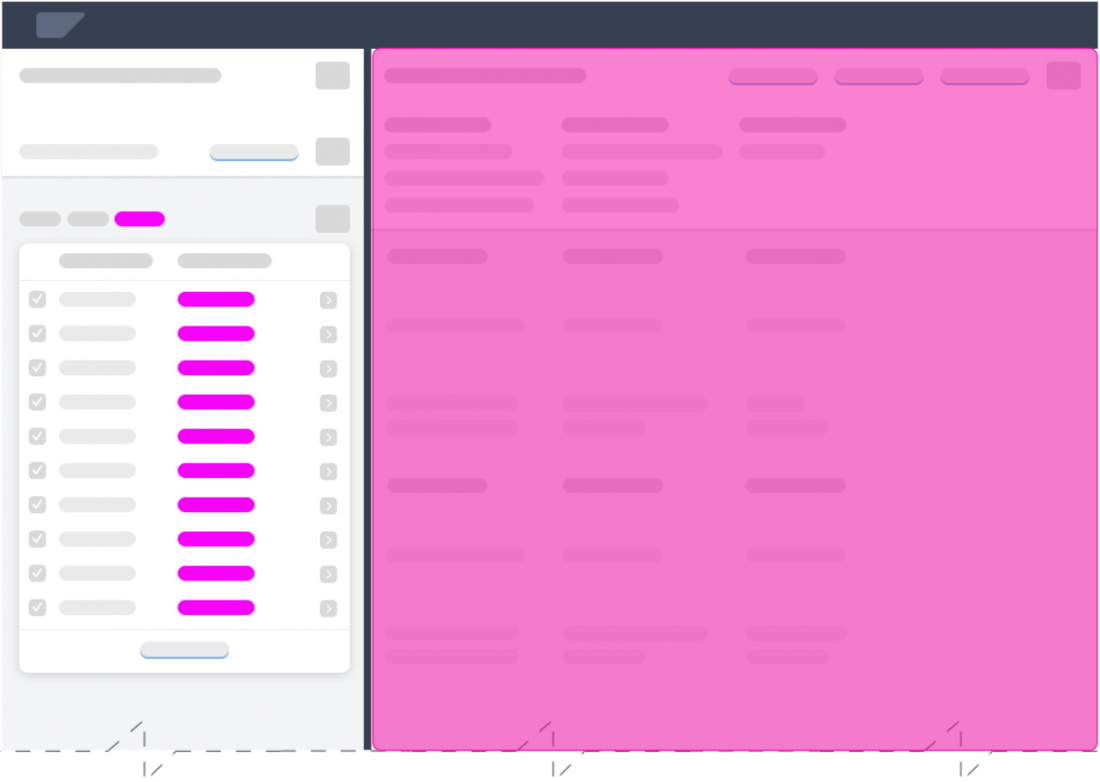

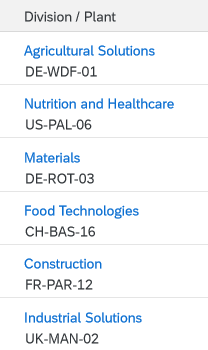

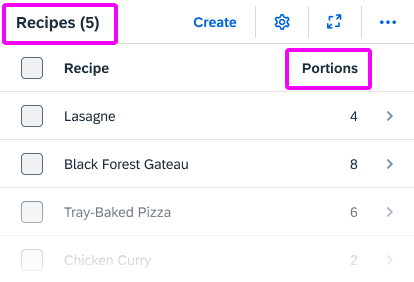

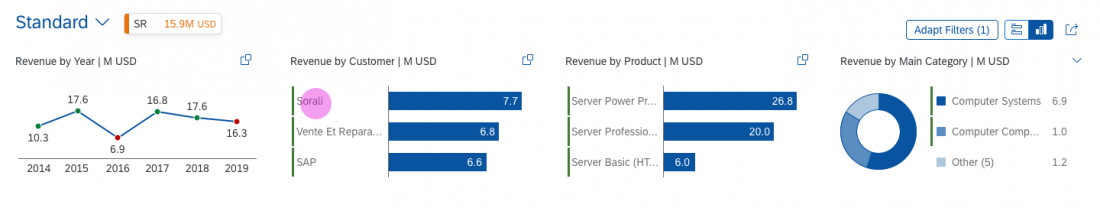

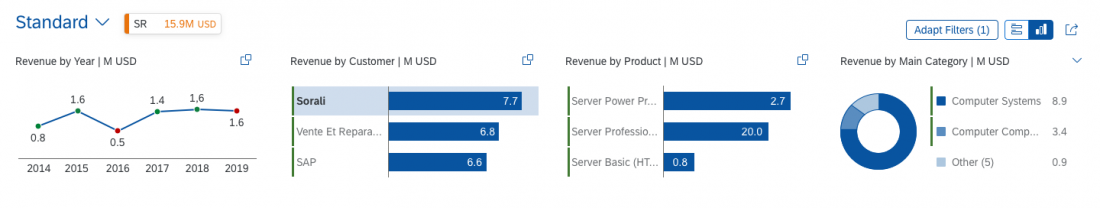

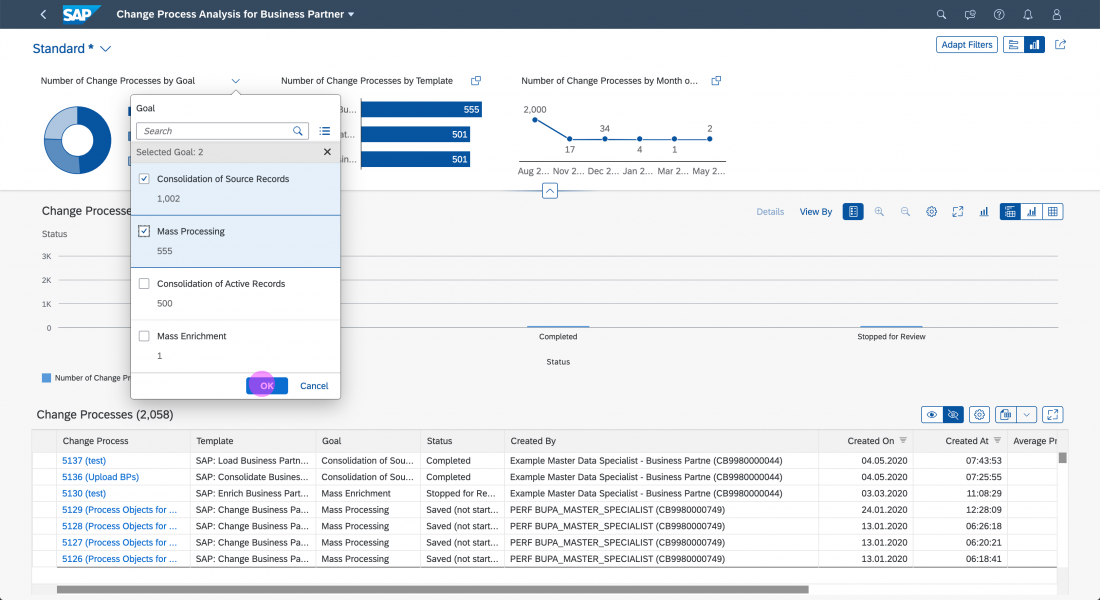

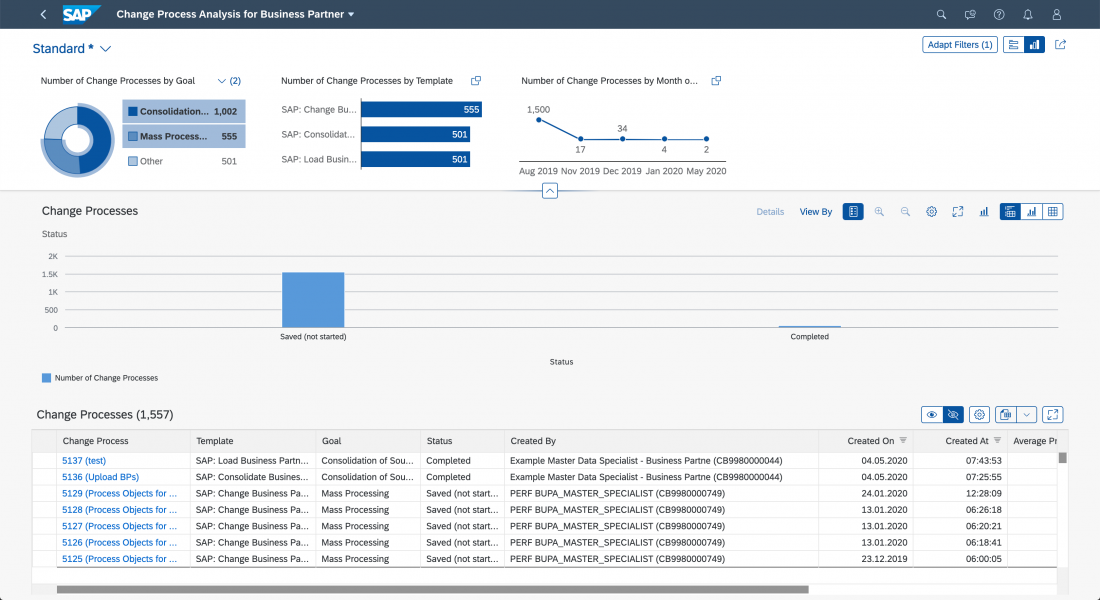

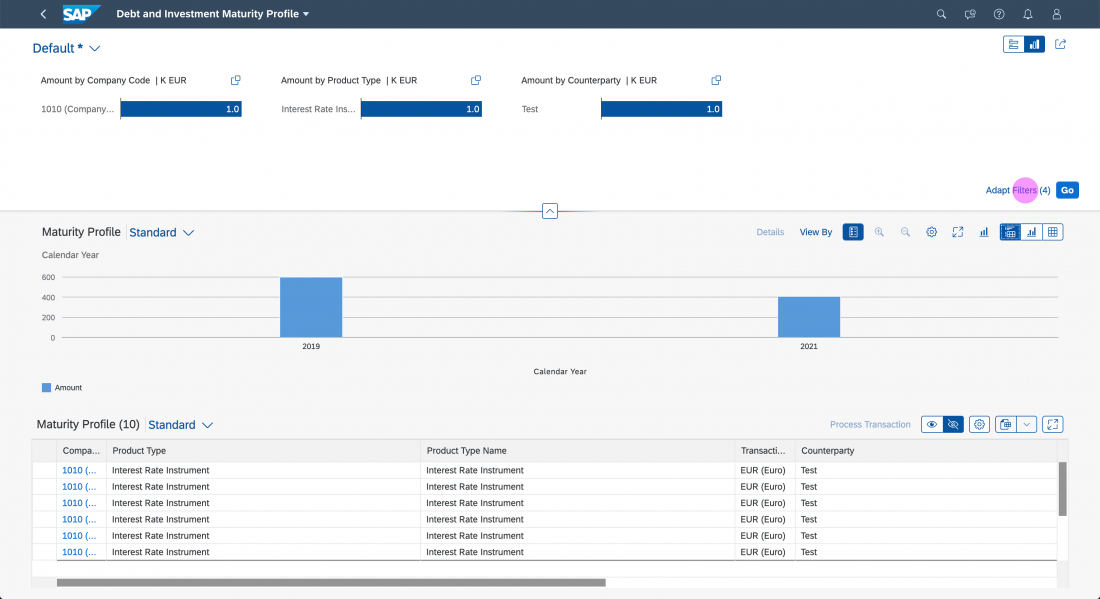

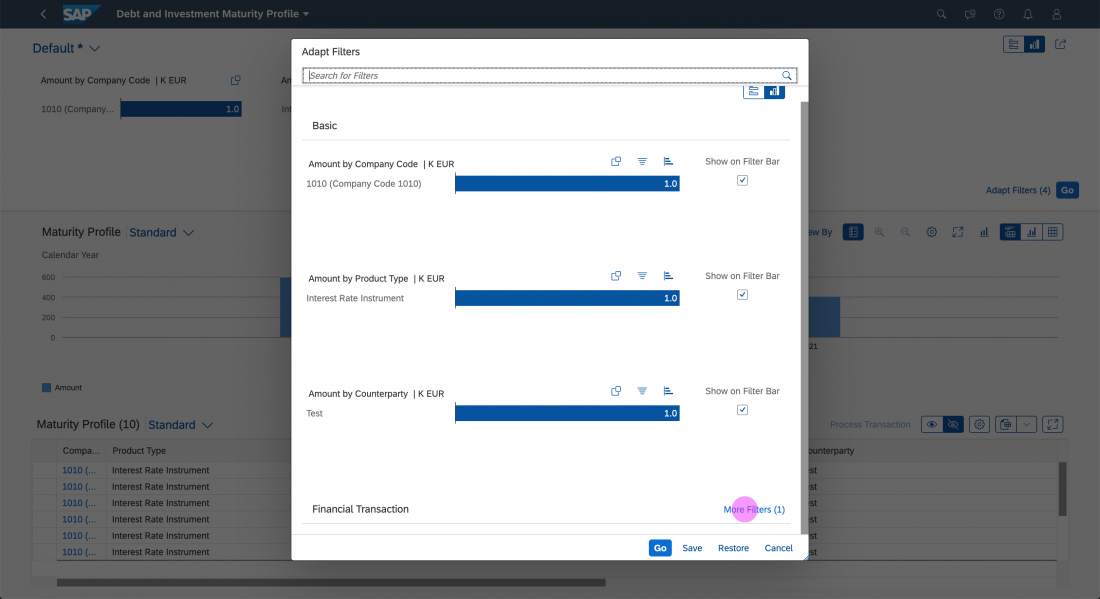

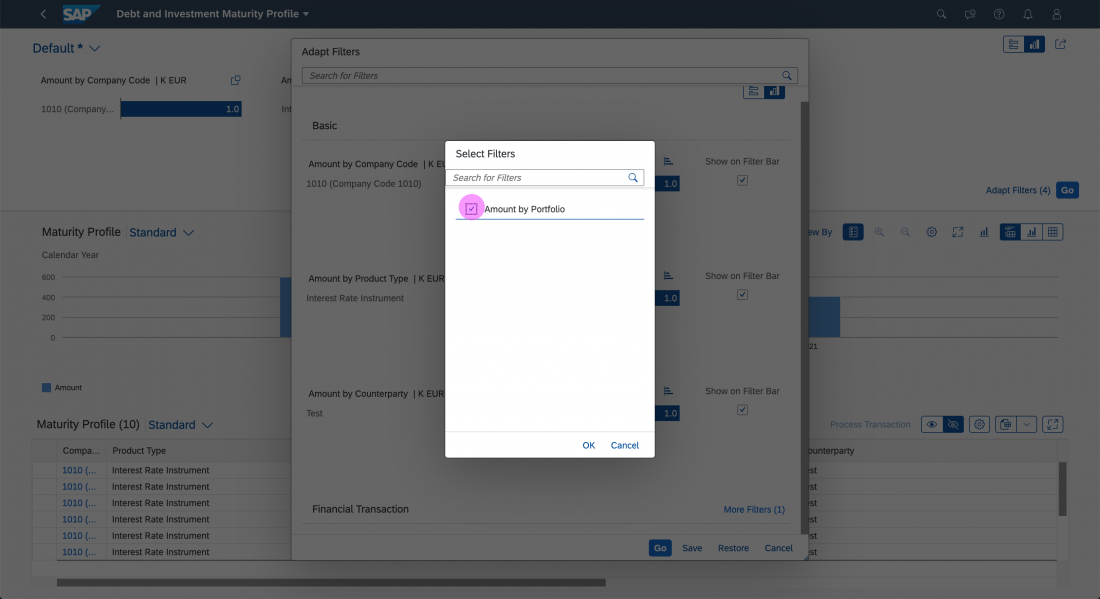

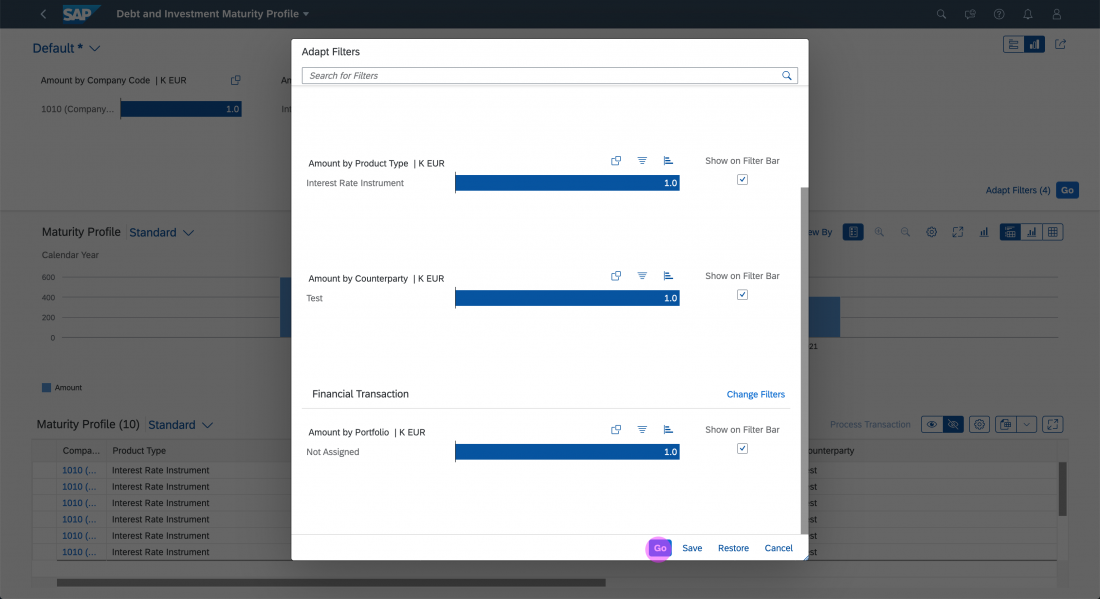

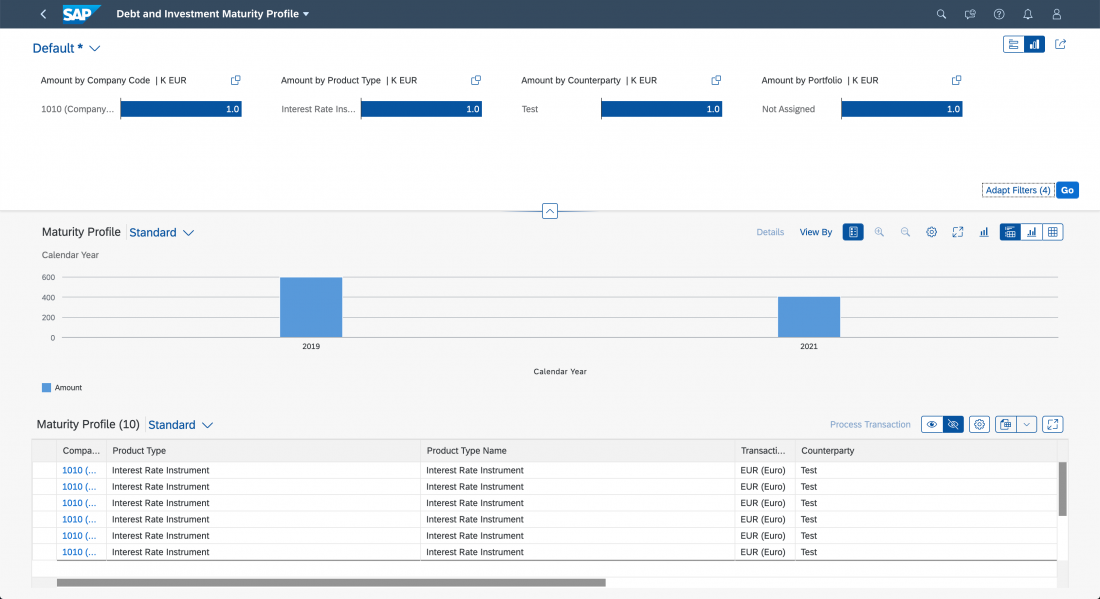

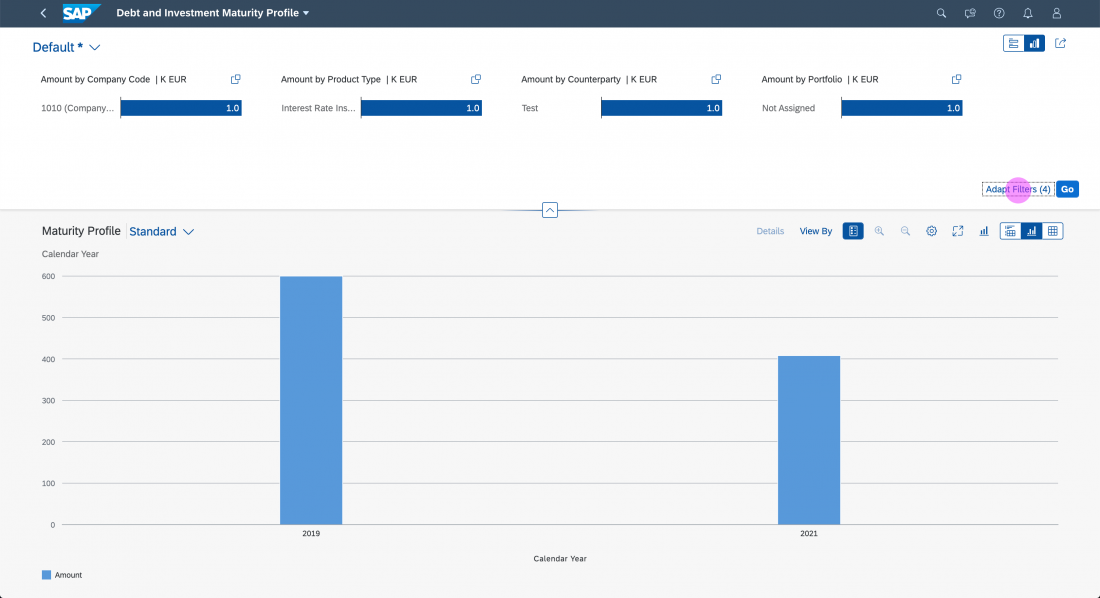

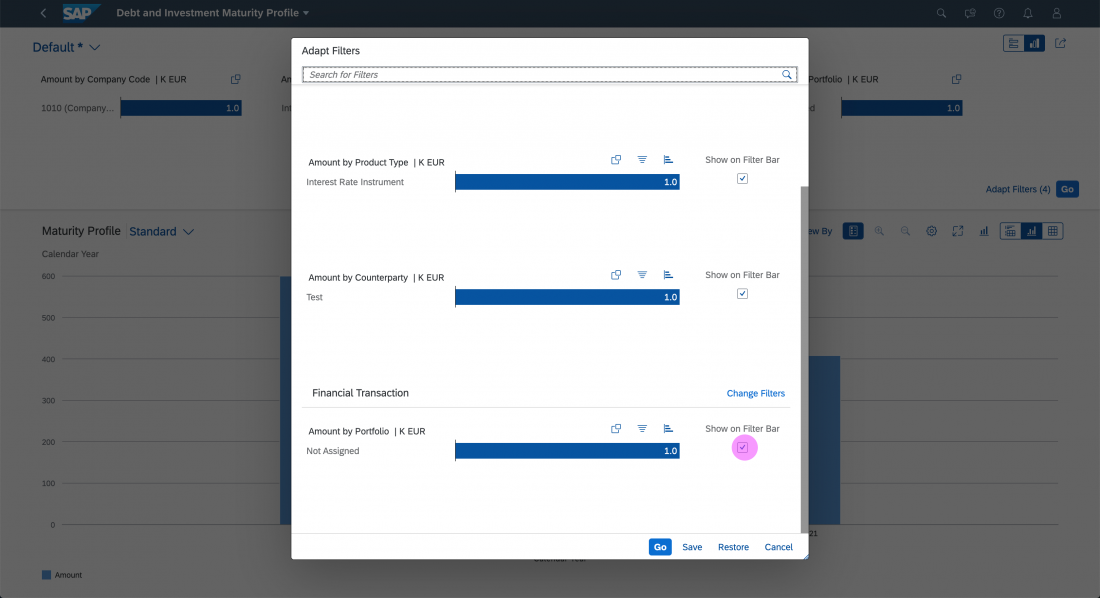

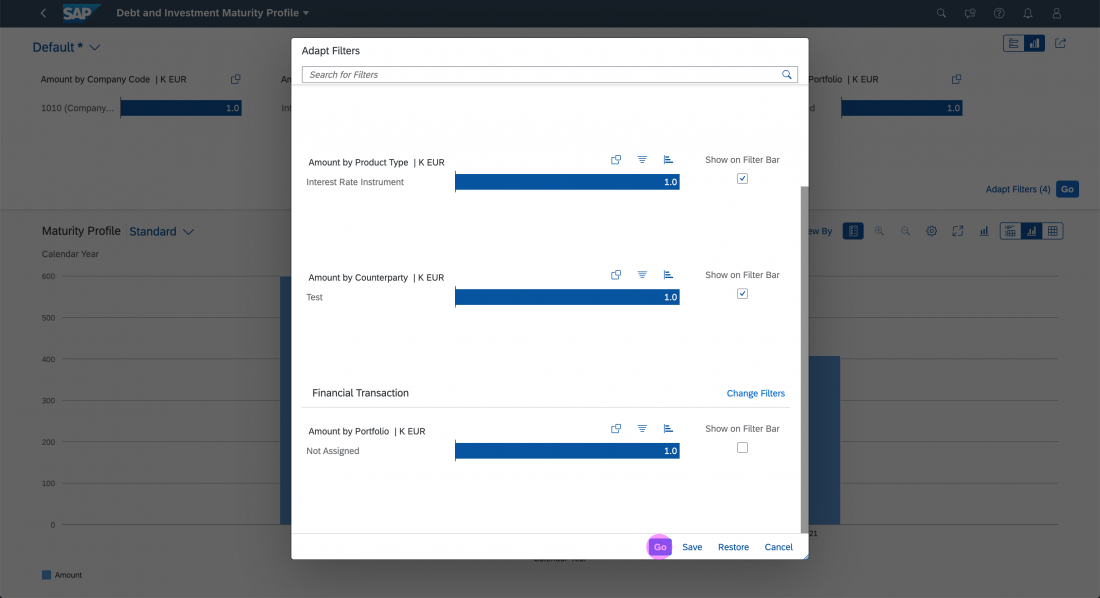

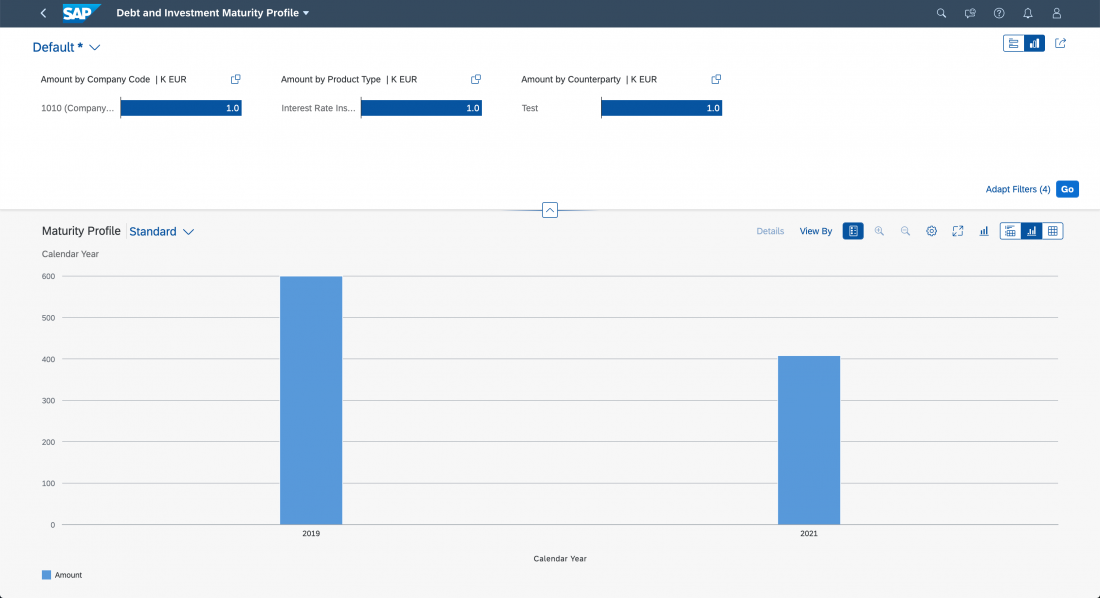

Selecting, sorting, filtering, and grouping the AI results

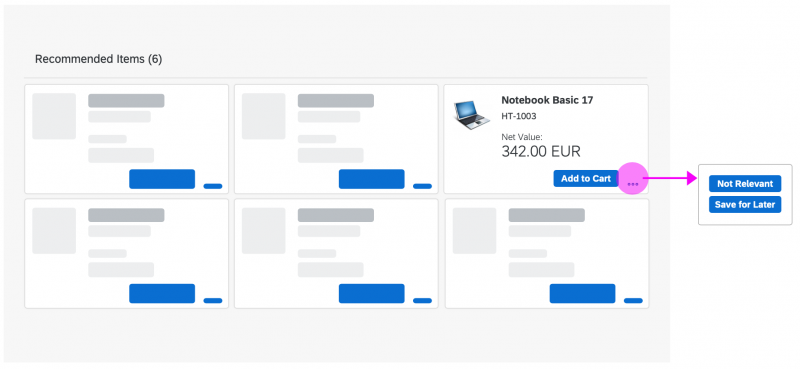

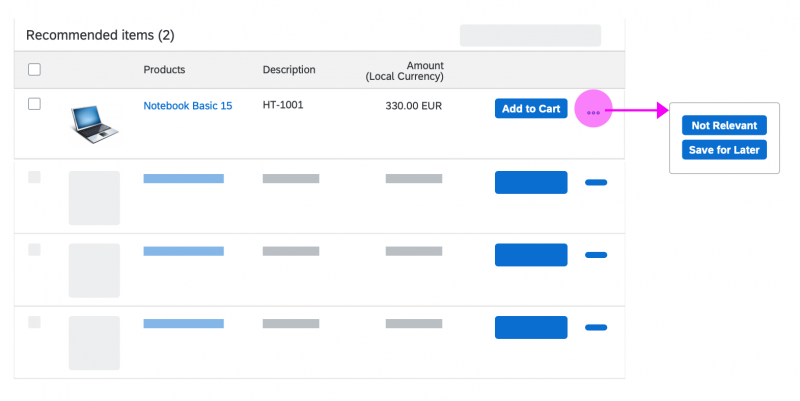

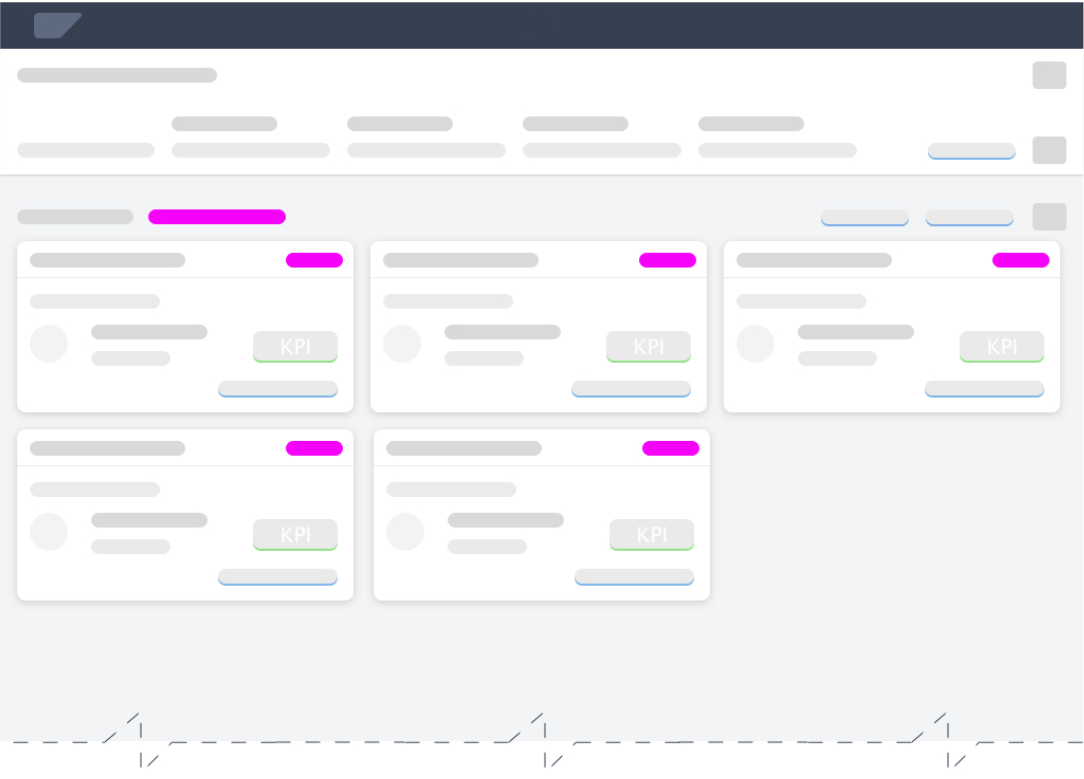

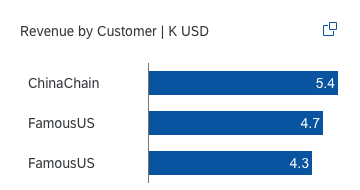

Users can personalize AI results by selecting, sorting, or filtering items. They can also perform actions to group the results, such as:

- Add to cart: The user moves the item to a more focused list, which will be processed soon.

- Not relevant: The user isn’t interested in the item suggested by the AI in the current context, but would like to see it in other contexts in the future.

- Save for later: The item isn’t relevant in the current context but is an interesting find. The user moves it to a less focused list to revisit it later.

Here, you can use a grid list to display the recommended list of items. Each block contains one item, with enough details for the user to make a decision. To collect local feedback, you can place a primary action button in the block to record the user’s actions. If you need to accommodate more actions, use an overflow menu.

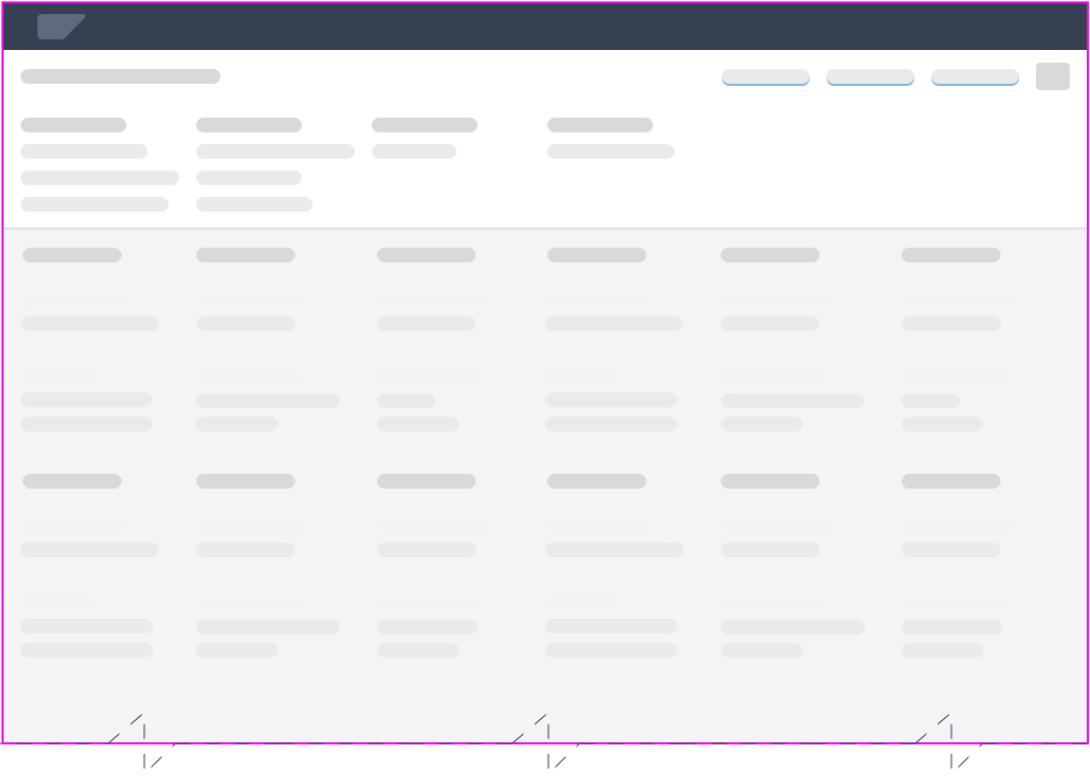

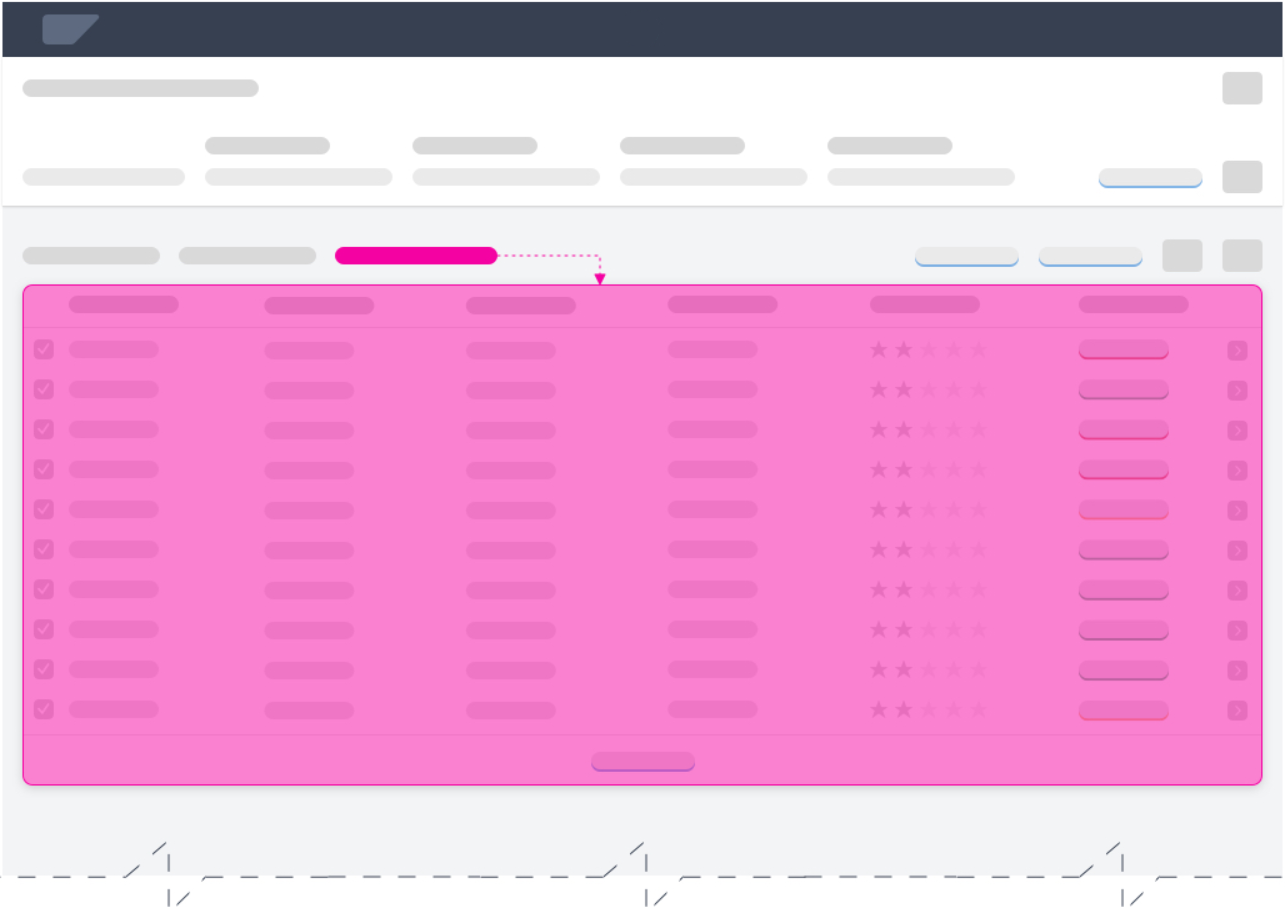

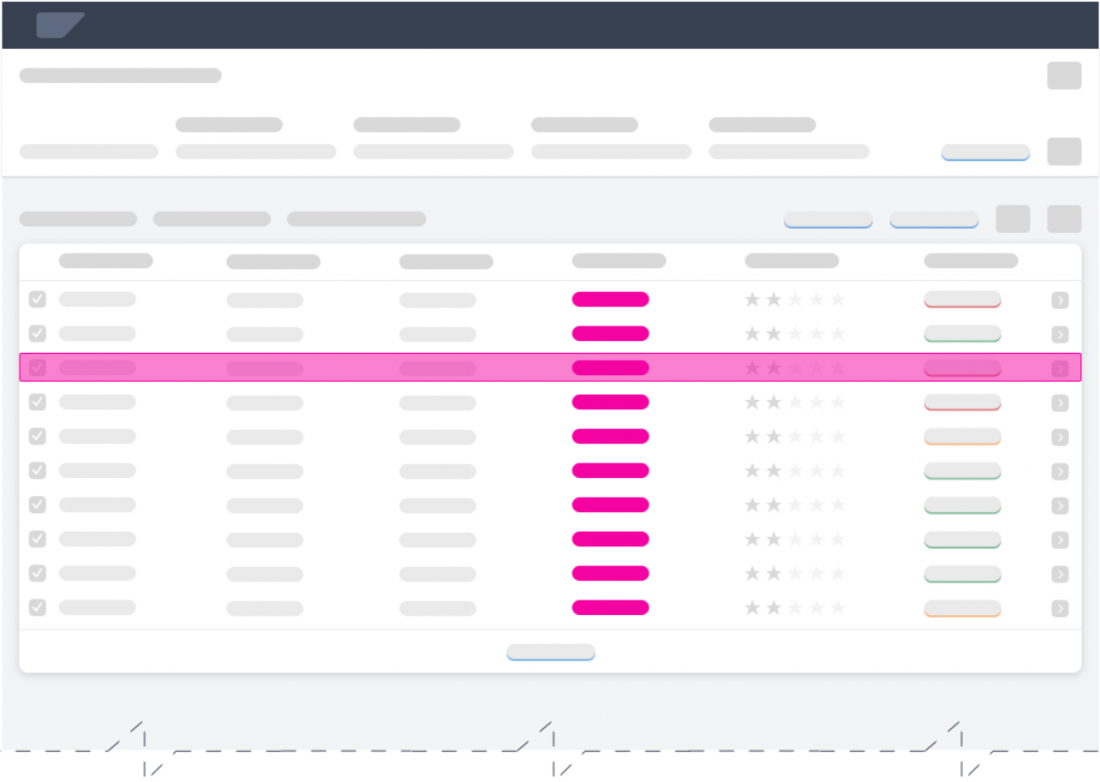

Alternatively, you can use a responsive table to display the list of recommended items. In this case, you can offer a primary action button at line item level to record user actions. If you need to accommodate more actions, use an overflow menu.
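The grouping actions recorded by these buttons could be translated into relevance signals along the following lines. This is a sketch only: the weights in `ACTION_WEIGHTS` are arbitrary assumptions, and scores are keyed by item and context so that a “Not relevant” action affects only the context in which it was given.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical weights; a real system would tune or learn these.
ACTION_WEIGHTS = {
    "add_to_cart": 1.0,      # strong positive signal
    "save_for_later": 0.5,   # interesting, but not for the current context
    "not_relevant": -1.0,    # negative for the current context only
}


def score_items(
    events: Iterable[Tuple[str, str, str]]
) -> Dict[Tuple[str, str], float]:
    """Sum action weights per (item_id, context) pair."""
    scores: Dict[Tuple[str, str], float] = defaultdict(float)
    for item_id, context, action in events:
        scores[(item_id, context)] += ACTION_WEIGHTS[action]
    return dict(scores)


events = [
    ("item1", "ctxA", "add_to_cart"),
    ("item1", "ctxA", "save_for_later"),
    ("item2", "ctxA", "not_relevant"),
    ("item2", "ctxB", "add_to_cart"),
]
scores = score_items(events)
```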

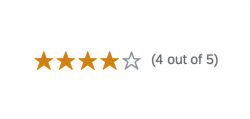

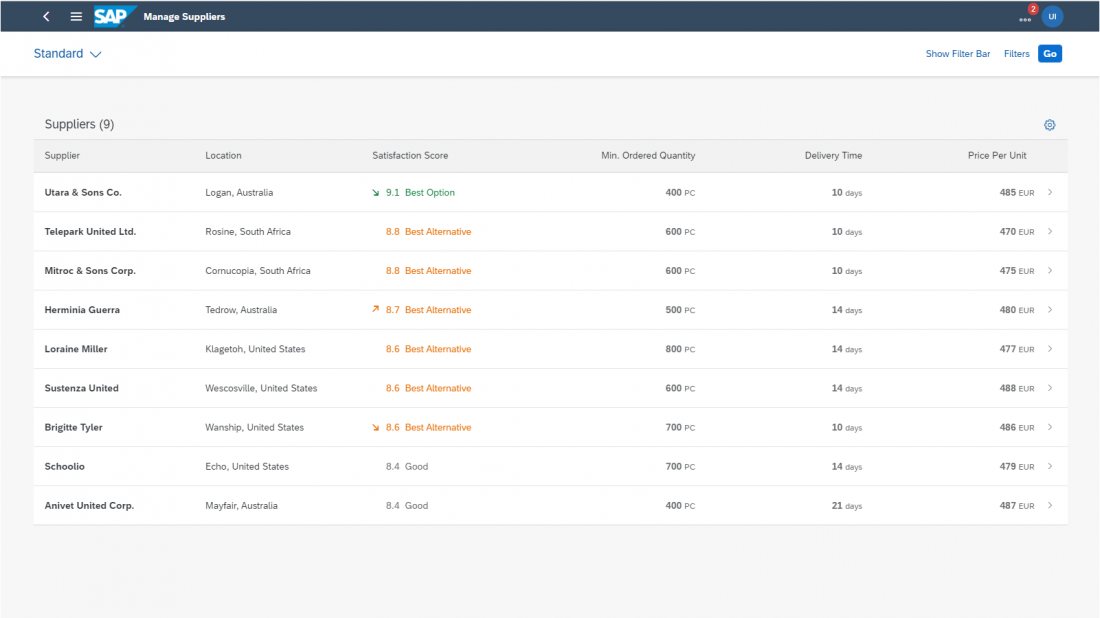

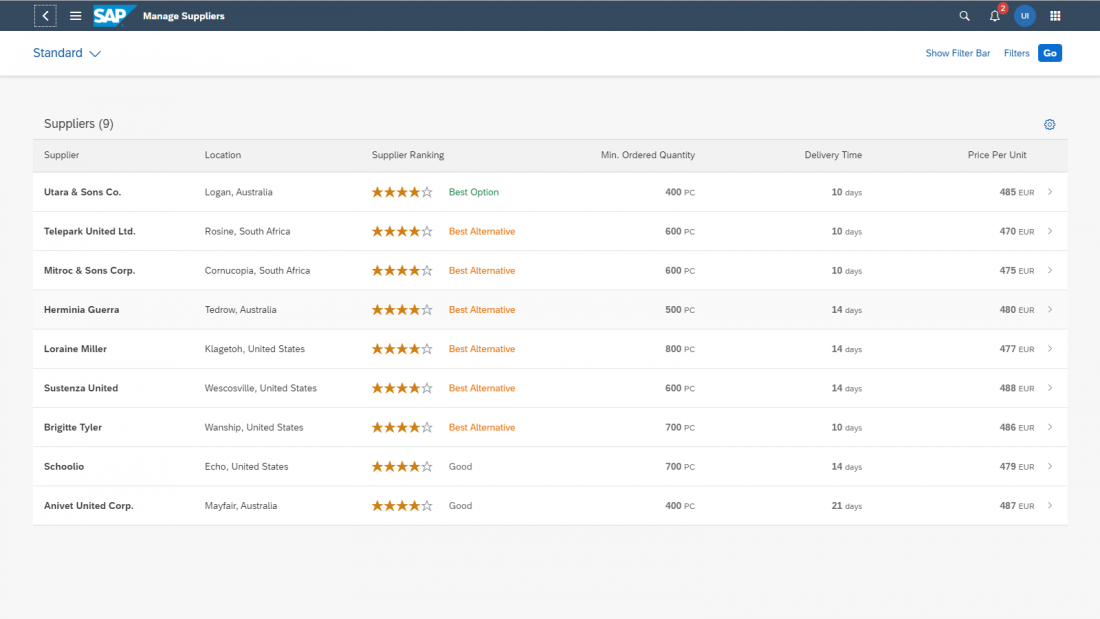

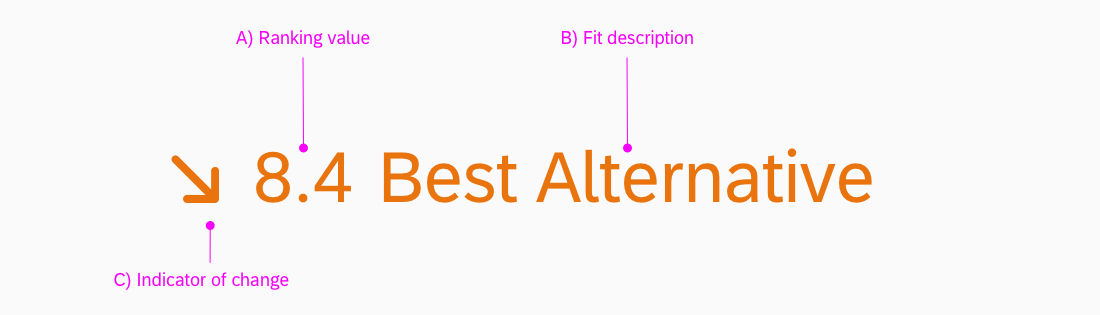

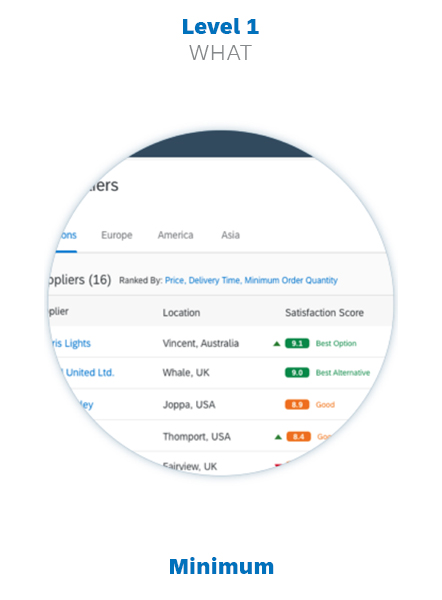

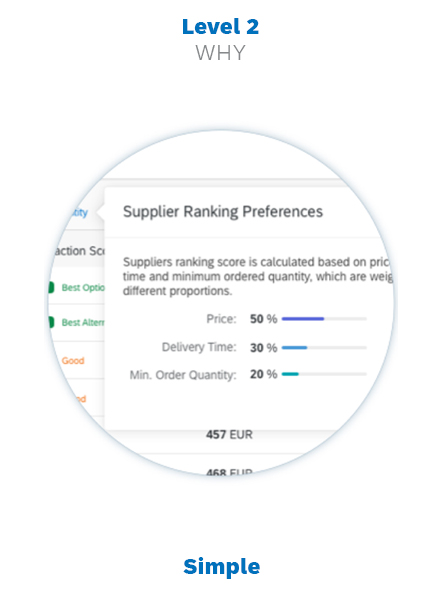

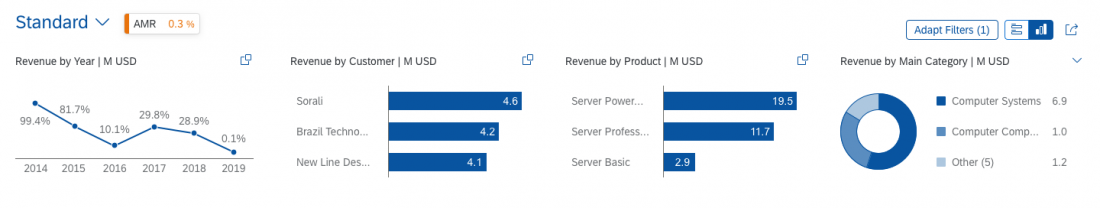

Rating the AI results

You can give users the option to rate AI results using a rating indicator. The rating can then be used to determine the usefulness of individual AI results, based on a numeric scale that applies to all results (highest to lowest).
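As a simple illustration, collected ratings could be averaged per result and sorted from highest to lowest. Averaging is an assumption made for this sketch; the guideline does not prescribe an aggregation method, and `rank_by_rating` is a hypothetical helper.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_by_rating(ratings: Dict[str, List[int]]) -> List[Tuple[str, float]]:
    """Average the ratings per result and order results highest to lowest."""
    # Results without any rating are skipped rather than treated as zero.
    averaged = [(rid, mean(vals)) for rid, vals in ratings.items() if vals]
    return sorted(averaged, key=lambda pair: pair[1], reverse=True)


ranking = rank_by_rating({"result_b": [2], "result_a": [5, 4], "result_c": []})
```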

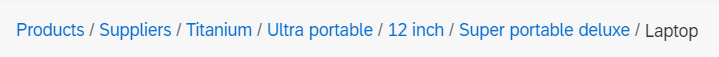

Searching the AI results

When a user searches for a specific item in a list of AI results, the system can use the search information to return similar results or optimize the order of results based on the user’s interests and search history.

Measure Observable Behavior

Once a user starts examining the AI results, the system can learn by observing the user’s behavior as they browse through the results. The system can track a variety of interaction variables, known collectively as clickstream data. These include viewed items, browsing patterns, items retained for future reference, items recommended to a friend, the time spent on individual tasks, and more. Insights derived from clickstream data analysis are used to improve the quality of future AI output.

Since this feedback is entirely implicit, no UI interactions are defined for this pattern. It is up to individual application domains to explore how clickstream data analysis could feed into their own AI models.
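To give a sense of what such an analysis might involve, the sketch below derives the time spent per item from a list of timestamped view events. The event format (seconds, item ID) and the function `dwell_times` are assumptions made for illustration only.

```python
from typing import Dict, List, Tuple


def dwell_times(views: List[Tuple[float, str]]) -> Dict[str, float]:
    """Sum the seconds spent on each item.

    `views` must be sorted by timestamp. The last view has no known end
    time, so it contributes nothing to the totals.
    """
    totals: Dict[str, float] = {}
    # Pair each view with the next one; the gap is the time spent on the item.
    for (start, item), (end, _next_item) in zip(views, views[1:]):
        totals[item] = totals.get(item, 0.0) + (end - start)
    return totals


views = [(0, "item_a"), (10, "item_b"), (25, "item_a"), (30, "item_c")]
totals = dwell_times(views)
```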

Note: This implicit feedback collection method is not transparent to end users and is likely to require advance user consent. Users are more likely to trust and accept SAP intelligence if you always ask for explicit user consent before collecting feedback data and using it to optimize AI results.
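One way to enforce this in an application is to gate all tracking on recorded consent. The following is a minimal sketch under that assumption; `FeedbackCollector` and its methods are hypothetical, and a real implementation would also need to satisfy the applicable data protection regulations.

```python
from typing import List, Set, Tuple


class FeedbackCollector:
    """Stores implicit feedback events only for users who have consented."""

    def __init__(self) -> None:
        self._consented: Set[str] = set()
        self.events: List[Tuple[str, str]] = []

    def grant_consent(self, user_id: str) -> None:
        self._consented.add(user_id)

    def revoke_consent(self, user_id: str) -> None:
        self._consented.discard(user_id)

    def track(self, user_id: str, event: str) -> bool:
        # Drop the event silently if the user has not given consent.
        if user_id not in self._consented:
            return False
        self.events.append((user_id, event))
        return True


collector = FeedbackCollector()
before = collector.track("user1", "viewed:item_a")  # no consent yet
collector.grant_consent("user1")
after = collector.track("user1", "viewed:item_a")
```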
